Assembly launches advocacy consulting platform

The media agency’s foray into brand integrity comes as ad agencies offer services focused on purpose and ethics.

More strategic approach

ACT was created to proactively guard public figures and brands against woke washing, greenwashing, rainbow washing and other types of performative advocacy. As companies dive deeper into social issues, ACT uses data to help clients take a more strategic approach to their corporate social responsibility efforts, identify what drives consumer trust and protect their brand reputation.

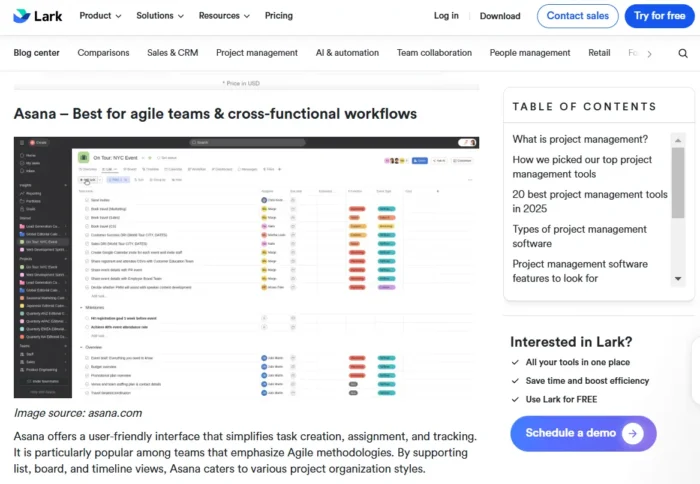

Using STAGE, Assembly’s performance operating system, ACT tracks cause- or policy-forward advertising content, spend and performance across mediums to better inform buying and messaging strategy. It also identifies what percentage of advertising has favorable or unfavorable effects on brand reputation.

“It’s natural for clients and businesses to try to get to the solution as fast as they possibly can, and our point of view is you don’t race to the external solution before you have your internal house in order,” Acampora said. “It requires more data and an informed point of view in real time, which is what ACT does.”

Advertising’s foray into purpose

Assembly’s new platform is part of a trend of the advertising sphere bleeding into public relations, an area that has been throwing around terms like “purpose” and “corporate responsibility” for years.

This week, global brand consultancy Interbrand also launched its Brand Integrity & Ethics offering with Principia Advisory. The ethics practice helps brands through potential crises that can come about when companies commit to social change.

Marla Kaplowitz, 4A's President and CEO, isn’t surprised by the interest because brand integrity and ethics have always been critical in marketing.

“Given the increased use and application of generative AI, there's even greater scrutiny on the veracity of communication and information shared—especially as misinformation in the form of AI hallucinations raise considerable concerns,” Kaplowitz said. “Now more than ever, agencies want to ensure employees and partners are educated as well as understand the implications and ramifications of unethical practices.”
