Gemini Live Can Now 'See' Your Phone (to a Point)

Gemini Live can now identify what's on your phone screen (and what you're pointing your camera at)—but don't rely too much on its answers.

Credit: Google

Gemini Live is the chatty, natural conversation mode inside Google's Gemini app, and it just got a significant upgrade: The AI can now answer questions in real time about what it sees through your phone's camera and on your phone's screen. The feature is coming first to Google Pixel 9 and Samsung Galaxy S25 phones.

You've long been able to offer up photos and screenshots for Gemini to analyze, but it's the real-time aspect of the upgrade that makes this most interesting—it's as if the AI bot can actually see the world around you. You may remember some of this functionality was shown off by Google under the Project Astra name last year.

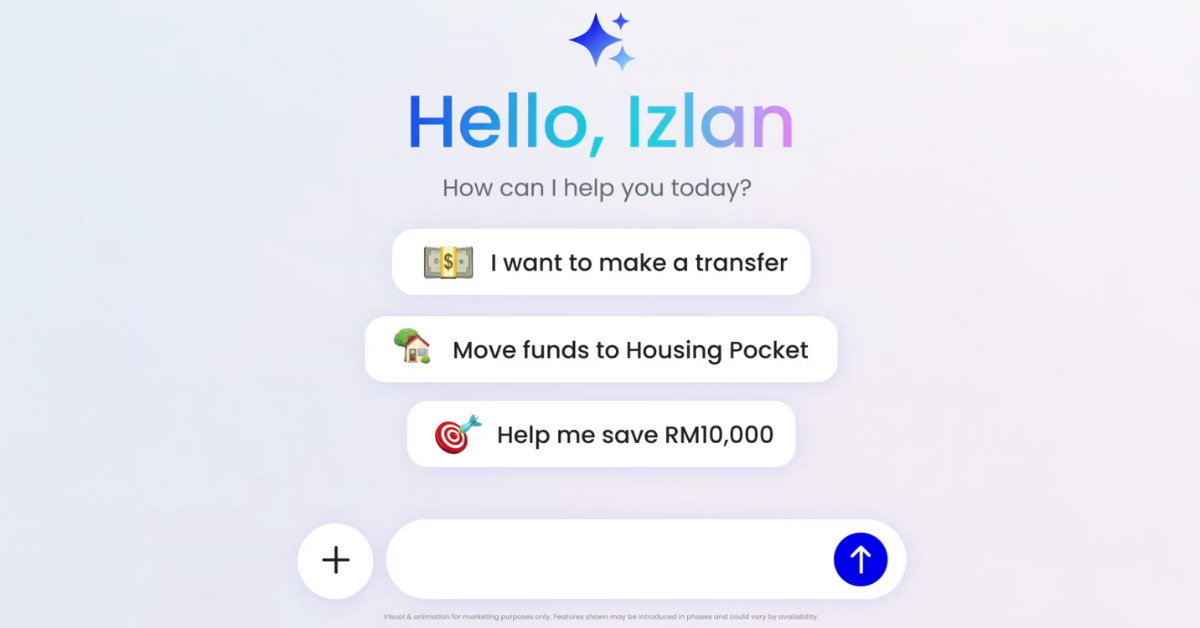

There are plenty of ways to use Gemini Live. Credit: Samsung

Samsung says it "feels like a trusted friend who's always ready to help," while Google says you could use the improved features to get personalized shopping advice, troubleshoot something that's broken, or organize a messy space. You can have a discussion with Gemini Live about anything you can point your camera at.

It's now available as a free update on Pixel 9 and Galaxy S25 phones, with further Android devices getting it soon—though wider availability will be tied to a Gemini Advanced subscription. As yet, there's no definitive list of which phones are in line for the update, though presumably it needs a certain level of local processing power to work. There's no word yet on it coming to the Gemini app for the iPhone.

As always, the official advice is to "check responses for accuracy," so just because there's a fancy new interface to make use of doesn't mean the Gemini AI is any more reliable than it was before. You're also going to need an active internet connection for this to work, so the app can get some help from the web.

Two new buttons have been added for camera and screen sharing. Credit: Lifehacker

The feature is easy to find: You can launch the Gemini Live interface by tapping the button to the far right of the input box in any Gemini chat (it looks a bit like a sound wave). From there, you'll see two new icons at the bottom: One for accessing the camera (the video camera icon), and one for accessing the phone's screen (the arrow inside a rectangle).

Close the Gemini Live interface, and you'll find your conversation has been saved as a standard text chat, so you can refer back to it if needed. The new features have already appeared on my Google Pixel 9, so I tested them with questions I already knew the answers to, checking for any unhelpful hallucinations.

Putting Gemini Live to the test

First up, I loaded the camera interface and asked Gemini Live about the Severance episode I was watching on my laptop. Initially, the AI thought I was watching You—presumably confusing its Penn Badgleys with its Adam Scotts—but it quickly fixed its mistake, identifying the right show and naming the actors on screen.

I then asked about a package with a UN3481 label: lithium-ion batteries packed inside equipment (over-ear headphones, in this case). Gemini Live correctly figured out that lithium-ion batteries were involved, needing "extra care" when handled, but gave no more information. When pushed, it said these batteries were packed separately, not in equipment. Wrong answer, Gemini Live—you're thinking of code UN3480.


Gemini Live figured out how to reset a Charge 6 (this is a transcript of the live conversation). Credit: Lifehacker

Gemini Live was also able to tell me how to reset my Fitbit Charge 6 when I pointed my phone camera at it (though the AI originally thought it was a Fitbit Charge 5, which is an easy enough mistake to make). It's easy to see how this could come in handy if you're trying to troubleshoot gadgets and aren't quite sure of their makes and model numbers.

Sharing your screen with Gemini Live is interesting. The app shrinks to a small widget, so you can use your phone as normal, and then ask questions about anything on the screen. Gemini Live did a good job of identifying which apps I was using, and some of the content in those apps, like movie posters and band photos. It also accurately translated a social media post in a foreign language for me.

On a website covering the recent Leicester v Newcastle soccer match, Gemini Live correctly told me the score and which players scored the goals, all information that was already on screen. When I asked when the match was played, though, the AI got confused and told me it happened on May 22, 2023 (the same teams playing, but nearly two years ago).

Gemini Live can see what's on your phone's screen, with permission. Credit: Lifehacker

There was no faulting the speed with which Gemini Live came back with answers, or the calm and reassuring manner in which it responded, but there are still issues with the quality of the results. Of course, the convenience of pointing the camera and saying "how do I fix this?" rather than crafting a complex Google query means that many people may well prefer using it even with the mistakes, but it's still a worry.

Essentially, this is just an enhanced, instant version of visual search: Previously, you might have typed "UN3481 label" into Google for the same query. But whereas a traditional list of blue links shows you where the information comes from, so you can judge how reliable and authoritative it is, Gemini Live is much more of a closed box that doesn't show its workings. That interface makes it feel almost like magic at times, but having to double-check everything it says isn't ideal.

David Nield

David Nield is a technology journalist from Manchester in the U.K. who has been writing about gadgets and apps for more than 20 years.
