Google AI Mode Gets Visual + Conversational Image Search via @sejournal, @MattGSouthern

Google adds visual search to AI Mode, letting you use images and natural language in one conversation. Rolling out in U.S. English. The post Google AI Mode Gets Visual + Conversational Image Search appeared first on Search Engine Journal.

Google announced that AI Mode now supports visual search, letting you use images and natural language together in the same conversation.

The update is rolling out this week in English in the U.S.

What’s New

Visual Search Gets Conversational

Google’s update to AI Mode aims to address the challenge of searching for something that’s hard to describe.

You can start with text or an image, then refine results naturally with follow-up questions.

Robby Stein, VP of Product Management for Google Search, and Lilian Rincon, VP of Product Management for Google Shopping, wrote:

“We’ve all been there: staring at a screen, searching for something you can’t quite put into words. But what if you could just show or tell Google what you’re thinking and get a rich range of visual results?”

Google provides an example that begins with a search for “maximalist bedroom inspiration” and is then refined with “more options with dark tones and bold prints.”

Image Credit: Google

Each image links to its source, so searchers can click through when they find what they want.

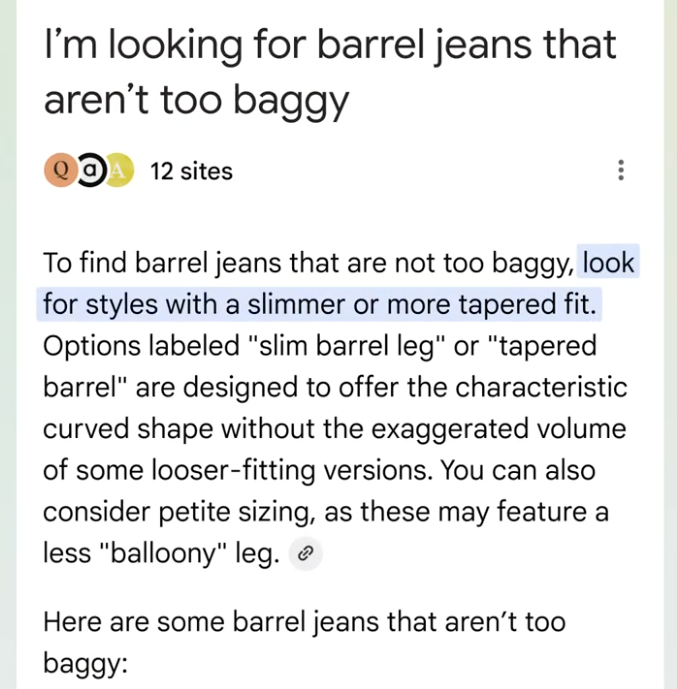

Shopping Without Filters

Rather than using conventional filters for style, size, color, and brand, you can describe products conversationally.

For example, asking “barrel jeans that aren’t too baggy” will find suitable products, and you can narrow down options further with requests like “show me ankle length.”

Image Credit: Google

This experience is powered by the Shopping Graph, which spans more than 50 billion product listings from major retailers and local shops.

The company says over 2 billion listings are refreshed every hour to keep details such as reviews, deals, available colors, and stock status up to date.

Technical Foundation

The visual capabilities build on Lens and Image Search and now incorporate Gemini 2.5’s multimodal and language understanding.

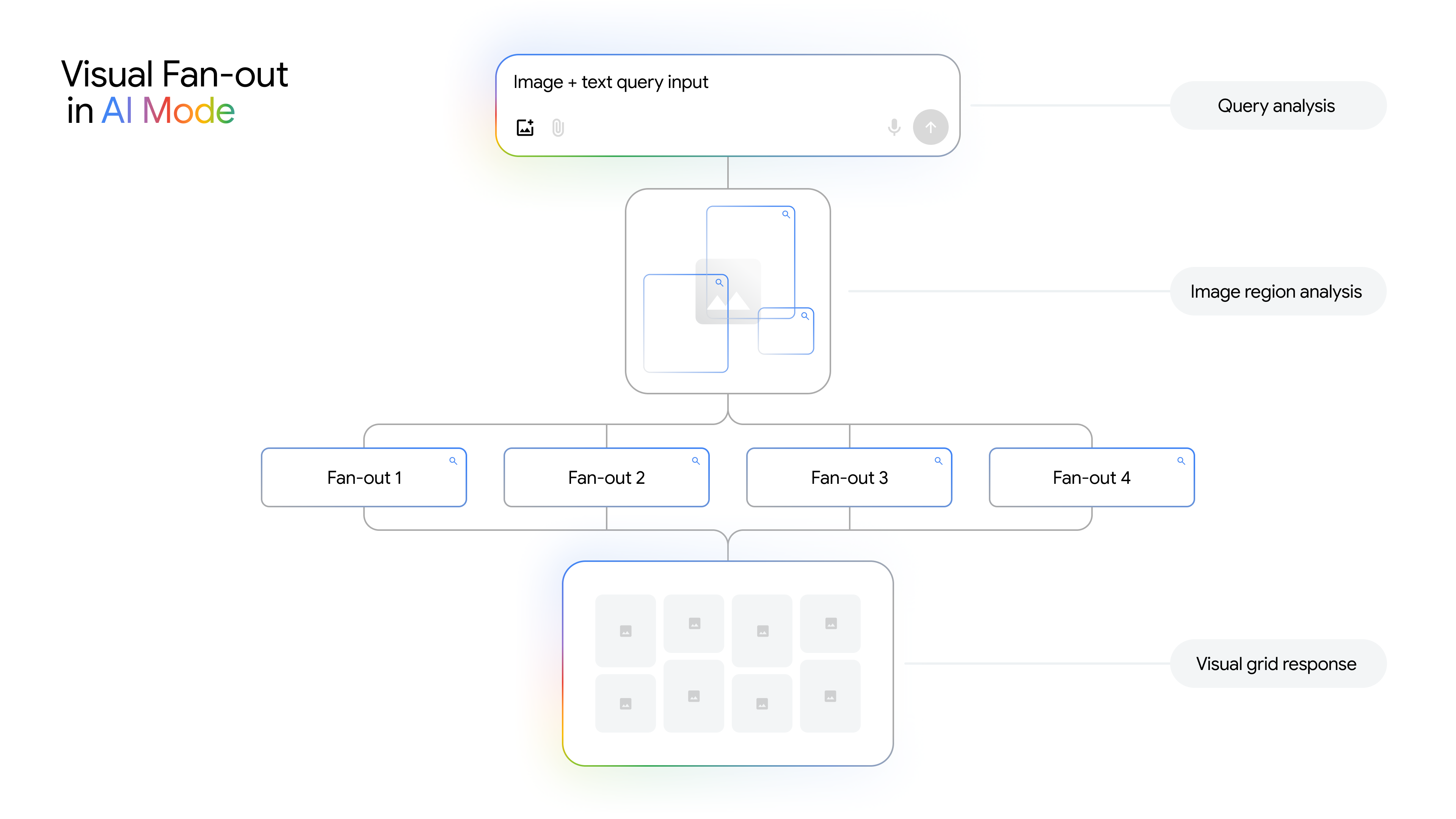

Google is also introducing a technique called “visual search fan-out,” in which it runs several related queries in the background to better understand what’s in an image and the nuances of your question.

Plus, on mobile devices, you can search within a specific image and ask conversational follow-ups about what you see.

Image Credit: Google

Additional Context

In a media roundtable attended by Search Engine Journal, a Google spokesperson shared the following details.

When a query includes subjective modifiers, such as “too baggy,” the system may use personalization signals to infer what you likely mean and return results that better match that preference. The spokesperson didn’t detail which signals are used or how they are weighted.

For image sources, the systems don’t explicitly differentiate real photos from AI-generated images for this feature. However, ranking may weigh source authority and other quality signals, which can make real photos more likely to appear in some cases. No separate policy or detection standard was shared.

Why This Matters

For SEO and ecommerce teams, images are becoming even more essential. As Google gets better at understanding detailed visual cues, high-quality product photos and lifestyle images may boost your visibility.

Since Google refreshes more than 2 billion Shopping Graph listings every hour, it’s important to keep your product feeds accurate and up to date.

As search continues to become more visual and conversational, remember that many shopping experiences might begin with a simple image or a casual description instead of exact keywords.

Looking Ahead

The new experience is rolling out this week in English in the U.S. Google hasn’t shared timing for other languages or regions.
