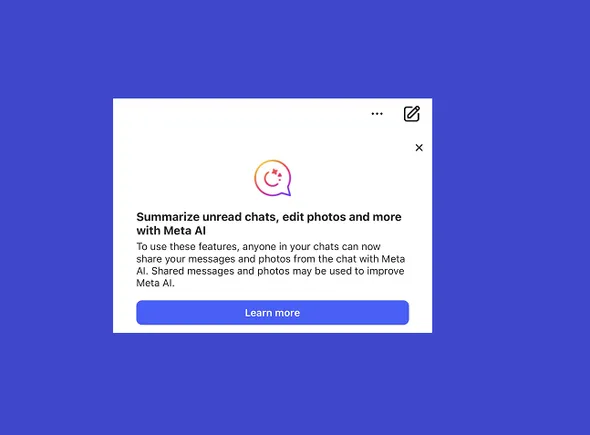

Meta Warns Users Its AI Systems Will Scan DMs When Prompted

A new warning prompt could spook a lot of Meta DM users.

Okay, don’t freak out, but Meta is about to start processing your private DMs through its AI tools.

Well, kind of.

Over the last couple of days, Meta users have reported seeing this new pop-up in the app, which alerts them to its latest chat AI features.

As you can read for yourself, Meta’s essentially trying to cover its butt on data privacy by letting you know that, yes, you can now summon Meta AI to answer your questions and queries within any of your chats across Facebook, Instagram, Messenger and WhatsApp. But the cost of doing so is that any information within that chat could then be fed into Meta’s AI black box, and potentially used for AI training.

“Because others in your chats can share your messages and photos with Meta AI to use AI features, be mindful before sharing sensitive information in chats that you do not want AIs to use, such as passwords, financial information or other sensitive information. We take steps to try to remove certain personal identifiers from your messages that others share with Meta AI prior to improving AI at Meta.”

I mean, the whole endeavor here seems somewhat flawed. The value of having Meta AI accessible within your chats (i.e. if you mention @MetaAI, you can ask a question in-stream) is unlikely to be significant enough to justify having to stay aware of everything that you share within that chat, given that you could just keep a separate Meta AI chat open and use that for the same purpose.

But Meta’s keen to show off its AI tools wherever it can, which means that it now has to warn you: if there is anything within your DMs that you don’t want fed into its AI system, and then potentially spat out in some other form in response to another user’s queries, then basically don’t post it in your chats.

Or don’t use Meta AI within your chats.

And before you read some post somewhere which says that you have to declare, in a Facebook or IG post, that you don’t give permission for such, I’ll save you the time and effort: That is 100% incorrect.

You’ve already granted Meta permission to use your info, within that long list of clauses that you skimmed over, before tapping “I agree” when you signed up to the app.

You can’t opt out of this. The only way to avoid Meta AI potentially accessing your information is to:

- Not ask @MetaAI questions in your chats, which seems like the easiest solution
- Delete your chat
- Delete or edit any messages within a chat that you want to keep out of its AI training set
- Stop using Meta’s apps entirely

Meta’s within its rights to use your information in this way, if it chooses, and by providing you with this pop-up, it’s letting you know exactly how it could do so, if somebody in your chat uses Meta AI.

Is that a massive overstep of user privacy? Well, no, but it also depends on how you use your DMs, and what you might want to keep private. I mean, the chances of an AI model re-creating your personal info are not very high, but Meta is warning you that this could happen if you bring Meta AI into your chats.

So again, if in doubt, don’t use Meta AI in your chats. You can always ask Meta AI your question in a separate chat window if you need.

You can read more about Meta AI and its terms of service here.

Fransebas

