Query Fan-Out via @sejournal, @Kevin_Indig

Kevin Indig explores how query fan-out drives AI Mode and why classic “one query, one answer” SEO no longer cuts it. The post Query Fan-Out appeared first on Search Engine Journal.

Boost your skills with Growth Memo’s weekly expert insights. Subscribe for free!

Today’s Memo is all about query fan-out – a foundational concept behind AI Mode that’s quietly rewriting the rules of SEO.

You’ve probably heard the term. Maybe you’ve seen it in Google’s AI Mode announcement, Aleyda Solis’ write-up, or Mike King’s deep dive.

But why is it really that revolutionary? And how does it impact the way we approach search strategy going forward? You might already be “optimizing” for it without even knowing it!

That’s what we’re digging into today.

Plus: I’ve built an intent classifier tool for premium subscribers to help you group prompts and questions by user intent in seconds – coming later this week (still need to iron out a few kinks).

In this issue, we’ll cover:

- What query fan-out is.
- How it powers AI Mode, Deep Search, and conversational search.
- Why optimizing for “one query, one answer” is no longer enough.
- Tactical ways to align your content ecosystem with fan-out behavior.

Let’s get into it.

Image Credit: Kevin Indig


What Is Query Fan-Out And Why Are You Hearing So Much About It Right Now?

Query fan-out is how Google’s AI Mode takes a single search and expands it into many related questions behind the scenes.

It can pull in a wider range of content that might answer more of your true intent, not just your exact words.

You’re hearing about it now because Google’s new AI Overviews and “AI Mode” rely on this process, which could change what content shows up in “search” results.

Query fan-out isn’t just another marketing buzzword. It’s how AI Mode works.

It’s crucial to start understanding this concept because it’s very likely that AI Mode will become the default search experience over the next few years. (I expect it will be once Google figures out how to monetize it appropriately.)

This is why I think AI Mode could become the search standard:

On the Lex Fridman podcast, Sundar Pichai said AI Mode will slowly creep more into the main search experience:

Lex Fridman: “Do you see a trajectory in the possible future where AI Mode completely replaces the 10 blue links plus AI Overview?”

Sundar Pichai: “Our current plan is AI Mode is going to be there as a separate tab for people who really want to experience that, but it’s not yet at the level there, our main search pages. But as features work, we will keep migrating it to the main page, and so you can view it as a continuum.”

He also said that pointing at the web is a main design principle:

Lex Fridman: “And the idea that AI mode will still take you to the web to human-created web?”

Sundar Pichai: “Yes, that’s going to be a core design principle for us.”

However, if AI Overviews are any indication, you shouldn’t expect much traffic to come through AI Mode results: click-through rate (CTR) losses from AI Overviews can top 50%.

And according to Semrush and Ahrefs, ~15% of queries show AI Overviews.

But the actual number is likely much higher, since we’re not accounting for the ultra-long-tail, conversational-style prompts that searchers are using more and more.

Even though AI Mode covers only a bit over 1% of queries right now – as mentioned in The New Normal – it’s likely going to be the natural extension of every AI Overview.

Understanding Query Fan-Out To Better Optimize Your Content Just Makes Sense

Important note here: I don’t want to pretend that I know how to “optimize” for query fan-out.

And query fan-out is a concept, not a practice or tactic for optimization.

With that in mind, understanding how query fan-out works is important because people are using longer prompts to search conversationally.

In conversational search, a single prompt therefore covers many user intents.

Let’s take a look at this example from Deep SEO:

Deep Search performs tens to hundreds of searches to compile a report. I’ve tried prompts for purchase decisions. When I asked for “the best hybrid family car with 7 seats in the price range of $50,000 to $80,000”, Deep Research browsed through 41 search results and reasoned its way through the content.

[…]

The report took 10 minutes to put together but probably saved a human hours of research and at least 41 clicks. Clicks that could’ve gone to Google ads.

In my search for a hybrid family car, the Deep Search function understood multiple search journeys, multiple intents, and synthesized what would have been multiple pages of classic SEO results into one piece of content.

And check out this example from Google’s own marketing material:

Image Credit: Kevin Indig


This Deep Search kicked off four searches, but I’ve seen examples of 30 or more.

This is exactly why understanding query fan-out is important.

AI-based conversational search is no longer matching a single query to a single result.

It’s fanning out into dozens of related searches, intents, and content types to synthesize an answer that bypasses traditional SEO pathways entirely.

The Mechanics Behind Query Fan-Out

Here’s my understanding of how query fan-out works based on the wonderful research by Mike King, as well as Google’s announcement and documentation:

1. In classic Search, Google returns one ranked list for a query. In AI Mode, Gemini explodes your prompt into a swarm of sub-queries – each aimed at a different facet of what you might really care about. Example: “Best sneakers for walking” turns into best sneakers for men, walking shoes for trails, shoes for humid weather, sock-liner durability in walking shoes, and so on.
2. Those sub-queries fire simultaneously into the live web index, the Knowledge Graph, the Shopping Graph, Maps, YouTube, etc. The system is basically running a distributed computing job on your behalf.
3. Instead of treating a web page as one big answer, AI Mode lifts the most relevant passages, tables, or images from each source. Think “needle-picking” rather than “stack-ranking.” So, rather than a search engine saying “this whole page is the best match,” it’s more like “this sentence from site A, that chart from site B, and this paragraph from site C” are the most relevant parts.
4. Google keeps a running “session memory” – a user embedding distilled from your past searches, location, and preferences. That vector nudges which sub-queries get generated and how answers are framed.
5. If the first batch doesn’t fill every gap, the model loops and issues more granular sub-queries, pulls new passages, and stitches them into the draft until coverage looks complete. All this happens in a few seconds.
6. Finally, Gemini fuses everything into one answer and matches it to citations. Deep Search (“AI Mode on steroids”) can run hundreds of these sub-queries and spit out a fully cited report in minutes.

Keep in mind, entities are the backbone of how Google understands and expands meaning. And they’re central to how query fan-out works.
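The flow described above can be illustrated with a minimal Python sketch. This is not Google’s implementation: the toy in-memory index, the facet strings, the `REFINEMENTS` table, and every function name are hypothetical, and simple word-overlap scoring stands in for Gemini’s sub-query generation and passage selection. It only shows the shape of the process: expand one prompt into facet sub-queries (with an extra facet standing in for session memory), fire them concurrently, keep the single best passage per sub-query rather than a whole page, loop with a more granular sub-query for any facet that is still unanswered, and fuse the passages into one cited answer.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str  # hypothetical source label, not a real site
    text: str


# Toy stand-in for the live web index, Knowledge Graph, Shopping Graph, etc.
INDEX = [
    Passage("site-a", "Walking shoes for trails need a grippy outsole."),
    Passage("site-b", "Mesh walking shoes stay breathable in humid weather."),
    Passage("site-c", "Walking shoes for men often run wider in the toe box."),
    Passage("site-d", "Sock-liner durability depends on foam density."),
]

STOPWORDS = {"a", "for", "in", "on", "the"}

# Hypothetical "more granular" follow-up sub-queries for facets left unanswered.
REFINEMENTS = {"durability": "sock-liner durability"}


def content_tokens(text):
    """Lowercase, strip punctuation, drop stopwords, crudely fold a plural 's'."""
    tokens = set()
    for word in text.lower().split():
        word = word.strip(".,?!")
        if word and word not in STOPWORDS:
            tokens.add(word[:-1] if word.endswith("s") else word)
    return tokens


def generate_subqueries(prompt, facets, session_facets=()):
    """Expand one prompt into (facet, sub-query) pairs.

    session_facets is a stand-in for the personalization signal
    ("session memory") that nudges which sub-queries get generated.
    """
    return [(f, f"{prompt} {f}") for f in [*facets, *session_facets]]


def retrieve_passage(subquery):
    """Pick the single best-overlapping passage instead of ranking whole pages."""
    query = content_tokens(subquery)
    return max(INDEX, key=lambda p: len(query & content_tokens(p.text)))


def covers(facet, passage):
    """A facet counts as answered only if the passage mentions one of its terms."""
    return bool(content_tokens(facet) & content_tokens(passage.text))


def fan_out(prompt, facets, session_facets=(), max_rounds=2):
    pending = generate_subqueries(prompt, facets, session_facets)
    answered = {}
    for _ in range(max_rounds):
        # Fire all pending sub-queries concurrently; map() keeps input order.
        with ThreadPoolExecutor() as pool:
            hits = list(pool.map(retrieve_passage, [sq for _, sq in pending]))
        gaps = []
        for (facet, _), passage in zip(pending, hits):
            if covers(facet, passage):
                answered[facet] = passage
            elif facet in REFINEMENTS:
                gaps.append((facet, REFINEMENTS[facet]))
        if not gaps:
            break
        pending = gaps  # loop again with more granular sub-queries
    return answered


def fuse(answered):
    """Stitch the selected passages into one answer with inline citations."""
    return " ".join(f"{p.text} [{p.source}]" for p in answered.values())
```

Calling `fan_out("best walking shoes", ["for men", "for trails", "for humid weather"], session_facets=["durability"])` answers the first three facets in the first round; the durability facet is only filled in the second round, after the refinement sub-query runs. Facets with no refinement are simply dropped, which is one way a source can end up uncited.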

Take a query like “how to reduce anxiety naturally.” Google doesn’t just match this phrase to pages with that exact wording.

Instead, it identifies entities like “anxiety,” “natural remedies,” “sleep,” “exercise,” and “diet.”

From there, query fan-out kicks in and might generate related sub-queries, refined by the user’s prior searches:

- “Does magnesium help with anxiety?”
- “Breathing techniques for stress”
- “Best herbal teas for calming nerves”
- “How sleep affects anxiety levels”

These aren’t just keyword rewrites. They’re semantically and contextually related ideas built from known entities and their relationships.
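To show why these sub-queries are more than keyword rewrites, here is a small Python sketch that walks a hand-built entity graph outward from the entities it finds in the prompt and turns each relationship into a question. The graph, the relation names, and the question templates are hypothetical illustrations; Google’s Knowledge Graph and its entity understanding are far richer than a dictionary lookup.

```python
# Hypothetical, hand-built entity graph: entity -> {relation: [related entities]}.
ENTITY_GRAPH = {
    "anxiety": {
        "helped_by": ["magnesium", "herbal tea"],
        "affected_by": ["sleep", "exercise", "diet"],
        "related_to": ["stress"],
    },
    "stress": {"helped_by": ["breathing techniques"]},
}

# One question template per relation type (illustrative only).
TEMPLATES = {
    "helped_by": "Can {obj} help with {subj}?",
    "affected_by": "How does {obj} affect {subj}?",
    "related_to": "How is {subj} related to {obj}?",
}


def extract_entities(query, graph):
    """Toy entity recognition: known entity names that appear in the query."""
    text = query.lower()
    return [entity for entity in graph if entity in text]


def expand(query, graph=ENTITY_GRAPH, hops=2):
    """Breadth-first walk of the entity graph, turning each edge into a sub-query."""
    frontier = extract_entities(query, graph)
    seen = set(frontier)
    subqueries = []
    for _ in range(hops):
        next_frontier = []
        for subj in frontier:
            for relation, objects in graph.get(subj, {}).items():
                for obj in objects:
                    subqueries.append(TEMPLATES[relation].format(subj=subj, obj=obj))
                    if obj not in seen:
                        seen.add(obj)
                        next_frontier.append(obj)
        frontier = next_frontier
    return subqueries
```

With `hops=2`, the prompt “how to reduce anxiety naturally” yields questions about magnesium, herbal tea, sleep, exercise, diet, and stress, plus a second-hop question about breathing techniques for stress that does not appear with `hops=1`.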

So, if your content doesn’t go beyond the primary query to cover supporting entity relationships, you risk being invisible in the new AI-driven SERP.

Entity coverage is what enables your content to show up across that full semantic spread.
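A rough way to audit entity coverage is to check which target entities a page actually mentions. The sketch below assumes you already have a list of entities (for example, ones gathered during your topic research), and the function name is hypothetical. It uses case-insensitive whole-word matching, which is only a crude stand-in for real entity recognition: it misses synonyms and variants entirely.

```python
import re


def entity_coverage(page_text, entities):
    """Return (covered, missing, ratio) for a list of target entity names.

    Case-insensitive whole-word matching is a crude stand-in for real entity
    recognition: it ignores synonyms, plurals, and other variants.
    """
    text = page_text.lower()
    covered = [e for e in entities
               if re.search(rf"\b{re.escape(e.lower())}\b", text)]
    missing = [e for e in entities if e not in covered]
    ratio = len(covered) / len(entities) if entities else 0.0
    return covered, missing, ratio
```

For “Regular exercise and better sleep can ease anxiety.” checked against anxiety, sleep, exercise, diet, and magnesium, it reports three of five entities covered (a ratio of 0.6), with diet and magnesium missing.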

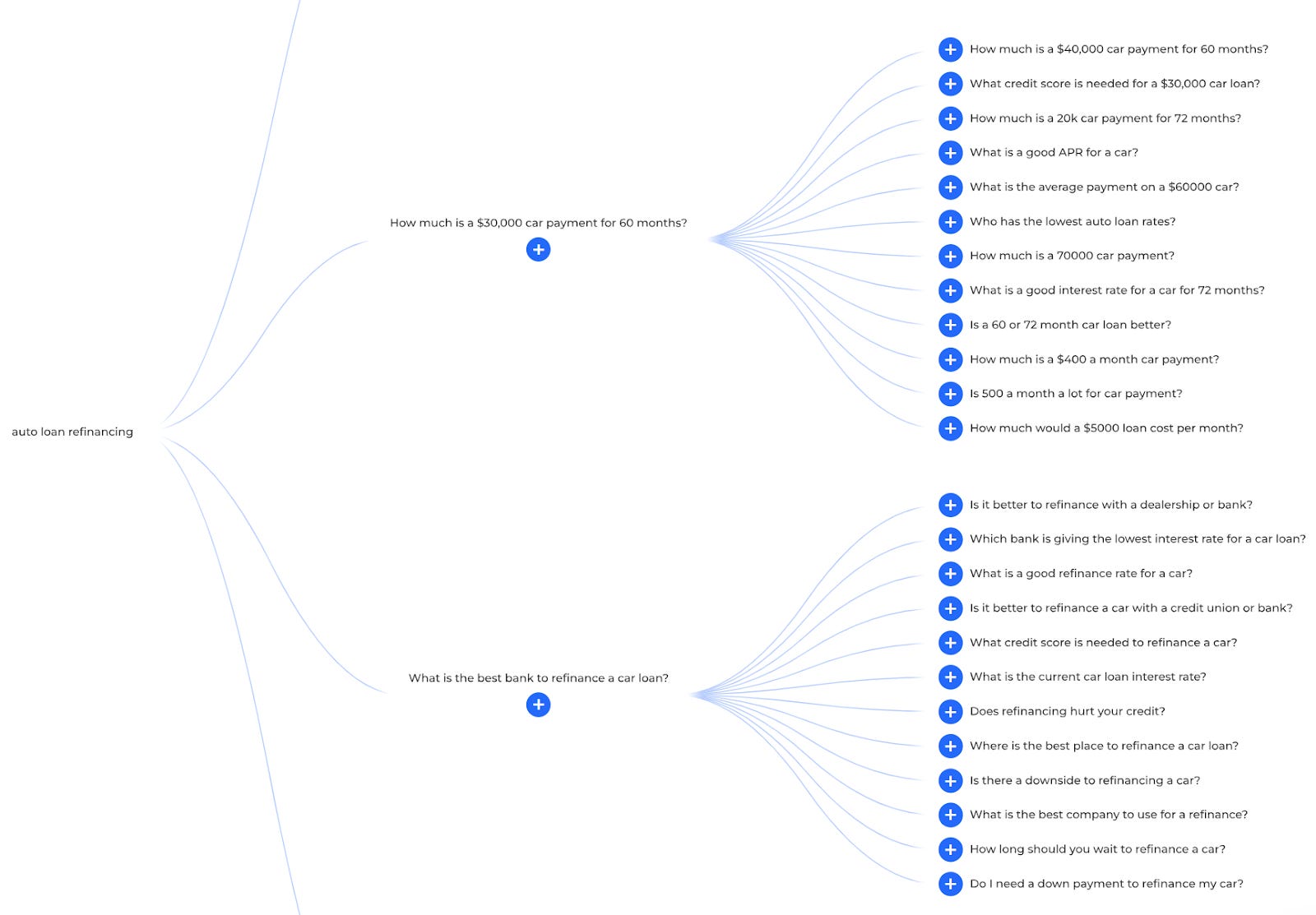

A good way to visualize this is the relationship between questions, topics, and entity expansion (from alsoasked.com):

Image Credit: Kevin Indig


If this all reminds you strongly of the concept of user intent, your instincts are well-tuned.

Even though query fan-out sounds cool and looks innovative, it differs little from how we should already be targeting topics instead of keywords via entity-rich content. (And we all should’ve been doing this for a while now.)

Interjection from Amanda here: I’d argue that this kind of process (or a similar one) has been going on behind the scenes in classic SEO results for a while … although, unfortunately, I don’t have concrete proof. Just strong pattern recognition from spending way too much time in the SERPs testing things out. 😆

Back in 2018–2019, I noticed this pattern happening often with in-depth, entity-rich content pieces ranking – and performing well – for multiple related intents in search. The more entity-rich a content piece was, and the more the content tackled the “next natural need” of the searcher, the more engagement and dwell time increased, while the content also concluded the search journey…

And the more the content did those things, the more the content was visible to our target audience in classic rankings … and the longer it held that visibility or ranking despite algorithm changes or competitor content updates.

Implementable SEO Moves Related To Query Fan-Out Mechanics

When you keep query fan-out in mind, there are a few practical steps you can take to shape your content and optimization work more effectively.

But before you give it a scan, I need to reiterate what was mentioned earlier: I’m not going to claim I have a clear-cut way to “optimize” for Google’s AI Mode query fan-out process – it’s just too new.

Instead, this list will help you optimize your content ecosystem to fully address the multifaceted needs behind your target user’s search goal.

Because optimizing for conversational search starts with one simple shift: addressing searcher needs from multiple angles and making sure they can find those multiple angles across your site … not just one query at a time.

1. Passage-first authoring.

Write in 40–60-word blocks, each answering one micro-question. Lead with the answer, then the detail; this mirrors how AI selects snippets.

2. Semantically rich headings.

Avoid generic headings and subheadings (“Overview”). Embed entities and modifiers the AI may spin into sub-queries (e.g., “Battery life of EV SUVs in winter”).

3. Outbound credibility hooks.

Cite peer-reviewed, governmental, or high-authority sources; Google’s LLM favors passages that have citations and sources for grounding claims.

4. Clustered architecture.

Build hub pages that summarize and deep-link to spokes. Fan-out often surfaces mixed-depth URLs; tight clusters raise the odds that a sibling page is chosen.

5. Contextual jump links (“fraggles” or “anchor links”).

For long-form content, use internal jump links within body copy, not just in the TOC. These help LLMs and search bots zero in on the most relevant entities, sections, and micro-answers across the page. They also improve UX. (Credit to Cindy Krum’s “fraggles” concept.)

6. Freshness pings.

Update time-sensitive stats often. Even a minor line edit plus a new date encourages recrawl and qualifies the page for “live web” sub-queries.

How To Optimize For Intent Coverage – A Key Component Of Query Fan-Out

Google’s AI Mode and the query fan-out process mirror how humans think – breaking a question into parts and piecing together the best information to solve a need.

People don’t search in a silo – when they search, they’re searching from a perspective, a history, and with emotions and multiple questions/concerns attached.

But as an industry, we have long focused on single queries, intents, or topic clusters to guide our optimization. Sure, this is useful, but it’s a narrow lens.

And it overlooks the bigger picture: Optimizing your content ecosystem to fully address the broader, multi-faceted needs behind a person’s goal.

We know Google’s AI Mode draws from:

- Related queries.
- Related user intents.
- Related and connected entities.
- Reformatting/rephrasing of the prompt.
- Comparison.
- Personalization: search history, emails, etc.

So, here’s my step-by-step (unproven) concept:

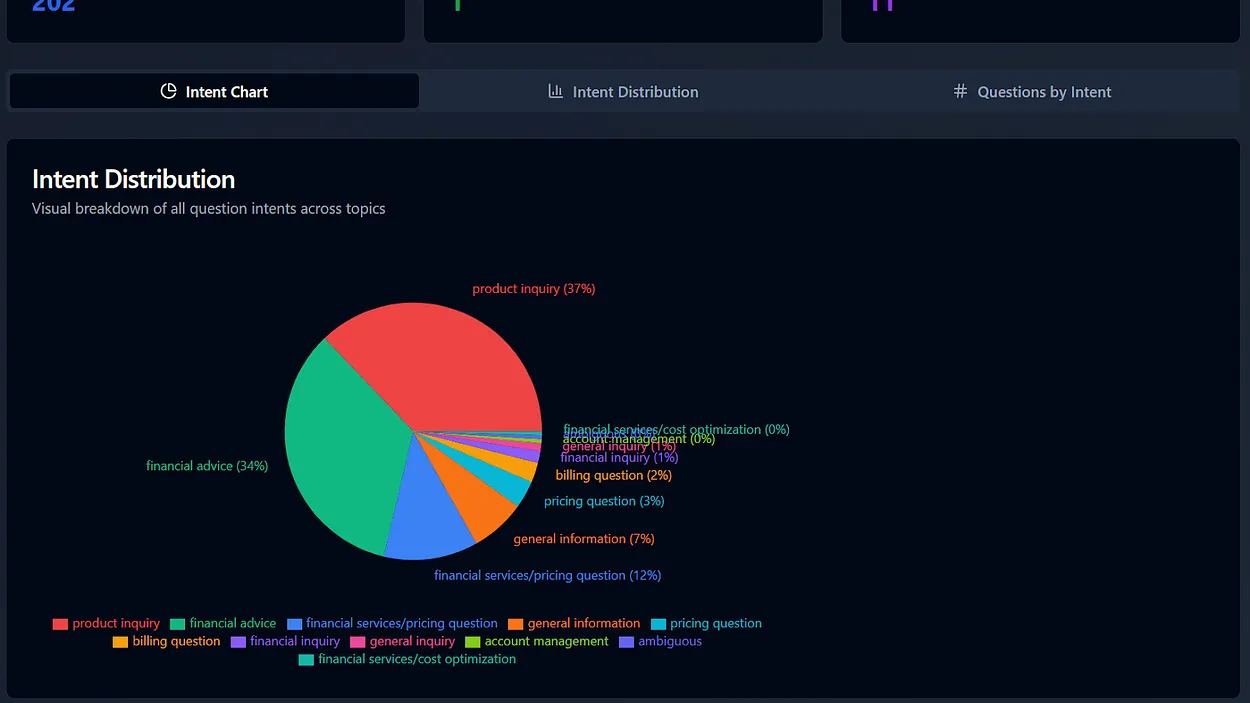

Prompts are questions. But covering questions alone is not enough; we need to create content for their underlying user intent. If we can classify a large number of questions around a topic, we can increase our chances of being visible when AI Mode fans out.

Here’s a step-by-step guide:

1. Collect questions for a topic from:
   - Customer interviews (the best source, in my experience).
   - Semrush’s Keyword Magic Tool.
   - Ahrefs’ Keyword Ideas.
   - Reddit (e.g., via GummySearch).
   - YouTube (vidIQ).
   - Mike King’s excellent Qforia tool.
2. Group your collection of questions by user intent.
3. Match each intent to a piece of content or specific passage on your site.
4. Use search tools and test actual conversations with LLMs to see who ranks at the top for the intent.
5. Compare your content/passage against the top-referred content pieces.
6. Ensure your content is entity-rich and includes those sweet, sweet information gainz.

Not only do paid subscribers get more content, more data, and more insights, but they also get the intent classifier tool I built to help save you some time on the work listed above (coming to premium subscribers later this week).
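The grouping and matching steps above can be prototyped in a few lines of Python. This is not the intent classifier tool mentioned in this Memo; it is a hypothetical rule-based stand-in in which each intent is triggered by a short list of cue phrases, and the intent labels, cue lists, questions, and URLs are all illustrative assumptions. It groups questions by intent and then lists the intents that have no page or passage mapped to them yet.

```python
import re
from collections import defaultdict

# Hypothetical cue phrases per intent, checked in order; first match wins.
INTENT_RULES = [
    ("comparison", ["vs", "versus", "compare", "better than", "difference"]),
    ("transactional", ["buy", "price", "cost", "deal", "cheap"]),
    ("troubleshooting", ["fix", "not working", "error", "problem"]),
    ("how-to", ["how to", "how do", "steps", "guide"]),
    ("definition", ["what is", "what are", "meaning"]),
]


def classify(question):
    """Return the first intent whose cue appears as a whole word or phrase."""
    text = question.lower()
    for intent, cues in INTENT_RULES:
        if any(re.search(rf"\b{re.escape(cue)}\b", text) for cue in cues):
            return intent
    return "other"


def group_by_intent(questions):
    groups = defaultdict(list)
    for question in questions:
        groups[classify(question)].append(question)
    return dict(groups)


def coverage_gaps(groups, content_map):
    """Intents that have questions but no page or passage mapped to them."""
    return sorted(intent for intent in groups if intent not in content_map)


# Example usage with made-up questions and hypothetical URLs.
questions = [
    "What is query fan-out?",
    "How to optimize content for AI Mode",
    "AI Mode vs AI Overviews",
    "Semrush price per month",
    "Why is my page not showing in AI Mode",
]
content_map = {"definition": "/blog/query-fan-out", "how-to": "/guides/ai-mode"}
print(coverage_gaps(group_by_intent(questions), content_map))
# → ['comparison', 'other', 'transactional']
```

In practice you would swap `classify` for a stronger model (or an LLM prompt) and keep the rest: the useful output is the gap list, which shows which intents behind your collected questions have no content answering them yet.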

If you were doing SEO before the AI-search era, you’ve likely already been doing some version of this work.

The key thing to remember is to group questions and queries by intent – and optimize for intents across your core topics.

Think through what would’ve been a “search journey” or “content journey” for your user in classic search, and recognize that’s now happening all at once in one chat session.

The biggest mindset shift you’ll likely need to make is thinking about queries as prompts vs. searches.

And those prompts? Users phrase them in a variety of ways and semantic structures. That’s why an understanding of entities plays a key part.

But before you jump in, I need to emphasize a core factor in creating content with query fan-out in mind: Make sure you do the work to take the collected questions you plan to target and group them by intent.

This is a crucial first step.

To help you do that, I’ve created an intent classifier tool that premium subscribers will get in their inbox later this week. It’s simple to use: drop in your collected list of questions, and it groups them by intent in a matter of minutes.

Featured Image: Paulo Bobita/Search Engine Journal
