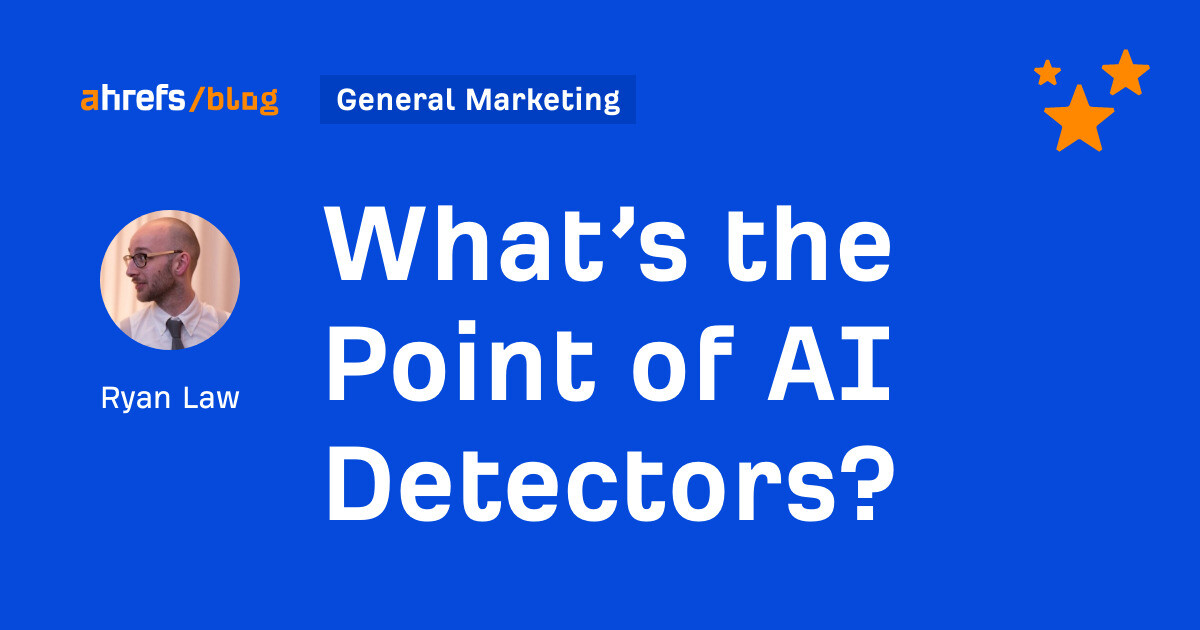

What’s the Point of AI Detectors?



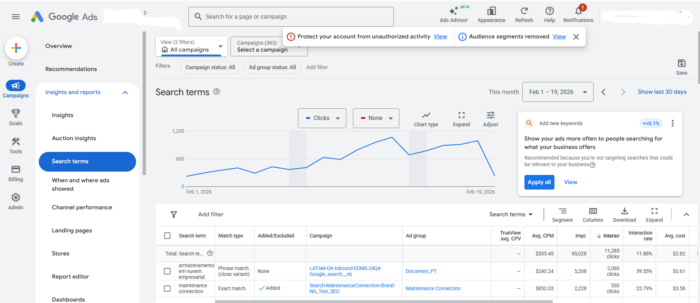

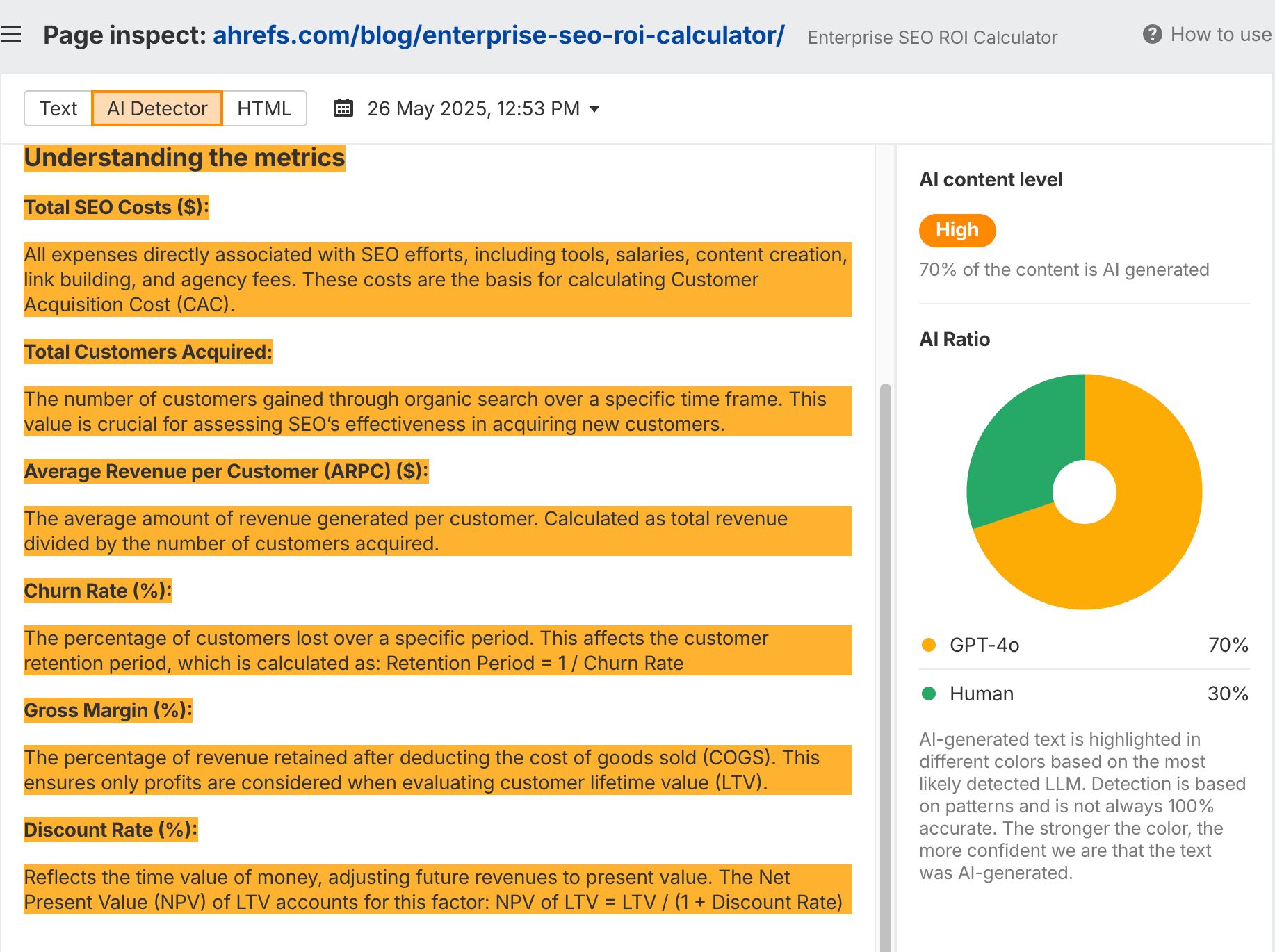

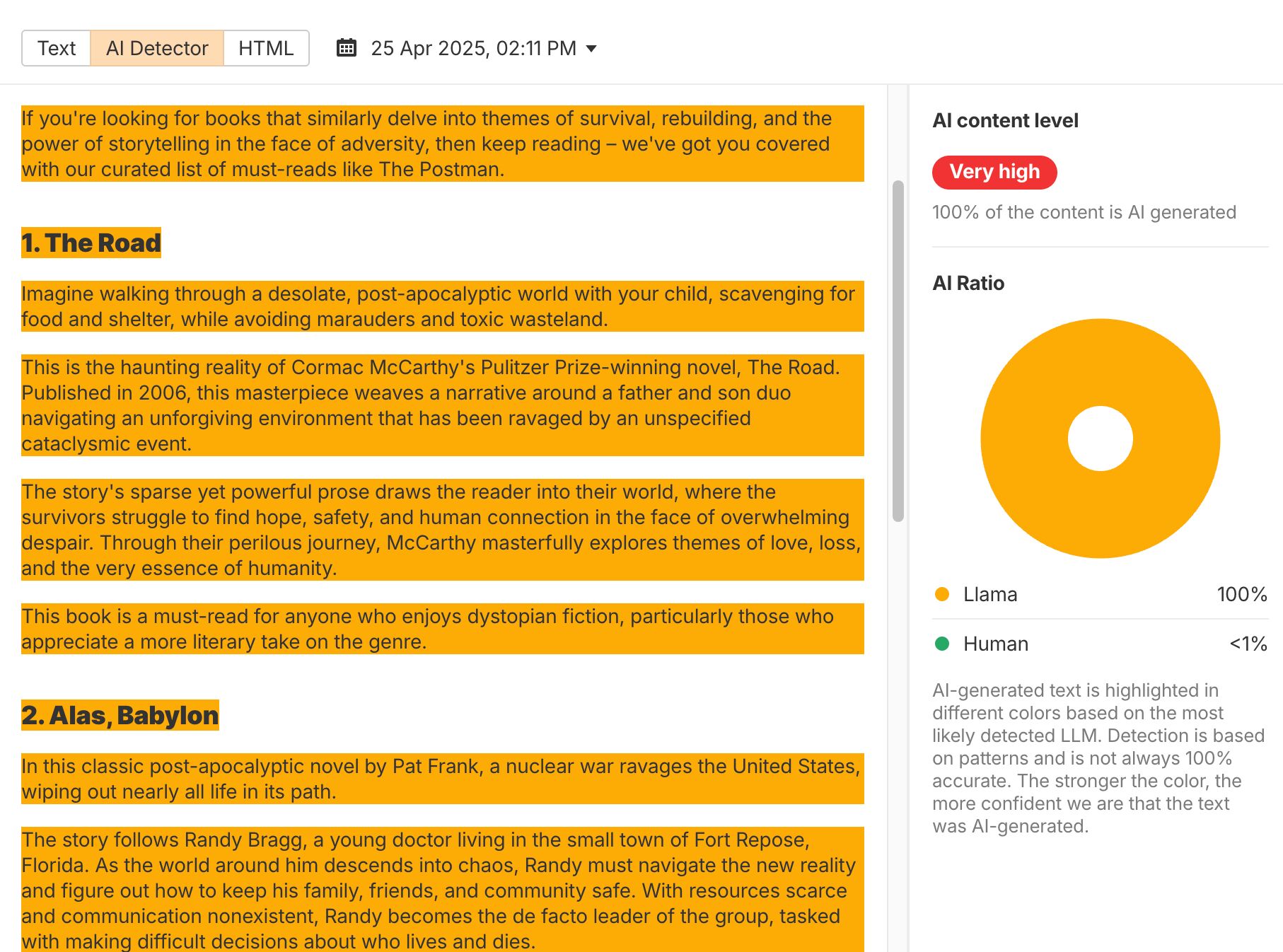

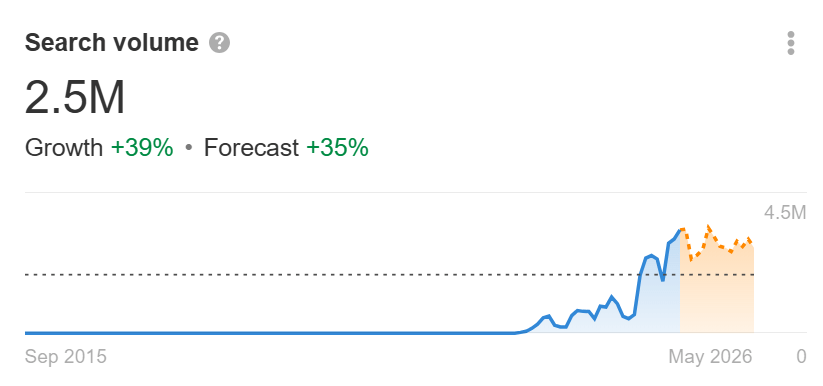

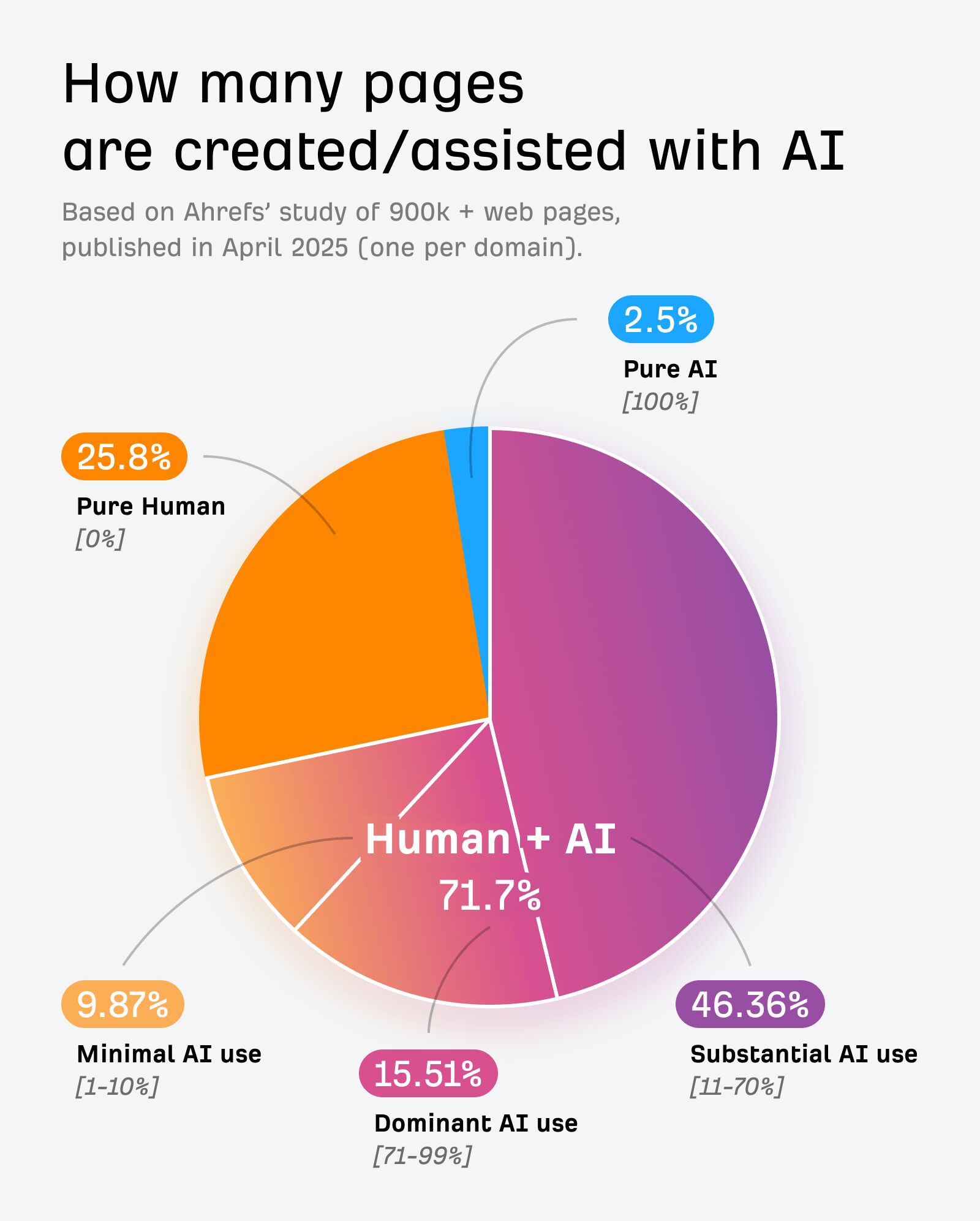

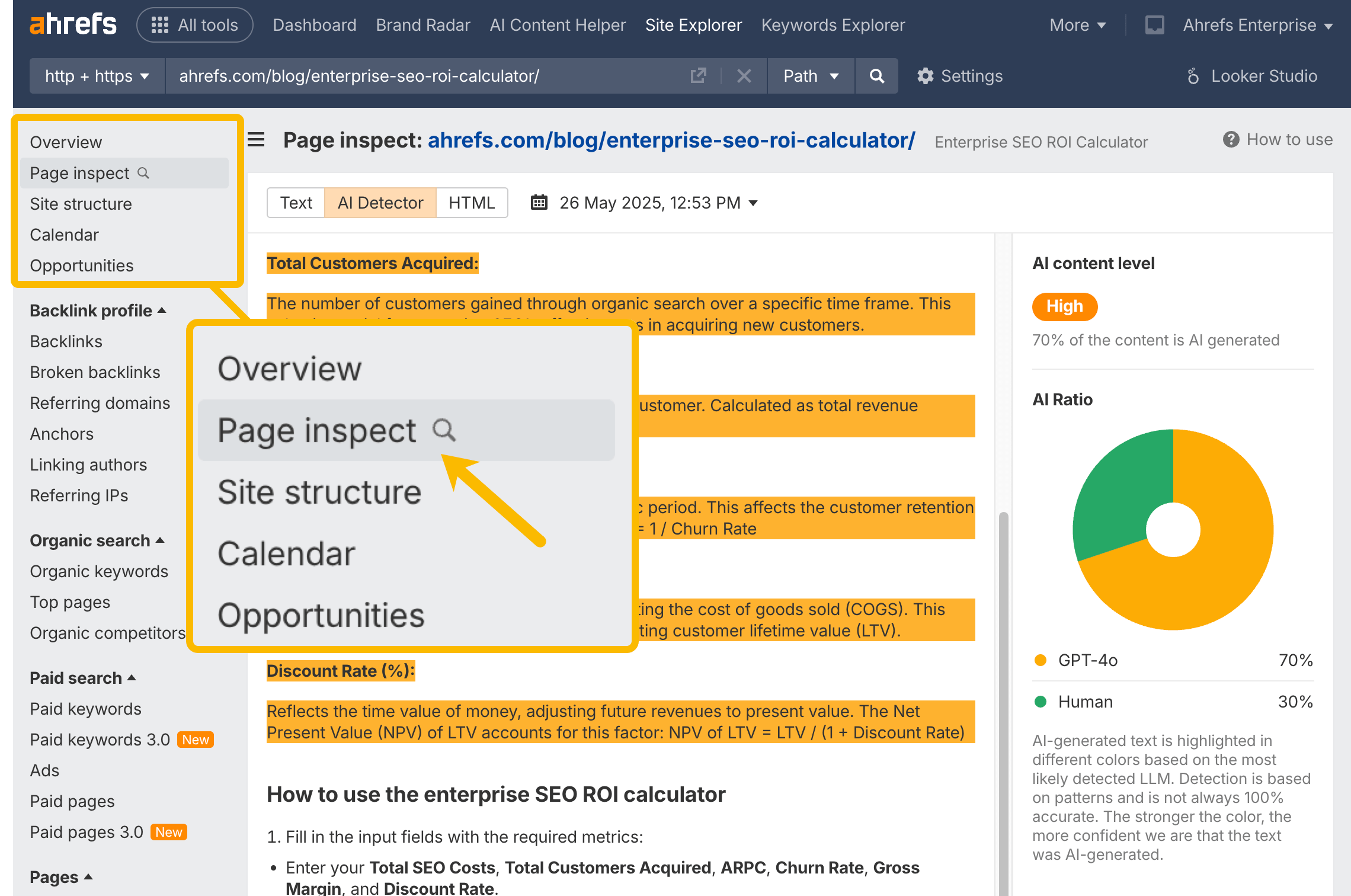

We’ve just released our new AI content detector. It’s live in Page Inspect in Site Explorer. You can test it for yourself.

I was previously a skeptic of AI content detectors, and many people still are. Andrew Holland recently shared a LinkedIn comment that does a good job summarizing common concerns:

In a nutshell:

These are valid concerns, so I wanted to talk through them, one by one, and explain why AI detectors are a useful tool in a world where 74% of new content is created with the aid of generative AI.

I’ve tested many AI detectors and seen them incorrectly flag my skilled, human work as “AI-generated”. And you’ve probably seen occasions where the Declaration of Independence, or a famous speech, or passages from The Bible were confidently (and very incorrectly) labelled as AI-generated.

But in the academic literature, AI detectors routinely hit 80% (or greater) successful detection rates. OpenAI reports detecting text generated by its models with 99.9% accuracy.

Here’s an example of our AI detector nailing content detection, correctly identifying the sections of Patrick’s article generated with GPT-4o:

And another example from one of my hobby websites, where the model correctly recognises that I used one of Meta’s Llama models to write the article:

These are not random guesses. This is accurate detection.

So what explains the apparent gap between the theory and the practice? How can AI detectors be so wrong, and so right, at the same time?

Like LLMs, AI detectors are statistical models. They deal in probabilities, not certainty. They can be incredibly accurate, but they always carry the risk of false positives.

If you understand these limitations, false positives don’t need to be problematic. Don’t weigh any single result too heavily. Run many tests and see how the results trend. Combine the results with other forms of evidence.

But many AI detectors are marketed as infallible truth-telling machines. That creates false expectations and encourages them to be used in bad ways that don’t reflect how they actually work.

Which brings us to the second objection…

We’ve all heard of students being flunked because of AI detectors, or freelance writers losing contracts because their work was incorrectly deemed to be AI-generated. These are real problems caused by the misuse of AI detectors.

It’s worth stating concretely: no AI detector should be used to make life-or-death decisions about someone’s career or academic standing. This is a bad use of the technology and misunderstands how these tools function.

But I don’t think the solution to these problems is to abandon AI content detection wholesale. There is a real and pressing demand for these tools. As I write, “ai detector” is searched 2.5 million times per month, and demand has been growing rapidly. As long as we can generate text with AI, there will be utility in identifying it.

Education is a better answer. People building and promoting AI content detectors need to help users understand the constraints of their tools. We need to encourage smarter use, following some basic guidelines:

There are many bad, silly ways to use AI detectors, but there are also many good use cases.

Which brings me to the final objection…

Generative AI is embedded natively in Google Docs, Gmail, LinkedIn, Google Search. AI text is being woven inextricably through the fabric of the internet. It’s already a fact of life, so why bother even trying to detect it?

There’s real truth to this. Our AI detector estimates that 74% of new pages published in April 2025 contained some amount of AI-generated text. This number will only grow over time.

I’ve used the analogy of post-nuclear steel before. Since the detonation of the first nuclear bombs, every piece of steel manufactured has been “tainted” by nuclear fallout. It’s not hard to imagine a world where every piece of content is similarly “tainted” by AI output.

But this, to me, is the biggest justification for using an AI detector. The entire internet is being swamped with LLM outputs, so we need to understand as much as possible about the tools and techniques that shape the content we interact with every day.

I want to know…

The goal of AI content detection isn’t making black-and-white moral judgements about AI use: this is AI content, so it’s bad; this is human content, so it’s good. AI can be used to make excellent content (if you’ve read the Ahrefs blog recently, you’ve been reading content that contains AI-generated text).

The goal is to understand what works well, and what doesn’t; to collect data that offers some advantage and helps improve the growth of your website and your business.

Final thoughts

Most of the new content on the web is created with the aid of generative AI. Personally, I want as much information about that AI as I can get my hands on.

Our new AI detector is live now in Site Explorer. You can test it for yourself, run experiments, and begin to understand the impact generative AI is having on online visibility.
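If you do run experiments, it helps to keep the earlier point about probabilities in mind. Here is a minimal Python sketch of why a single flag shouldn’t be weighed too heavily. It uses Bayes’ rule to estimate how often a flag is actually correct. The 80% detection rate and the 74% share of AI-assisted pages come from the figures above; the 5% false positive rate and the 10% classroom scenario are assumptions made up purely for illustration, not measurements of any real detector, and the function name is hypothetical.

```python
def probability_flag_is_correct(prevalence, true_positive_rate, false_positive_rate):
    """Bayes' rule: P(text really is AI-generated | the detector flagged it).

    prevalence          -- share of texts in the pool that are AI-generated
    true_positive_rate  -- share of AI texts the detector flags
    false_positive_rate -- share of human texts the detector wrongly flags
    """
    flagged_ai = prevalence * true_positive_rate
    flagged_human = (1 - prevalence) * false_positive_rate
    return flagged_ai / (flagged_ai + flagged_human)


# Assumed detector: flags 80% of AI text, wrongly flags 5% of human text.
TPR = 0.80
FPR = 0.05  # hypothetical value, chosen only for this example

# Scenario 1: new web pages, where most content involves AI (74%).
web = probability_flag_is_correct(0.74, TPR, FPR)

# Scenario 2: a hypothetical classroom where only 10% of essays involve AI.
classroom = probability_flag_is_correct(0.10, TPR, FPR)

print(f"Web pages: a flag is correct about {web:.0%} of the time")
print(f"Classroom: a flag is correct about {classroom:.0%} of the time")
```

Under these assumptions, the same detector is right about a flag roughly 98% of the time on web pages, but only about 64% of the time in the classroom. The detector hasn’t changed; the pool of content has. That is one way to see why a single result can be a reasonable signal in aggregate analysis and still be weak evidence against one individual.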
