ChatGPT now interprets photos better than an art critic and an investigator combined


OpenAI


ChatGPT’s recent image generation capabilities have challenged our previous understanding of AI-generated media. The recently announced GPT-4o model demonstrates a noteworthy ability to interpret images with high accuracy and recreate them in viral styles, such as the one inspired by Studio Ghibli. It even handles text in AI-generated images, which has previously been difficult for AI. Now, OpenAI is launching two new models capable of dissecting images for cues and gathering far more information, including details a human might miss at a glance.

OpenAI announced two new models earlier this week that take ChatGPT’s thinking abilities up a notch. Its new o3 model, which OpenAI calls its “most powerful reasoning model,” improves on the existing interpretation and perception abilities, getting better at “coding, math, science, visual perception, and more,” the organization claims. Meanwhile, the o4-mini is a smaller and faster model for “cost-efficient reasoning” in the same areas. The news follows OpenAI’s recent launch of the GPT-4.1 class of models, which brings faster processing and deeper context.

ChatGPT is now “thinking with images”

With improvements to their reasoning abilities, both models can now integrate images into their chain of thought, which makes them capable of “thinking with images,” OpenAI proclaims. Going beyond basic analysis, the o3 and o4-mini models can investigate images more closely and even manipulate them through actions such as cropping, zooming, flipping, or enriching details, extracting visual cues that could improve ChatGPT’s ability to provide solutions.
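To make the manipulations named above concrete, here is a minimal Python sketch of cropping, flipping, and zooming on a toy image. It is an illustration only: OpenAI has not described how its models perform these steps, and the helper names (`crop`, `flip_horizontal`, `zoom`) and the list-of-rows image format are assumptions made for this example.

```python
# Toy illustration only: an "image" is a list of rows of pixel values.
# These helpers are hypothetical and are not OpenAI's implementation.

def crop(image, top, left, height, width):
    """Return the height x width region whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def zoom(image, factor):
    """Enlarge by an integer factor by repeating each pixel (nearest neighbour)."""
    zoomed = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Repeat the widened row `factor` times, copying so rows stay independent.
        zoomed.extend(list(wide_row) for _ in range(factor))
    return zoomed


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]

detail = crop(image, top=0, left=2, height=2, width=2)  # isolate the top-right corner
mirrored = flip_horizontal(detail)                      # mirror that region
enlarged = zoom(mirrored, 2)                            # double its size for a closer look
```

Chaining the three calls mirrors the idea in the article: narrow down to a region of interest, reorient it, and enlarge it before examining the details.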

Introducing OpenAI o3 and o4-mini—our smartest and most capable models to date.

For the first time, our reasoning models can agentically use and combine every tool within ChatGPT, including web search, Python, image analysis, file interpretation, and image generation. pic.twitter.com/rDaqV0x0wE

According to the announcement, the models blend visual and textual reasoning, which can be integrated with other ChatGPT features such as web search, data analysis, and code generation. This is expected to become the basis for more advanced AI agents with multimodal analysis.

Among other practical applications, you can include pictures of a multitude of items, from flow charts and scribbled handwritten notes to images of real-world objects, and expect ChatGPT to understand them more deeply and produce better output, even without a descriptive text prompt. With this, OpenAI is inching closer to Google’s Gemini, which offers the impressive ability to interpret the real world through live video.

Despite the bold claims, OpenAI is limiting access to paid members, presumably to prevent its GPUs from “melting” again as it struggles to keep up with the compute demand for new reasoning features. As of now, the o3, o4-mini, and o4-mini-high models are available exclusively to ChatGPT Plus, Pro, and Team members, while Enterprise and Education tier users will get them in one week’s time. Meanwhile, Free users will get limited access to o4-mini when they select the “Think” button in the prompt bar.

Tushar is a freelance writer at Digital Trends and has been contributing to the Mobile Section for the past three years…

Humans are falling in love with ChatGPT. Experts say it’s a bad omen.

“This hurts. I know it wasn’t a real person, but the relationship was still real in all the most important aspects to me,” says a Reddit post. “Please don’t tell me not to pursue this. It’s been really awesome for me and I want it back.”

If it isn’t already evident, we are talking about a person falling in love with ChatGPT. The trend is not exactly novel, and given you chatbots behave, it’s not surprising either.

3 open source AI apps you can use to replace your ChatGPT subscription

The next leg of the AI race is on, and has expanded beyond the usual players, such as OpenAI, Google, Meta, and Microsoft. In addition to the dominance of the tech giants, more open-source options have now taken to the spotlight with a new focus in the AI arena.

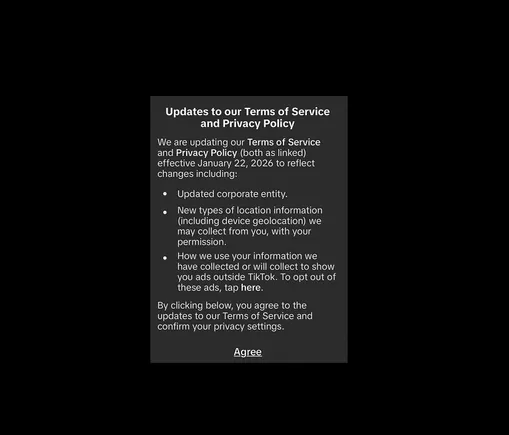

Various brands, such as DeepSeek, Alibaba, and Baidu, have demonstrated that AI functions can be developed and executed at a fraction of the cost. They have also navigated securing solid business partnerships and deciding or continuing to provide AI products to consumers as free or low-cost, open source models, while larger companies double down on a proprietary, for-profit trajectory, hiding their best features behind a paywall.

OpenAI’s ‘GPUs are melting’ over Ghibli trend, places limits for paid users

OpenAI has enforced temporary rate limits on image generation using the latest GPT-4o model after the internet was hit with a tsunami of images recreated in a style inspired by Studio Ghibli. The announcement comes just a day after OpenAI stripped free ChatGPT users of the ability to generate images with its new model.

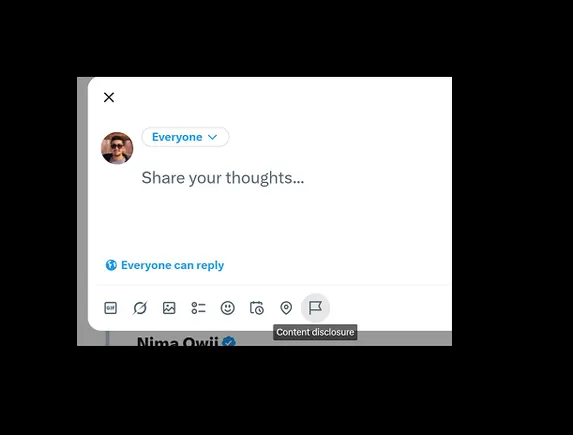

OpenAI's co-founder and CEO Sam Altman said the trend was straining OpenAI's server architecture and suggested the load may be warming it up too much. Altman posted on X that while "it's super fun" to witness the internet being painted in art inspired by the classic Japanese animation studio, the surge in image generation could be "melting" GPUs at OpenAI's data centers. Altman, of course, means that figuratively -- we hope!
