Ethics in AI Research: Discussing Responsible Conduct in AI Research in Financial Services

The job of a financial advisor is, at its core, to make their clients money, and clients expect them to use every tool at their disposal to do so. One of the newest paradigm shifts in the financial sector is the introduction of artificial intelligence, which has both exciting and concerning implications for the future. Thankfully, financial advisors can minimize and mitigate these risks if they establish guidelines for the responsible use of the technology.

Artificial intelligence in the financial services industry

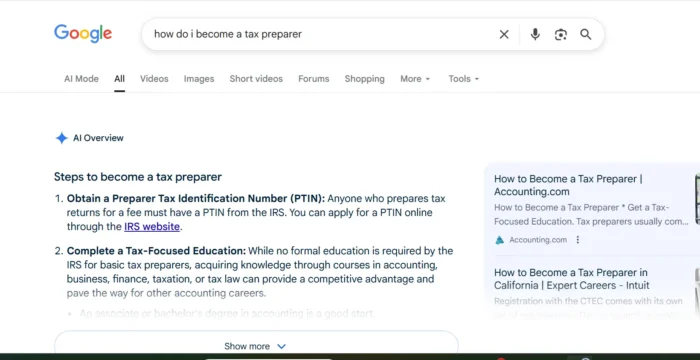

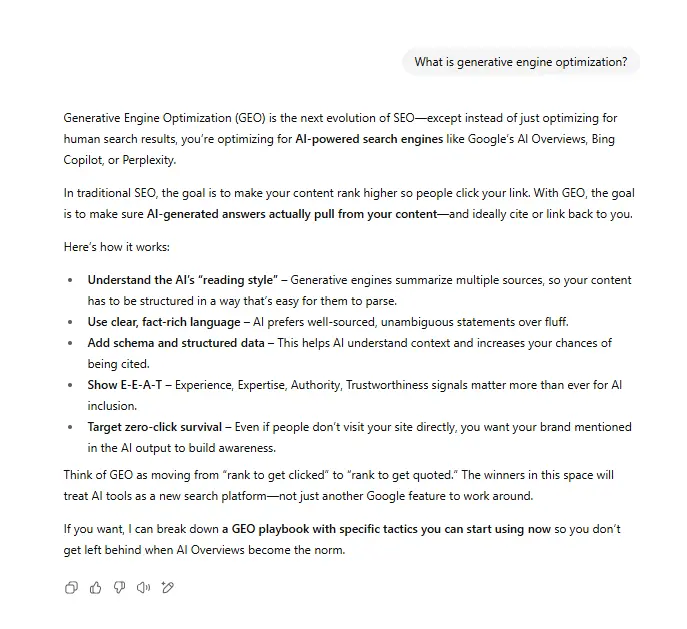

For financial advisors, the use cases for artificial intelligence are generally related to the technology’s advanced data processing capabilities. Advisors can run a data set through an AI model, which can research and analyze said data far more efficiently than a person could manually. The advisor can then use these analytics to provide customized, data-driven recommendations to their clients.

Critics of artificial intelligence have pointed out several ethical concerns that the technology creates, and the stakes are especially high in the financial services sector. If one of these ethical concerns begins to impact a financial advisor’s performance, clients lose money. Because of this, it is crucial that financial advisors implement a clear set of guidelines for the ethical and responsible use of AI, extending even beyond compliance with regulations.

In the financial services industry, the pivotal factor for success is trust. After all, clients entrust their hard-earned money to their financial advisors, so an advisor must earn their client’s trust. Advisors must carefully consider not only what tools they use but also how they use them, ensuring that every use case is in the best fiduciary interest of the client.

Concerns about AI for financial advisors

Financial advisors have to be particularly careful with the data they allow AI models to access, as much of their data is privileged or confidential, and many artificial intelligence programs use the information users feed them as training data. When financial advisors work with their clients’ personally identifiable information or financial data, security is paramount to ensure this information does not end up in the wrong hands. Thus, it is essential to read all terms of use and privacy policies carefully to understand how these platforms use and store data.
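One practical safeguard is to strip obvious identifiers from text before it ever reaches an external AI platform. The sketch below is only an illustration of that idea: the `PII_PATTERNS` table, the `redact` helper, and the sample note are all hypothetical, and three regular expressions are no substitute for a vetted PII-detection process.

```python
import re

# Hypothetical patterns for illustration only. Real client data needs a
# reviewed detection process, not a handful of regular expressions.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "ACCOUNT": re.compile(r"\b\d{8,17}\b"),
}


def redact(text: str) -> str:
    """Replace each match of a known pattern with a labeled placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


# Made-up example note; none of these values belong to a real person.
note = "Client jane.doe@example.com (SSN 123-45-6789) holds account 004512345678."
print(redact(note))
```

Redacting on the advisor’s side does not replace reading the platform’s terms of use, but it limits what a platform could retain or train on in the first place.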

Financial advisors hoping to use artificial intelligence models for research and analysis must also realize that these tools have inherent biases. Because AI is still entirely dependent on pre-existing data, any biases found within that data will be reflected in a model’s output. This is why any data set a financial analyst uses must satisfy the fundamental research principle of accurate representation. If a particular set of data is skewed, be it for or against particular companies, sectors, or trends, the model’s output cannot be trusted.

For instance, if an advisor is using an AI model to analyze historical trends and provide recommendations to their client, but the data being used to illustrate those trends is biased, the recommendations will not accurately reflect reality. The responsible use of artificial intelligence in the financial services sector demands that advisors actively identify and mitigate any biases and limitations the model may have.
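A simple first check for skew is to compare how often each sector appears in a data set against a reference mix the advisor considers representative. The following is a minimal sketch under stated assumptions: the `sector_skew` function, the `sector` field name, the benchmark shares, and the 10-point tolerance are all invented for illustration and are not an industry standard.

```python
from collections import Counter


def sector_skew(records, benchmark, tolerance=0.10):
    """Return sectors whose share of `records` differs from the benchmark
    share by more than `tolerance` (absolute difference in proportion)."""
    if not records:
        raise ValueError("records must not be empty")
    counts = Counter(r["sector"] for r in records)
    total = sum(counts.values())
    flagged = {}
    # Check every sector seen in the data or listed in the benchmark.
    for sector in set(counts) | set(benchmark):
        observed = counts.get(sector, 0) / total
        expected = benchmark.get(sector, 0.0)
        if abs(observed - expected) > tolerance:
            flagged[sector] = (round(observed, 2), expected)
    return flagged


# Made-up sample: 6 tech rows, 3 energy rows, 1 healthcare row.
records = (
    [{"sector": "tech"}] * 6
    + [{"sector": "energy"}] * 3
    + [{"sector": "healthcare"}]
)
# Hypothetical reference mix chosen by the advisor.
benchmark = {"tech": 0.40, "energy": 0.30, "healthcare": 0.30}
print(sector_skew(records, benchmark))
```

With this sample, tech is over-represented and healthcare under-represented relative to the assumed benchmark, so both are flagged while energy is not. A check like this only catches one kind of skew; it says nothing about biased time periods or survivorship effects.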

Another factor financial advisors must consider when using AI tools for research and analysis is transparency about that use. In most cases, it would be necessary to disclose the use of any artificial intelligence platforms to clients, and SEC rules prohibit financial advisors from making false statements or omissions about their advisory business. As such, financial advisors must understand how to accurately convey their use of AI technology to clients and reassure them that the advisor is acting in the clients’ best interests.

As with any other technology they use, financial advisors should take specific security measures around artificial intelligence. For example, advisors should implement access controls to ensure that only authorized users can reach AI programs that may store sensitive client information. These platforms should also be used only from secure networks to prevent unauthorized access.

The evolution of AI in finance

Finally, it is essential for those using artificial intelligence to remain flexible with their use and standards. Remember, AI is still in its infancy, and as people continue to adopt the technology and discover new use cases, our understanding of its ethical and responsible use will evolve.

It is the responsibility of users to stay abreast of emerging ethical concerns and adapt their practices accordingly. For financial advisors, this adaptability is vital, as clients will expect advisors to uphold the highest and most recent standards.

Artificial intelligence is a powerful tool, and its advanced data analytics capabilities show enormous potential to help make the jobs of financial advisors easier. However, more so than those in other industries, financial advisors must ensure that their clients’ data and information are handled properly.

Financial advisory and services firms may have a much better chance of earning the trust of investors by ensuring that the AI tools they use to build their solutions are aligned with the core principles of initiatives like the World Digital Governance, which promote user privacy and ethical AI along with transparency and explainable AI.

The future of AI in the financial sector rests on the shoulders of those who use the technology. If a robust set of guidelines can be established to ensure its fair and responsible use, financial advisors can make the most of this tool’s potential to grow their clients’ funds.

Troov
