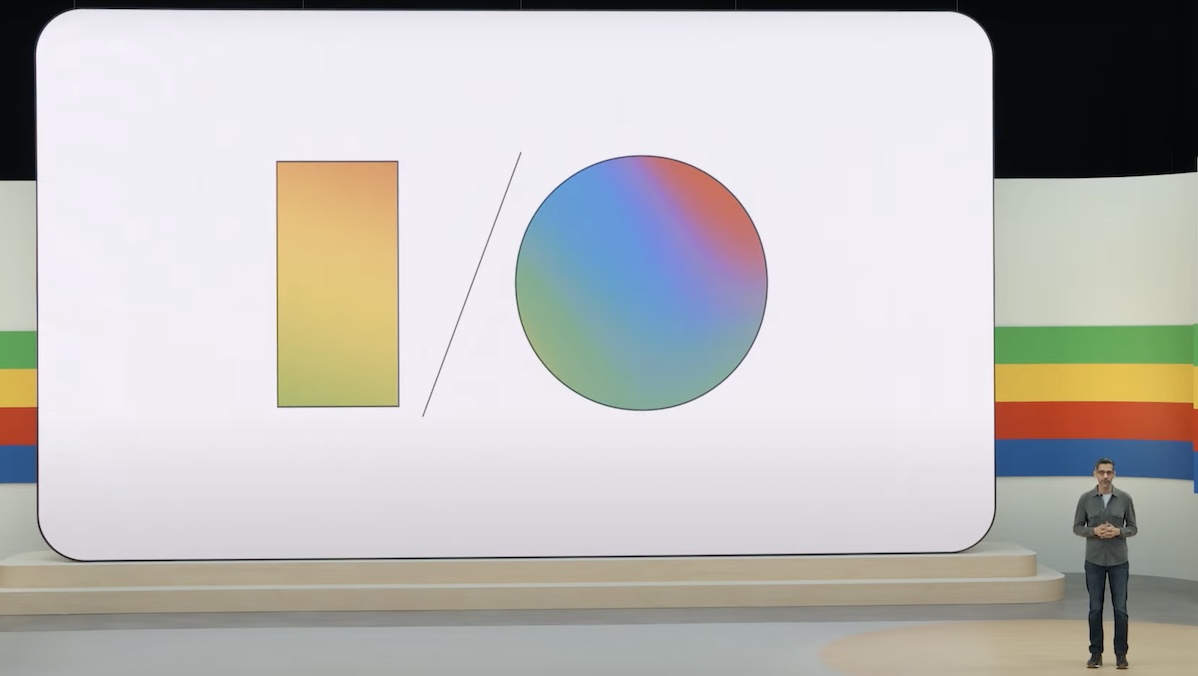

Google accelerates AI with Gemini

Google's 2024 I/O keynote showcased a deeper integration of Gemini AI into Google devices and software products

Google kicked off its I/O 2024 developer conference with a two-hour keynote address led by CEO Sundar Pichai, and, as expected, it was all about AI. Since Gemini was introduced in 2023, it has attracted 1.5 million developers and now powers Google products with 2 billion users. Google plans to accelerate its AI strategy further with a host of new advanced features.

Alongside a new initiative called Project Astra, updates to its Gemini chatbot, and the expansion of AI services across the Android and iOS mobile platforms, Google is also adding AI to Search.

Search with AI Overviews

Google was founded more than 25 years ago as a search engine, so search sits at the very core of Google's DNA. Adding AI Overviews to Search promises to change how you search for and find information.

AI Overviews are now available in the US and will roll out to more countries in the coming months. The feature uses a custom Gemini model with advanced multi-step reasoning, planning, and multimodal capabilities.

Ask Photos

Google is now integrating AI into every level of its apps on both Android and iOS, as well as into Workspace and even Photos.

Photos will gain an entirely new level of contextual understanding that goes well beyond "asking Google" to identify dog breeds. In Google's demonstration, you could ask Photos, "Remind me what themes we've had for Lena's birthday parties," and the AI not only understands that Lena is the user's daughter but also grasps the concept of "birthday themes," then finds, collates, and presents the relevant photos.

gif: Google

Project Astra AI agents coming to Android

Google will bring its latest Gemini AI models to Android devices, starting with its own Pixel smartphones later this year. This includes an upgraded Gemini Nano, the Gemini model that runs AI computation directly on the device.

Project Astra is a research prototype that Google describes as an "Advanced Seeing and Talking Responsive Agent." It is designed to understand and respond to a complex, dynamic world, remember what it learns, and interact in a more proactive and natural way than current assistants such as Siri and Google Assistant. Google demonstrated that Astra can already identify objects that make sounds, explain computer code shown to it on a monitor, and quickly deduce your location by identifying geospatial cues.

Google has made the entire 2024 Google I/O keynote available on YouTube, if you have two hours to spare.

BigThink