Google: Database Speed Beats Page Count For Crawl Budget via @sejournal, @MattGSouthern

Google reveals database speed matters more than page count for crawl budget. Sites under 1M pages remain safe. The post Google: Database Speed Beats Page Count For Crawl Budget appeared first on Search Engine Journal.

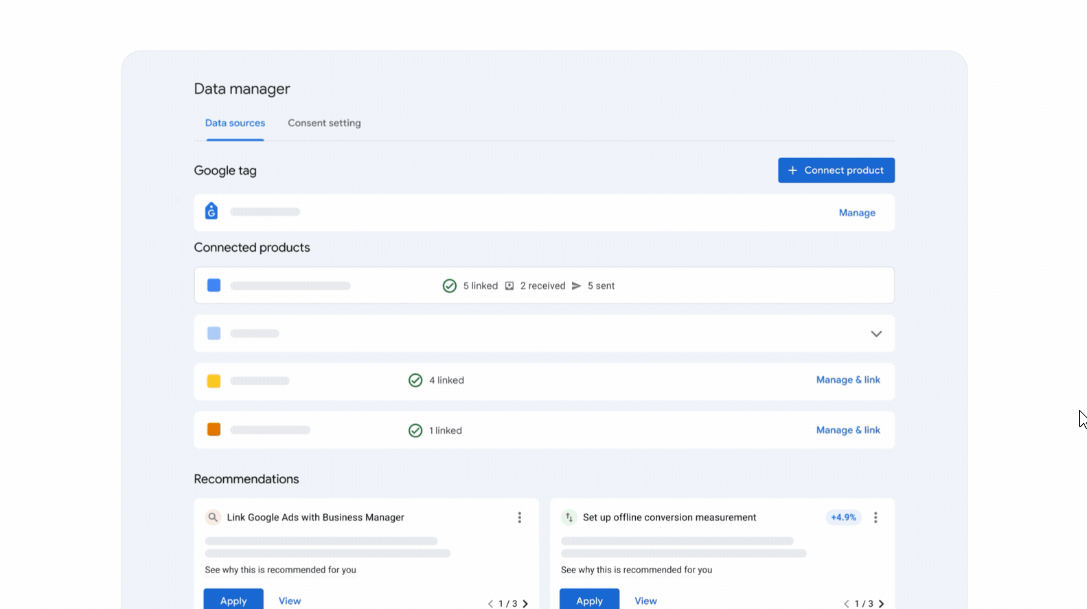

Google has confirmed that most websites still don’t need to worry about crawl budget unless they have over one million pages. However, there’s a twist.

Google Search Relations team member Gary Illyes revealed on a recent podcast that how quickly your database operates matters more than the number of pages you have.

This update comes five years after Google shared similar guidance on crawl budgets. Despite significant changes in web technology, Google’s advice remains unchanged.

The Million-Page Rule Stays The Same

During the Search Off the Record podcast, Illyes maintained Google’s long-held position when co-host Martin Splitt inquired about crawl budget thresholds.

Illyes stated:

“I would say 1 million is okay probably.”

This suggests that sites with fewer than a million pages can probably stop worrying about their crawl budget.

What’s surprising is that this number has remained unchanged since 2020. The web has grown significantly since then, with more JavaScript, more dynamic content, and more complex websites. Yet Google’s threshold has stayed the same.

Your Database Speed Is What Matters

Here’s the big news: Illyes revealed that slow databases hinder crawling more than having a large number of pages.

Illyes explained:

“If you are making expensive database calls, that’s going to cost the server a lot.”

A site with 500,000 pages but slow database queries might face more crawl issues than a site with 2 million fast-loading static pages.
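Google hasn't described how it turns server response times into crawl capacity, so the following is only a toy model to make that comparison concrete. It assumes, hypothetically, that a crawler keeps a fixed number of parallel connections open to a host and that each fetch occupies one connection for the page's full response time. The 2-second, 0.1-second, and 5-connection figures are invented for illustration, and Googlebot's real scheduling adapts to server health in ways this sketch ignores.

```python
# Toy back-of-the-envelope model (an assumption for illustration only):
# a crawler holds a fixed number of parallel connections to one host,
# and each fetch ties up a connection for the page's response time.

SECONDS_PER_DAY = 86_400


def days_to_fetch_all(pages: int, avg_response_s: float, connections: int) -> float:
    """Days needed to fetch every page once under the toy model."""
    total_busy_seconds = pages * avg_response_s
    return total_busy_seconds / (connections * SECONDS_PER_DAY)


# Hypothetical numbers: 2 s per page for a query-heavy site,
# 0.1 s per page for a static site, 5 parallel connections for both.
slow_db_site = days_to_fetch_all(500_000, 2.0, 5)
static_site = days_to_fetch_all(2_000_000, 0.1, 5)

print(f"500k pages @ 2.0 s: {slow_db_site:.2f} days")
print(f"2M pages @ 0.1 s:   {static_site:.2f} days")
```

Under these assumptions the 500,000-page site needs five times as long to be fetched once as the 2-million-page static site, because the model spends total server time (pages × response time), not page count alone.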

What does this mean? You need to evaluate your database performance, not just count the number of pages. Sites with dynamic content, complex queries, or real-time data must prioritize speed and performance.
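One way to start evaluating database performance is to time the queries behind your page templates and check whether they use an index. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a production database; the `pages` table, its columns, and the row counts are hypothetical. It reports the median query time and SQLite's `EXPLAIN QUERY PLAN` output before and after adding an index.

```python
import sqlite3
import time


def time_query(conn: sqlite3.Connection, sql: str, params: tuple, runs: int = 20) -> float:
    """Return the median wall-clock time in milliseconds for running `sql`."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql, params).fetchall()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    return samples[len(samples) // 2]


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail strings joined into one line."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


# Hypothetical page store: 100,000 rows spread over 1,000 categories.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pages (id INTEGER PRIMARY KEY, category TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO pages (category, body) VALUES (?, ?)",
    ((f"cat-{i % 1000}", f"body {i}") for i in range(100_000)),
)

SQL = "SELECT id, body FROM pages WHERE category = ?"
PARAMS = ("cat-42",)

# Without an index, SQLite has to scan the whole table.
before_plan = query_plan(conn, SQL, PARAMS)
before_ms = time_query(conn, SQL, PARAMS)

conn.execute("CREATE INDEX idx_pages_category ON pages (category)")

# With the index, SQLite can jump straight to the matching rows.
after_plan = query_plan(conn, SQL, PARAMS)
after_ms = time_query(conn, SQL, PARAMS)

print(f"no index:   {before_ms:.2f} ms  plan: {before_plan}")
print(f"with index: {after_ms:.2f} ms  plan: {after_plan}")
```

Absolute timings depend on the machine and the database engine; the useful signal is the relative change and whether the plan switches from a full table scan to an index search. MySQL and PostgreSQL expose similar information through their own `EXPLAIN` statements and slow-query logging.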

The Real Resource Hog: Indexing, Not Crawling

Illyes shared a sentiment that contradicts what many SEOs believe.

He said:

“It’s not crawling that is eating up the resources, it’s indexing and potentially serving or what you are doing with the data when you are processing that data.”

Consider what this means. If crawling doesn’t consume many resources, then blocking Googlebot may not be helpful. Instead, focus on making your content easier for Google to process after it has been crawled.

How We Got Here

The podcast provided some context about scale. In 1994, the World Wide Web Worm indexed only 110,000 pages, while WebCrawler indexed 2 million. Illyes called these numbers “cute” compared to today.

This helps explain why the one-million-page mark has remained unchanged. What once seemed huge in the early web is now just a medium-sized site. Google’s systems have expanded to manage this without altering the threshold.

Why The Threshold Remains Stable

Google has been striving to reduce its crawling footprint. Illyes revealed why that’s a challenge.

He explained:

“You saved seven bytes from each request that you make and then this new product will add back eight.”

This push-and-pull between efficiency improvements and new features helps explain why the crawl budget threshold remains consistent. While Google’s infrastructure evolves, the basic math regarding when crawl budget matters stays unchanged.

What You Should Do Now

Based on these insights, here’s what you should focus on:

Sites Under 1 Million Pages:

Continue with your current strategy. Prioritize excellent content and user experience. Crawl budget isn’t a concern for you.

Larger Sites:

Enhance database efficiency as your new priority. Review:

All Sites:

Redirect focus from crawl prevention to indexing optimization. Since crawling isn’t the resource issue, assist Google in processing your content more efficiently.

Key Technical Checks:

- Database query performance
- Server response times
- Content delivery optimization
- Proper caching implementation

Looking Ahead

Google’s consistent crawl budget guidance shows that some SEO fundamentals really are stable. Most sites don’t need to worry about crawl budget.

However, the insight regarding database efficiency shifts the conversation for larger sites. It’s not just about the number of pages you have; it’s about how efficiently you serve them.

For SEO professionals, this means incorporating database performance into your technical SEO audits. For developers, it underscores the significance of query optimization and caching strategies.
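As a minimal sketch of a caching strategy, the example below memoizes a page-rendering function with Python's `functools.lru_cache`, so repeated requests for the same URL skip the database. The `pages` table, `fetch_page_from_db`, and `render_page` are hypothetical names, and the in-process cache is an assumption made for brevity; it is not how any particular CMS implements caching, nor a method Google recommends.

```python
import functools
import sqlite3

# Hypothetical setup: an in-memory SQLite table stands in for the site's database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pages (slug TEXT PRIMARY KEY, title TEXT, body TEXT)")
conn.executemany(
    "INSERT INTO pages VALUES (?, ?, ?)",
    [(f"page-{i}", f"Title {i}", f"Body text {i}") for i in range(10)],
)

db_calls = 0  # counts how often the database is actually queried


def fetch_page_from_db(slug: str):
    """Stand-in for an 'expensive' database call."""
    global db_calls
    db_calls += 1
    return conn.execute(
        "SELECT title, body FROM pages WHERE slug = ?", (slug,)
    ).fetchone()


@functools.lru_cache(maxsize=1024)
def render_page(slug: str) -> str:
    """Render HTML once per slug; repeat requests are served from the cache."""
    row = fetch_page_from_db(slug)
    if row is None:
        return "<h1>Not found</h1>"
    title, body = row
    return f"<h1>{title}</h1><p>{body}</p>"


# Simulate 1,000 requests (crawler or users) cycling over the same 10 URLs.
for n in range(1_000):
    render_page(f"page-{n % 10}")

print("database calls:", db_calls)
print(render_page.cache_info())
```

An in-process cache like this is not shared between server processes and never expires on its own, so content updates would need `render_page.cache_clear()` or a time-based invalidation scheme. Production sites more often place a shared cache (for example Redis or Memcached) or an HTTP cache or CDN in front of the database, but the principle is the same: pay for the expensive query once, then serve the stored result.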

Five years from now, the million-page threshold might still exist. But sites that optimize their database performance today will be prepared for whatever comes next.


Featured Image: Novikov Aleksey/Shutterstock

ValVades
