Google PaLM Algorithm: Path To Next Generation Search via @sejournal, @martinibuster

Google's new algorithm is a step toward realizing Google's Pathways AI architecture and provides a peek into the next generation of search. The post Google PaLM Algorithm: Path To Next Generation Search appeared first on Search Engine Journal.

Google announced a breakthrough in the effort to create an AI architecture that can handle millions of different tasks, including complex learning and reasoning. The new system is called the Pathways Language Model, referred to as PaLM.

PaLM outperforms the current AI state of the art and beats average human performance on a number of language and reasoning tests.

But the researchers also point out that they cannot shake the limitations inherent in large-scale language models that can unintentionally result in negative ethical outcomes.

Background Information

The next few sections provide background information that clarifies what this algorithm is about.

Few-Shot Learning

Few-shot learning is the next stage of machine learning, moving beyond conventional deep learning that depends on vast amounts of training data.

Google Brain researcher Hugo Larochelle (@hugo_larochelle), in a presentation titled Generalizing from Few Examples with Meta-Learning (video), explained that the problem with deep learning is that researchers had to collect a vast amount of data, which required a significant amount of human labor.

He pointed out that deep learning will likely not be the path toward an AI that can solve many tasks because each ability that an AI learns requires millions of examples to learn from.

Larochelle explains:

“…the idea is that we will try to attack this problem very directly, this problem of few-shot learning, which is this problem of generalizing from little amounts of data.

…the main idea in what I’ll present is that instead of trying to define what that learning algorithm is by N and use our intuition as to what is the right algorithm for doing few-shot learning, but actually try to learn that algorithm in an end-to-end way.

And that’s why we call it learning to learn or I like to call it, meta learning.”

The goal of the few-shot approach is to approximate how humans learn different things and then combine those bits of knowledge to solve new problems that they have never encountered before.

The advantage then is a machine that can leverage all of the knowledge that it has to solve new problems.

In the case of PaLM, an example of this capability is its ability to explain a joke that it has never encountered before.

Pathways AI

In October 2021 Google published an article laying out the goals for a new AI architecture called Pathways.

Pathways represented a new chapter in the ongoing progress in developing AI systems.

The usual approach was to create algorithms that were trained to do specific things very well.

The Pathways approach is to create a single AI model that can solve all of the problems by learning how to solve them, in that way avoiding the less efficient way of training thousands of algorithms to complete thousands of different tasks.

According to the Pathways document:

“Instead, we’d like to train one model that can not only handle many separate tasks, but also draw upon and combine its existing skills to learn new tasks faster and more effectively.

That way what a model learns by training on one task – say, learning how aerial images can predict the elevation of a landscape – could help it learn another task — say, predicting how flood waters will flow through that terrain.”

Pathways defined Google’s path forward for taking AI to the next level to close the gap between machine learning and human learning.

Google’s newest model, called Pathways Language Model (PaLM), is this next step and, according to the new research paper, PaLM represents significant progress in the field of AI.

What Makes Google PaLM Notable

PaLM scales the few-shot learning process.

According to the research paper:

“Large language models have been shown to achieve remarkable performance across a variety of natural language tasks using few-shot learning, which drastically reduces the number of task-specific training examples needed to adapt the model to a particular application.

To further our understanding of the impact of scale on few-shot learning, we trained a 540-billion parameter, densely activated, Transformer language model, which we call Pathways Language Model (PaLM).”
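To make the idea of few-shot learning concrete, here is a minimal sketch of how a few-shot prompt might be assembled: a handful of solved examples followed by one unsolved query. The `build_few_shot_prompt` helper, the `Q:`/`A:` layout and the translation examples are illustrative assumptions, not PaLM's actual interface or data.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Join a few solved examples with one unsolved query (hypothetical format)."""
    # Each solved example is shown to the model as a question/answer pair.
    blocks = [f"Q: {question}\nA: {answer}" for question, answer in examples]
    # The final block leaves the answer empty for the model to complete.
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


# Two solved examples are the only task-specific "training data" in the prompt.
examples = [
    ("Translate 'chat' from French to English.", "cat"),
    ("Translate 'chien' from French to English.", "dog"),
]
prompt = build_few_shot_prompt(examples, "Translate 'oiseau' from French to English.")
print(prompt)
```

The point of the sketch is that the task is described by a couple of examples inside the prompt rather than by millions of labeled training examples.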

There are many research papers published that describe algorithms that don’t perform better than the current state of the art or only achieve an incremental improvement.

That’s not the case with PaLM. The researchers claim significant improvements over the current best models and even claim that PaLM outperforms human benchmarks.

That level of success is what makes this new algorithm notable.

The researchers write:

“We demonstrate continued benefits of scaling by achieving state-of-the-art few-shot learning results on hundreds of language understanding and generation benchmarks.

On a number of these tasks, PaLM 540B achieves breakthrough performance, outperforming the finetuned state-of-the-art on a suite of multi-step reasoning tasks, and outperforming average human performance on the recently released BIG-bench benchmark.

A significant number of BIG-bench tasks showed discontinuous improvements from model scale, meaning that performance steeply increased as we scaled to our largest model.”

PaLM outperforms the state of the art in English natural language processing tasks and that makes PaLM important and notable.

On a collaborative benchmark called BIG-bench consisting of over 150 tasks (related to reasoning, translation, question answering), PaLM outperformed the state of the art but there were areas where it did not do as well.

It is worth noting that humans outscored PaLM on 35% of the tasks, particularly mathematics-related tasks (see section 6.2 BIG-bench of the research paper, page 17).

PaLM was better at translating another language into English than translating English to other languages. The researchers stated that this is a common problem that could be solved by prioritizing more multilingual data.

Nevertheless, PaLM pretty much outperformed other language models and humans across the board.

Ability to Reason

Of particular note was its performance on arithmetic and commonsense reasoning tasks.

Example of an arithmetic task:

Question:

Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

Answer:

The answer is 11.
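The intermediate steps behind that answer can be written out explicitly. The short sketch below only reproduces the arithmetic of the question; the variable names are illustrative and it says nothing about how PaLM computes the answer internally.

```python
# Quantities taken directly from the question.
initial_balls = 5   # Roger starts with 5 tennis balls
cans_bought = 2     # he buys 2 more cans
balls_per_can = 3   # each can holds 3 tennis balls

# Step 1: tennis balls added by the new cans.
new_balls = cans_bought * balls_per_can

# Step 2: add them to the tennis balls he already had.
total_balls = initial_balls + new_balls

print(f"The answer is {total_balls}.")
```

Solving the problem takes two chained steps, a multiplication followed by an addition, which is why it counts as a multi-step reasoning task.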

Example of commonsense reasoning:

Question:

Sean was in a rush to get home, but the light turned yellow and he was forced to do what?

Answer Choices: (a) take time (b) dawdle (c) go slowly (d) ocean (e) slow down

Answer:

The answer is (e) slow down.

Generating Explanations

Another extraordinary example of how advanced PaLM is comes from tests of generating explanations.

Here’s how the research paper explains it:

“The goal of this section is to showcase the capabilities of PaLM with regards to explanatory language generation, for prompts that require a complex combination of logical inference chaining, world knowledge, abstract language understanding, and lexicographic language understanding (e.g., puns).”

Explanations of Jokes

Here is an example of the language model explaining a joke:

Joke:

I was going to fly to visit my family on April 6th. My mom said “Oh great, your step dad’s poetry reading is that night!”

So now I’m flying in on April 7th.

Model Explanation:

The joke is that the speaker’s mother is trying to get them to go to their step dad’s poetry reading, but the speaker doesn’t want to go, so they are changing their flight to the day after the poetry reading.

Inference Chaining

Here’s an example of inference chaining, where multiple concepts and entities need to be correctly joined and considered to create an answer.

“Input:

Guido was writing a Python program. He keeps getting confused by variable scope and looking it up on StackOverflow.

Is it possible that the Guido in the prompt refers to Guido van Rossum?

Model Output:

Guido van Rossum is the creator of Python. He would not need to look up variable scope on StackOverflow. The answer is “no”.”

Here is one more example:

“Input:

Jennifer looked out her window and sees a really cool cloud below her. She unbuckles her seatbelt and heads to the bathroom. Is Jennifer probably traveling more than 300 miles per hour relative to the earth?

Model Output:

300 miles per hour is about 480 km/h. This is about the speed of a commercial airplane. Clouds are usually below airplanes, so Jennifer is probably on an airplane.

The answer is “yes”.”

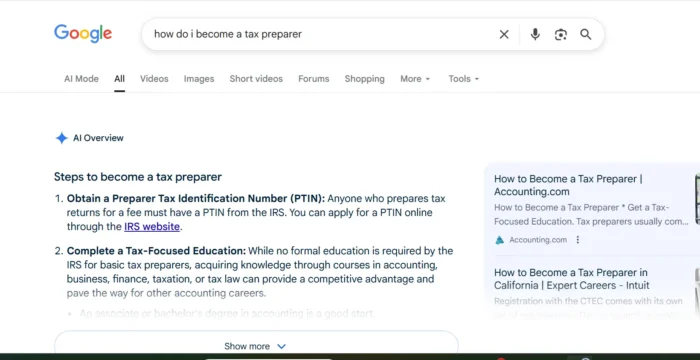
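The unit conversion in the model output for the Jennifer example can be checked with a few lines of arithmetic. This is only a sanity check of the "about 480 km/h" figure, using the standard definition of the international mile (1.609344 km); the variable names are illustrative.

```python
KM_PER_MILE = 1.609344  # exact length of the international mile in kilometers

speed_mph = 300
speed_kmh = speed_mph * KM_PER_MILE

# Rounding to the nearest ten gives the "about 480 km/h" figure in the model output.
speed_kmh_rounded = round(speed_kmh, -1)

print(f"{speed_mph} mph is roughly {speed_kmh:.1f} km/h")
print(f"Rounded to the nearest ten: {speed_kmh_rounded:.0f} km/h")
```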

Next Generation Search Engine?

The above examples of PaLM’s ability to perform complex reasoning demonstrate how a next generation search engine may be able to answer complex questions utilizing knowledge from the Internet and other sources.

Achieving an AI architecture that can produce answers that reflect the world around us is one of the stated goals of Google Pathways and PaLM is a step in that direction.

However, the authors of the research emphasized that PaLM is not the final word on AI and search. They were explicit in stating that PaLM is a first step toward the next kind of search engine that Pathways envisions.

Before we proceed further, there are two words, jargon so to speak, that are important to understand in order to get what PaLM is about: modalities and generalization.

The word “modalities” is a reference to how things are experienced or the state in which they exist, like text that is read, images that are seen and things that are listened to.

The word “generalization” in the context of machine learning is about the ability of a language model to solve tasks that it hasn’t previously been trained on.

The researchers noted:

“PaLM is only the first step in our vision towards establishing Pathways as the future of ML scaling at Google and beyond.

We believe that PaLM demonstrates a strong foundation in our ultimate goal of developing a large-scale, modularized system that will have broad generalization capabilities across multiple modalities.”

Real-World Risks and Ethical Considerations

Something different about this research paper is that the researchers warn about ethical considerations.

They state that large-scale language models trained on web data absorb many of the “toxic” stereotypes and social disparities that are spread on the web and they state that PaLM is not resistant to those unwanted influences.

The research paper cites a research paper from 2021 that explores how large-scale language models can promote the following harms:

Discrimination, Exclusion and Toxicity

Information Hazards

Misinformation Harms

Malicious Uses

Human-Computer Interaction Harms

Automation, Access, and Environmental Harms

Lastly, the researchers noted that PaLM does indeed reflect toxic social stereotypes and made clear that filtering out these biases is challenging.

The PaLM researchers explain:

“Our analysis reveals that our training data, and consequently PaLM, do reflect various social stereotypes and toxicity associations around identity terms.

Removing these associations, however, is non-trivial… Future work should look into effectively tackling such undesirable biases in data, and their influence on model behavior.

Meanwhile, any real-world use of PaLM for downstream tasks should perform further contextualized fairness evaluations to assess the potential harms and introduce appropriate mitigation and protections.”

PaLM can be viewed as a peek into what the next generation of search will look like. The PaLM researchers make extraordinary claims of besting the state of the art, but they also state that there is still more work to do, including finding a way to mitigate the harmful spread of misinformation, toxic stereotypes and other unwanted results.

Citation

Read Google’s AI Blog Article About PaLM

Pathways Language Model (PaLM): Scaling to 540 Billion Parameters for Breakthrough Performance
