How to Perform an In-Depth Technical SEO Audit

A technical audit is an ongoing process. Search engines’ guidelines evolve, your webpages grow in number, and regular checks can show you where something went wrong.

The technical audit covers a wide range of issues that affect a website’s indexing, compliance with search engine guidelines, overall user experience, and more.

Fixing technical issues facilitates higher ranking as it significantly improves a website’s performance.

Some factors impact ranking directly, while others affect it indirectly or only in a minor way.

Let’s start with the crucial ones that should be addressed first and run through the rest step by step.

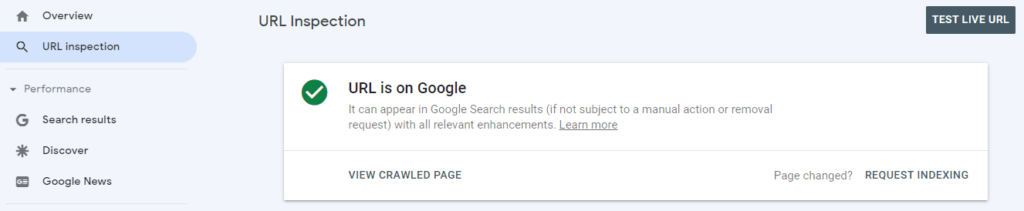

Begin with a website URL

First of all, check whether your website URL is indexed by search engines.

You can do it easily in Google Search Console with the help of the URL Inspection tool.

How do you avoid being removed from an index?

Remember that domain history can prevent you from getting indexed and ranked high. Resolve any manual actions first. As long as you don’t receive new manual actions, even a period of suspicious activity will eventually be offset by good history.

It is also important to check domain history before you buy a domain, because a domain that was penalized under its previous owner can carry that history over to you. A WHOIS lookup shows a domain’s registration details, and once the domain is verified in Google Search Console, its Manual actions report shows whether any penalties are in place.

Determine your URL geolocation

In case you target some specific or even multiple locations, properly done geo-targeting is important.

You need to check the correct usage of hreflang tags, which tell Google the language of a page and, optionally, the region it targets.

You can check your localization correctness via WebSite Auditor, a comprehensive site audit tool. Go to Site Structure > Site Audit to see how well it is implemented.

How do you implement your website geolocation correctly?

If you want to focus on a particular market, it is better to get a country-specific domain, e.g., www.websitename.fr.

As for multilingual websites, you need to properly implement the hreflang attribute. What should you pay attention to?

- Incorrect hreflang value. Make sure you don’t use nonexistent hreflang values. Check for hreflang tag generators or checkers to automate the process.
- Incorrect or nonexistent URL. Be careful and specify a corresponding language URL next to each hreflang attribute indicating a full URL path.
- No-return hreflang tags. If you add a hreflang annotation pointing to some page, don’t forget to add an annotation pointing back from this page.
- Make it easy for Google to detect your page language. Don’t mislead search engines by writing content in one language and navigation menu in another, and avoid irrelevant language mixing.
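The first three checks above can be sketched in a few lines of Python. This is a minimal illustration, not a full validator: the `audit_hreflang` function, the simplified pattern, and the example URLs are all assumptions made for the sketch, and the pattern only checks the format of a value, not whether the language or region code actually exists.

```python
import re

# Simplified format check: a two-letter language code, optionally followed by
# a two-letter region code, or the special value "x-default".
# (Assumption: script subtags such as "zh-Hant" are left out for brevity.)
HREFLANG_RE = re.compile(r"^(x-default|[a-z]{2}(-[a-z]{2})?)$", re.IGNORECASE)


def audit_hreflang(annotations):
    """Return a list of problems found in hreflang annotations.

    `annotations` maps each page URL to a dict of {hreflang value: target URL}.
    """
    problems = []
    for page, alternates in annotations.items():
        for value, target in alternates.items():
            if not HREFLANG_RE.match(value):
                problems.append(f"{page}: invalid hreflang value '{value}'")
            if not target.startswith(("http://", "https://")):
                problems.append(f"{page}: '{value}' target is not a full URL: {target}")
            # A return tag means the target page lists the source page
            # among its own alternates.
            elif target != page and page not in annotations.get(target, {}).values():
                problems.append(f"{page}: no return tag from {target}")
    return problems


# Hypothetical data: the French page does not point back to the English one.
pages = {
    "https://example.com/": {
        "en": "https://example.com/",
        "fr": "https://example.com/fr/",
    },
    "https://example.com/fr/": {
        "fr": "https://example.com/fr/",
    },
}

for problem in audit_hreflang(pages):
    print(problem)
```

In a real audit, the `pages` mapping would be filled from crawled `<link rel="alternate" hreflang="...">` tags rather than typed by hand.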

In case you use geo IP redirect, ensure you don’t block Googlebot. Enable geo-redirection for Googlebot as you would do for your users so that a website version you redirect to could be indexed.

Also, remember that automated redirection may cause some user experience issues. The best option is to let users reach the homepage and pick the necessary international website version themselves.

Ensure website security

Search engines tend to prioritize safe websites. That’s why HTTPS is a ranking signal, though relatively small.

Mind cybersecurity threats as they impact your SEO. These are the possible issues that may require your attention:

- A hacked website can be expelled from indexing.
- A website that isn’t secured with an SSL certificate misses the HTTPS ranking signal and gets flagged as “Not secure” by browsers such as Chrome, as they try to protect user data.
- If your website is infected by malware, it can get blacklisted.
- Downtime due to a cyberattack can push your pages down in search results.

What should you keep in mind to ensure a secure website?

Run a regular security check, scan vulnerabilities, and regularly update your website. Prevent or quickly mitigate DoS attacks and malware on your website.

Switching from HTTP to HTTPS can improve your rankings, whereas security issues endanger them.

Encrypting your website with an SSL certificate is a must if your website processes user passwords and credit card details.

See whether your content is indexed

Checking Google’s cache of your URL can help you find out. Just enter “https://google.com/search?q=cache:https://example.com/”, replacing example.com with your own website.

Having a page in Google’s cache indicates it is indexable. However, if it hasn’t been cached, you’ll need to investigate further which particular issues prevent Googlebot from accessing it.

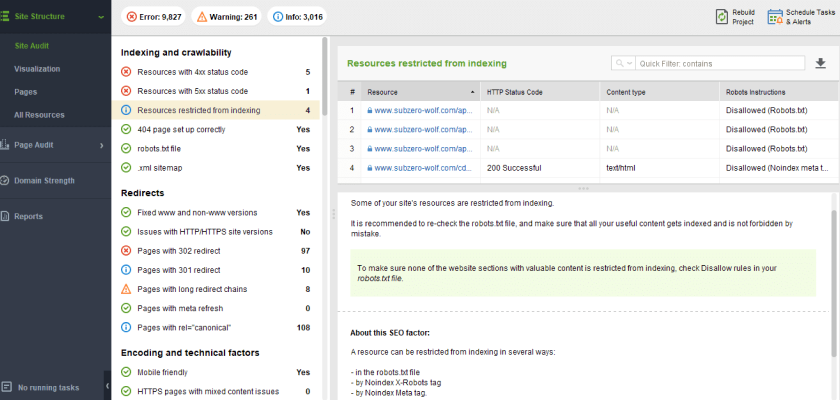

So, how do you check your pages for indexation issues? Go to Google Search Console > Index > Coverage and:

- Discover the pages marked “noindex”.
- Ensure that a URL isn’t disallowed by a robots.txt file.
- See if a page was blocked by a meta robots tag parameter.

Also, you can do it via WebSite Auditor. Go to the Site Structure module > Site Audit and quickly check whether your pages were restricted from indexing, with all the needed details shown in one place.
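If you prefer to script these checks, the sketch below shows one possible approach using only the Python standard library. The `indexability_issues` helper and the sample inputs are hypothetical; it assumes you have already downloaded the robots.txt file, the page HTML, and the response headers by some other means.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def indexability_issues(url, robots_txt, html, headers=None, user_agent="Googlebot"):
    """Return the reasons why `url` may be kept out of the index."""
    issues = []

    # 1. Is the URL disallowed in robots.txt for this crawler?
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        issues.append("disallowed by robots.txt")

    # 2. Does the page carry a noindex meta robots tag?
    meta = RobotsMetaParser()
    meta.feed(html)
    if "noindex" in meta.directives:
        issues.append("noindex in meta robots tag")

    # 3. Is noindex sent as an HTTP header instead?
    x_robots = (headers or {}).get("X-Robots-Tag", "")
    if "noindex" in x_robots.lower():
        issues.append("noindex in X-Robots-Tag header")

    return issues


# Hypothetical inputs for illustration.
robots_txt = "User-agent: *\nDisallow: /private/\n"
html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(indexability_issues("https://example.com/private/report.html", robots_txt, html))
```

An empty list means none of the three restrictions were found; it does not prove that the page is actually indexed.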

What if you don’t want your content to be indexed?

Google recommends a “noindex” meta tag over a robots.txt disallow rule for keeping a page out of search results, because a page blocked in robots.txt can still be indexed if there are links leading to it.

To keep content out of the index:

- Mark the page “noindex” with a meta robots tag or an X-Robots-Tag HTTP header, and don’t disallow it in robots.txt, otherwise the crawler never gets to see the directive.
- Opt for password protection to keep your confidential pages closed from viewers, as malicious bots might ignore meta directives.

Avoid duplicate content

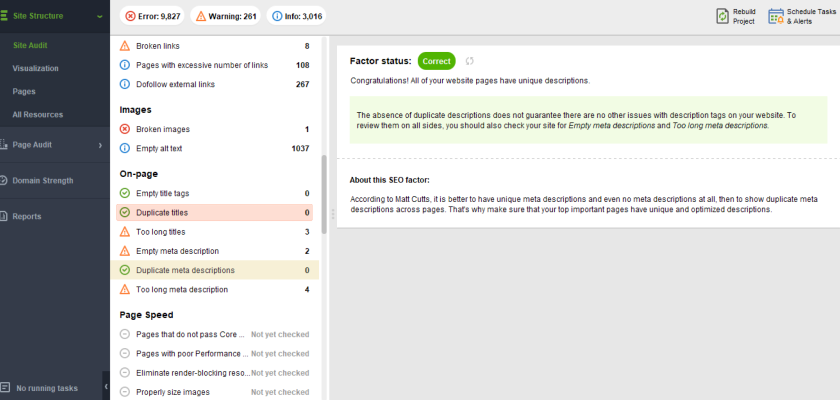

Duplicate titles and meta descriptions undermine your pages’ ranking potential. A copy-pasted description added to multiple items decreases their chances of ranking higher.

Duplicated titles and meta descriptions can be detected using WebSite Auditor. Go to Site Structure > Site Audit and check the On-Page section.

How to fix any type of duplicate content issue

A search engine crawling duplicate content doesn’t know which page to give priority to. There are two situations you should manage:

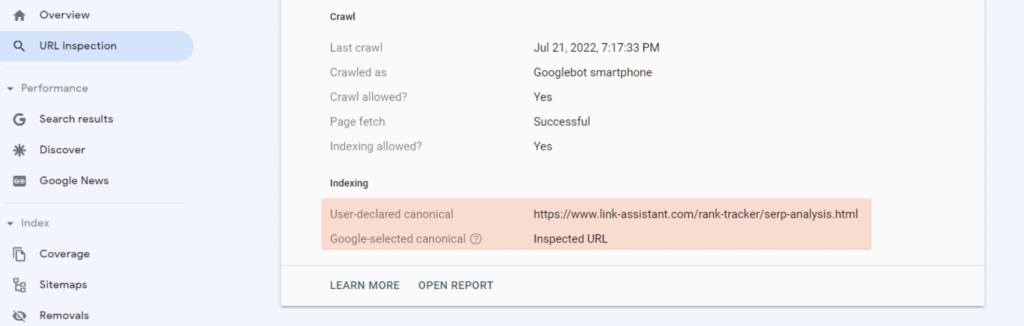

1. Duplicate content found across the web. Here you need canonicalization. Add a canonical tag to your page and make sure Google agrees by checking its pick for a canonical URL in Google Search Console > URL Inspection > Coverage report.

2. Duplicate content within a website. There are two options to deal with it:

- in case the content is duplicated by mistake, rewrite it or get rid of it;
- if the duplicate content should stay on your website, implement canonicalization.

If it’s a printer version of a page or a PDF repeating the webpage content, “noindex” it: use a meta robots tag for HTML pages and an X-Robots-Tag HTTP header for PDFs, which cannot carry meta tags.

Note: Mind crawl budget. Search engines still have to crawl a page to see its “noindex” directive, but keeping unnecessary or duplicate content out of the index helps them focus on the pages that matter.
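To spot-check canonicalization yourself, you could read the canonical link out of a page’s HTML. The sketch below is one way to do it with the Python standard library; `canonical_status` and the example URLs are hypothetical names introduced for illustration, and the comparison is a plain string match with no URL normalization.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_status(page_url, html):
    """Describe how a page declares its canonical URL."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing canonical"
    if len(set(parser.canonicals)) > 1:
        return "conflicting canonicals"
    if parser.canonicals[0] == page_url:
        return "self-canonical"
    return f"canonicalized to {parser.canonicals[0]}"


# Hypothetical printer-friendly page that points to the main article.
print_page = '<html><head><link rel="canonical" href="https://example.com/guide"></head></html>'
print(canonical_status("https://example.com/guide/print", print_page))
```

This only tells you what the page declares. Which URL Google actually picked as canonical still has to be checked in Google Search Console.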

Check your website architecture

Website architecture is another important point in your technical SEO audit. The clearer your architecture is, the easier it is for search engines to crawl your website. Thus:

1. Analyze your site structure, as it can influence your ranking. The most popular types are a tree model (hierarchical), a sequential model (linear), a network model (webbed), and a database model. See which one suits you.

Note: John Mueller, Google’s Search Advocate, suggests that websites aim for a pyramid structure. It has a clear hierarchy of categories and subcategories and keeps the number of clicks needed to reach your content moderate.

2. Scan your navigation menu and categorization. They should highlight the most important pages and systematize the information on a website.

3. Make sure you show breadcrumbs. Google uses breadcrumbs to “categorize the information from the page in search results”.

4. Assess the quality and density of your internal linking. Linking similar content creates topic clusters, facilitates navigation, and distributes authority between your pages.

5. Try to keep your pages no more than 3 clicks away from your homepage.
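Click depth is easy to compute once you have a map of internal links: a breadth-first search from the homepage gives the minimum number of clicks to every page. The sketch below assumes a hypothetical `site` dictionary that maps each page to the pages it links to; in practice a crawler would produce this data.

```python
from collections import deque


def click_depths(links, homepage):
    """Return {page: minimum number of clicks from the homepage}."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/seo-audit/"],
    "/products/": ["/products/widgets/"],
    "/products/widgets/": ["/products/widgets/blue/"],
    "/products/widgets/blue/": ["/products/widgets/blue/specs/"],
    "/old-landing/": ["/"],
}

depths = click_depths(site, "/")

# Pages that need more than 3 clicks to reach.
too_deep = sorted(page for page, depth in depths.items() if depth > 3)

# Known pages that cannot be reached from the homepage at all.
unreachable = sorted(set(site) - set(depths))

print("Deeper than 3 clicks:", too_deep)
print("Not reachable from the homepage:", unreachable)
```

Pages in `too_deep` are candidates for extra internal links from higher-level pages, and pages in `unreachable` have no inbound path from the homepage.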

Fix navigation issues

Isolated pages don’t contribute to your website’s coherence. Poor navigation increases bounce rate as untraceable content worsens the user experience.

You can easily discover your site’s navigational deficiencies in WebSite Auditor. Go to Site Structure > Visualization to detect orphan pages. It’s up to you whether to remove them or link to them from other pages.

The tool helps you:

- analyze the depth of the navigation, as it gives you a complete view of your website pages (you can customize your view in the settings);
- find pages on related topics that you could link to each other;
- notice excessive redirects.

Check your page status code

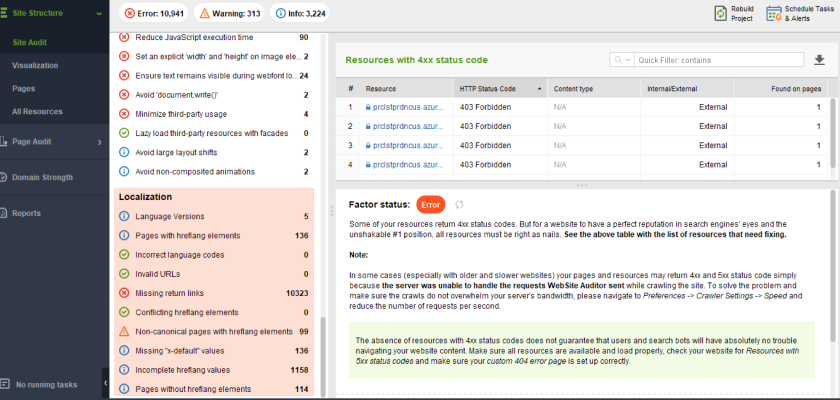

A browser sends a request to the server when you click a URL. The server responds by returning the page content. Such a successful response is classified as a 2xx HTTP status code.

Cases when a status code requires attention:

- Redirects – 3xx. Make sure you don’t have excessive redirects (redirect chains) that can thin out PageRank.
- Client errors – 4xx. Check these pages, fix broken links, and redirect users if applicable.
- Server errors – 5xx. Find out what is wrong on the server side.

You can check the status code of your pages in WebSite Auditor. Just go to Site Structure > Site Audit.
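As a rough illustration of these status classes and of how a redirect chain can be traced, consider the sketch below. It works on a hypothetical `responses` dictionary of already collected results instead of making live requests, and the function names are assumptions made for the example.

```python
def classify_status(code):
    """Map an HTTP status code to the class it belongs to."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "other"


def redirect_chain(url, responses, max_hops=10):
    """Follow redirects through `responses`, a dict {url: (status, location)}.

    Returns the list of URLs visited, starting with `url`. Unknown URLs are
    treated as 404, and the walk stops when a URL repeats (a redirect loop).
    """
    chain = [url]
    while len(chain) <= max_hops:
        status, location = responses.get(chain[-1], (404, None))
        if classify_status(status) != "redirect" or location is None:
            break
        if location in chain:  # redirect loop
            break
        chain.append(location)
    return chain


# Hypothetical crawl results: two hops where one would do.
responses = {
    "http://example.com/old": (301, "https://example.com/old"),
    "https://example.com/old": (301, "https://example.com/new"),
    "https://example.com/new": (200, None),
}

chain = redirect_chain("http://example.com/old", responses)
print(" -> ".join(chain))
print("Hops:", len(chain) - 1)
```

A chain with more than one hop is a candidate for cleanup: point the first URL straight at the final destination.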

Keep your page status code in order and change it if needed

Implement redirects correctly: use 301 for permanent redirects and 302 or 307 for temporary ones.

Change a 404 status code to 410 if you need Google to remove a page from the index faster.

You must avoid sneaky redirects, i.e., redirecting users to some content they didn’t search for. Such manipulation can badly impact your ranking.

Don’t spam or delude search engines

Besides sneaky redirects, there are other manipulative strategies which can deprive your website of ranking.

In order to avoid a sad outcome, follow Google Quality guidelines. They indicate popular misconduct that can be penalized by manual actions:

- Doorway pages
- Automatically generated or user-generated spam
- Scraped content
- Cloaking

To learn more, check the full list of prohibited techniques.

Here are some spam indicators that you should definitely steer clear of:

- Content written only for SEO purposes (websites built on it are called content farms);
- Interstitial popups, which also lead to UX issues and penalties from Google;
- Linking to websites pulled down by search engines, known as bad neighbourhoods;
- Keyword stuffing (over-optimization);
- Excessive ads above the fold;
- Abnormal link-building activities, when rocketing numbers of links can be detected;
- Using low-quality or unrelated links.

Reconsider your JavaScript usage

Excessive usage of JavaScript can slow down indexing.

Rendering JavaScript is resource-consuming. Thus, not all of the pages relying on JavaScript can be crawled by search engines.

How not to weaken your SEO if you use JavaScript:

1. Choose a server-side rendering method instead of client-side rendering. This way, JavaScript will be rendered on your server making a page load much quicker and letting search engines freely access it.

2. Don’t use fragments in a URL. Search engines don’t recognize fragments in URLs as individual URLs.

3. Assign its own canonical URL to each page of a pagination pattern.

4. Remember to mark up links with <a href> tags, as these are the only links Google follows.

5. Check that your robots.txt doesn’t block JavaScript files from crawling. It is important for Google to see a page the way a human does, so it needs access to JavaScript files.
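Points 2 and 4 can be checked mechanically. The sketch below scans a piece of HTML and separates links a crawler can follow from navigation that exists only as a fragment or a click handler. The `LinkAudit` class and the sample markup are hypothetical, and the rules are deliberately simple.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Separates crawlable <a href> links from JavaScript-only navigation."""

    def __init__(self):
        super().__init__()
        self.crawlable = []
        self.not_crawlable = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        href = (attrs.get("href") or "").strip()
        if tag == "a":
            # A real URL in href is followable; a bare fragment or a
            # javascript: pseudo-URL is not.
            if href and not href.startswith(("#", "javascript:")):
                self.crawlable.append(href)
            else:
                self.not_crawlable.append(href or "<a> without href")
        elif "onclick" in attrs:
            self.not_crawlable.append(f"<{tag}> with onclick only")


# Hypothetical navigation markup.
sample = """
<a href="/pricing">Pricing</a>
<a href="#/features">Features</a>
<a onclick="goTo('/contact')">Contact</a>
<span onclick="goTo('/about')">About</span>
"""

audit = LinkAudit()
audit.feed(sample)
print("Crawlable:", audit.crawlable)
print("Not crawlable:", audit.not_crawlable)
```

Only the first link in the sample is one a search engine can reliably follow; the other three depend on a fragment or on JavaScript executing a click handler.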

What else might be overlooked by search engines?

Rethink relying on lazy-loading

Lazy-loading is a pattern that lets a page load quickly by delaying heavy elements, e.g., images, and initializing an object only when a user reaches it on the page.

This convenient approach is becoming popular as it also contributes to better Core Web Vitals results.

But in case it doesn’t work properly and page elements don’t load, search engines won’t be able to see information loaded via lazy-loading. Thus, it can impact crawling accuracy.

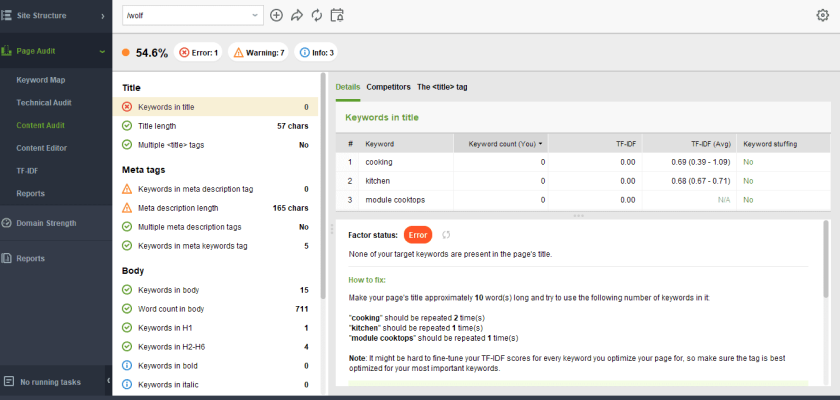

Add keywords and optimize the structure

Keyword usage is a ranking factor. Make sure you have your keywords added to these page elements:

- URL
- Title tag and meta description
- Headers
- Body text
- Image alt attributes

Check keyword presence in the essential parts of a page with WebSite Auditor. Go to Page Audit > Content Audit, and enter a page URL and a keyword to start the analysis. Then, look at the left panel where all the details are displayed.
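For a quick manual check of the same page elements, a short script can report where a keyword appears. This is a simplified sketch: `keyword_presence` and the sample page are hypothetical, matching is an exact lowercase phrase match, and everything outside the title and headers (including any script or style text) is counted as body text.

```python
from html.parser import HTMLParser


class PageElements(HTMLParser):
    """Sorts page text into title, description, headers, body, and alt."""

    HEADERS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.parts = {"title": [], "description": [], "headers": [], "body": [], "alt": []}
        self._bucket = "body"

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._bucket = "title"
        elif tag in self.HEADERS:
            self._bucket = "headers"
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.parts["description"].append(attrs.get("content") or "")
        elif tag == "img":
            self.parts["alt"].append(attrs.get("alt") or "")

    def handle_endtag(self, tag):
        if tag == "title" or tag in self.HEADERS:
            self._bucket = "body"

    def handle_data(self, data):
        self.parts[self._bucket].append(data)


def keyword_presence(keyword, url, html):
    """Return {element: True/False} for each place the keyword should appear."""
    parser = PageElements()
    parser.feed(html)
    keyword = keyword.lower()
    # In URLs, words are usually joined with hyphens.
    report = {"url": keyword.replace(" ", "-") in url.lower()}
    for element, chunks in parser.parts.items():
        text = " ".join(" ".join(chunks).split()).lower()  # normalize whitespace
        report[element] = keyword in text
    return report


# Hypothetical page for illustration.
page = """
<html><head>
<title>Technical SEO Audit Checklist</title>
<meta name="description" content="A step-by-step checklist for your site.">
</head><body>
<h1>How to run a technical SEO audit</h1>
<p>This guide walks through each step.</p>
<img src="audit.png" alt="Technical SEO audit workflow">
</body></html>
"""

report = keyword_presence("technical seo audit", "https://example.com/technical-seo-audit", page)
for element, found in report.items():
    print(f"{element}: {'yes' if found else 'missing'}")
```

Here the keyword is missing from the meta description and the body text, which are the two places to revise.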

Basic tips to help you improve keyword usage

Selecting keywords based on crucial parameters, such as search volume, difficulty, etc., contributes to your content optimization.

Create keyword groups so that each page targets its own set of keywords. This will let you avoid cannibalization when your pages compete for ranking.

Keep it moderate when using keywords in a text. If readers stumble over repeated keywords and have to get through their noise, the keyword usage isn’t healthy.

Headers hierarchy

Headers make your content well-structured for users and search engines.

Add only one H1 on a page. Make it similar to the title, but try not to repeat it word for word. Keep it within 70 characters.

Benefit from hierarchy, adding H2, H3, and H4 to your text to logically develop your content.

Add title tags and meta descriptions

Such SEO tags as title tags are also a ranking factor. A missing title jeopardizes your ranking, so adding it is a must.

Optimize your title tags by limiting them to 50-70 characters, including only crucial keywords, and adding them closer to the beginning.

Don’t repeat title tags; they must be unique for each page.

Your meta description should also be unique. Try to limit it to 120-150 characters.

Google decides which text to show on the SERP under your link, but if you create an appropriately optimized meta description, it can be picked instead.
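The length and uniqueness rules for H1s, titles, and meta descriptions lend themselves to a simple script. The sketch below encodes the limits suggested in this article (50-70 characters for titles, 120-150 for descriptions, a single H1 of up to 70 characters). The function names and sample data are hypothetical, and the limits are this article’s recommendations rather than hard rules enforced by search engines.

```python
def check_tags(title, description, h1_list):
    """Check a page's title, meta description, and H1s against the limits above."""
    issues = []

    if not title:
        issues.append("missing title")
    elif not 50 <= len(title) <= 70:
        issues.append(f"title is {len(title)} characters (target 50-70)")

    if not description:
        issues.append("missing meta description")
    elif not 120 <= len(description) <= 150:
        issues.append(f"meta description is {len(description)} characters (target 120-150)")

    if len(h1_list) != 1:
        issues.append(f"expected exactly one H1, found {len(h1_list)}")
    elif len(h1_list[0]) > 70:
        issues.append(f"H1 is {len(h1_list[0])} characters (limit 70)")

    return issues


def find_duplicates(values_by_url):
    """Group URLs that share the same title or description (case-insensitive)."""
    seen = {}
    for url, value in values_by_url.items():
        seen.setdefault(value.strip().lower(), []).append(url)
    return {value: urls for value, urls in seen.items() if len(urls) > 1}


# Hypothetical titles collected from three pages.
titles = {"/a": "Home", "/b": "home ", "/c": "About"}
print(find_duplicates(titles))
print(check_tags("Short title", "", ["First heading", "Second heading"]))
```

The duplicate check treats titles that differ only in letter case or surrounding whitespace as the same title.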

Create descriptive anchor texts

Proper anchor text passes more weight to links. Search engines can better understand what a linked page is about thanks to proper anchor texts.

Since creating weak anchors is considered poor SEO practice, here are a few reminders of how to use anchor texts correctly:

- Anchor text should be descriptive enough for a user to know what content follows the click;
- Avoid anchor text repetition on your pages;
- Keep it concise, don’t overdo with the number of words;
- Don’t settle for generic anchors, such as learn more, check here, etc.

Pay attention to nofollow status

Nofollow status was introduced to prevent PageRank from being passed through links that you don’t want to impact your page evaluation.

Thanks to it, you can nofollow links that would badly affect your rankings or even cause Google penalties.

You should nofollow the following links:

- some paid links (can also be tagged with a Sponsored attribute)
- links in blog and forum comments
- links to social networks
- links to any kind of user-generated content (or use a UGC attribute)
- links to some news websites
- widget links
- links within press releases.

Note: In some cases, it’s better to add a noindex directive to the linked page if you don’t want it to appear in search results. Google might still crawl a nofollow link, as it takes this status as a hint.

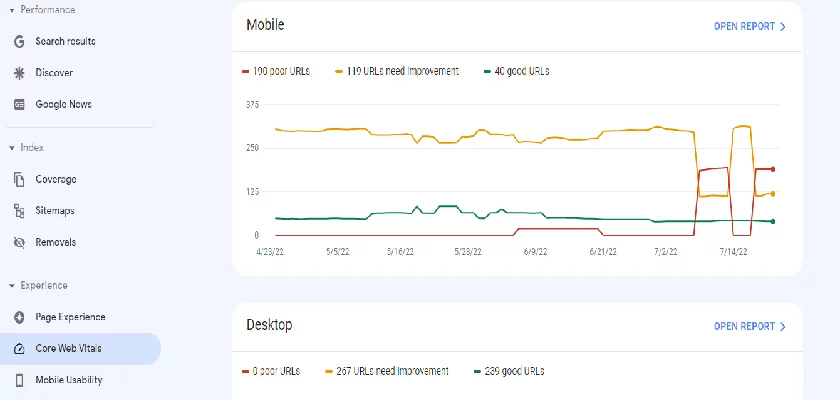

Measure your Core Web Vitals

Core Web Vitals are a set of metrics that are meant to evaluate a page in terms of user experience. They are a Google ranking factor.

They include the following key metrics:

- Largest Contentful Paint (LCP) – how quickly the largest content element in the viewport is rendered, i.e., how soon the main content of a page appears;
- Cumulative Layout Shift (CLS) – whether elements jump or move while the page loads;
- First Input Delay (FID) – how quickly a page responds during the first interaction with it (after clicking a link, button, etc.). In March 2024, Google replaced FID with Interaction to Next Paint (INP) as a Core Web Vital.

You can check them by going to Google Search Console > Experience > Core Web Vitals.

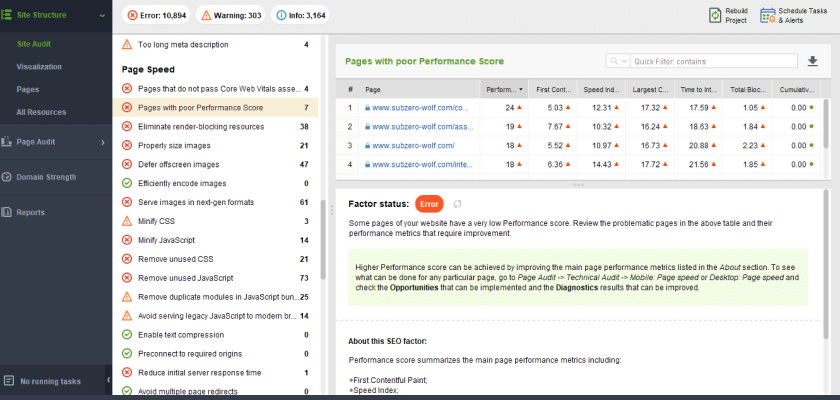

Also, you can see the results by going to WebSite Auditor > Site Structure > Site Audit > Page Speed.

Or check them for a specific page via Page Audit > Technical Audit > Mobile Friendliness and Page Speed.
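To interpret the numbers these reports give you, it helps to compare them with the thresholds Google publishes for each metric. The sketch below does that for a hypothetical set of measurements; the `rate` function and the sample values are illustrative, while the thresholds are the published “good” and “poor” boundaries (INP is included because it replaced FID as a Core Web Vital in March 2024).

```python
# (upper bound for "good", upper bound for "needs improvement") per metric.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout shift score
    "FID": (100, 300),   # milliseconds
    "INP": (200, 500),   # milliseconds
}


def rate(metric, value):
    """Rate a measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


# Hypothetical mobile measurements for one page.
measurements = {"LCP": 3.1, "CLS": 0.05, "FID": 80}

for metric, value in measurements.items():
    print(f"{metric} = {value}: {rate(metric, value)}")
```

In this example only LCP falls outside the “good” range, so image optimization and server response time would be the first things to look at.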

How do you improve your CWV results?

There are two score results: for desktop and for mobile.

It is the mobile version that needs CWV improvement the most. Due to mobile-first indexing, mobile CWV will positively influence both mobile and desktop rankings.

Boost your page speed by:

- Optimizing images;
- Reducing the size of your CSS, HTML, and JavaScript files;
- Optimizing CSS and JavaScript;
- Improving server response time (you can check your server, database, CMS, themes, plugins, and more), using Content Delivery Network (CDN) and DNS-prefetch;
- Benefiting from browser caching (set the desirable time of data caching);
- Checking your website against security requirements.

Ensure mobile-friendliness

Google introduced mobile-first indexing a few years ago. Since 2019, all newly indexed websites have had their mobile versions indexed first. Websites that were indexed before have been gradually switched to mobile-first indexing as well.

That made websites with a comprehensive and smoothly working mobile version more likely to rank high on Google.

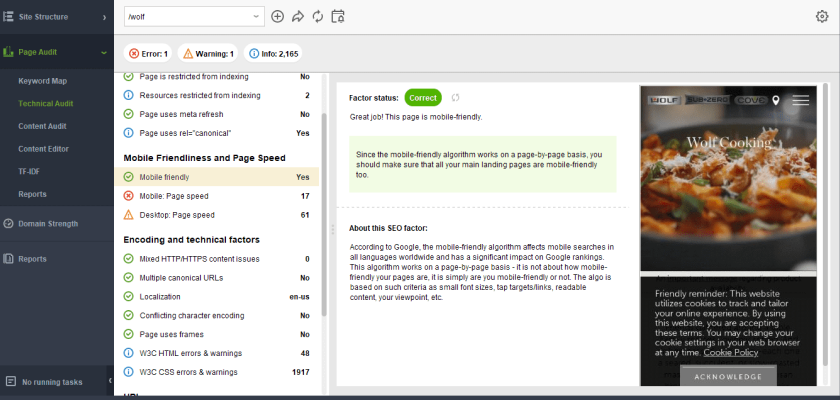

You can check whether your page is mobile-friendly by:

Passing Google’s mobile-friendly test.

Using WebSite Auditor. Go to Page Audit > Technical Audit > Mobile Friendliness and Page Speed.

How do you make your website mobile-friendly?

There are different types of design for your mobile version:

- m-Dot. It implies creating a separate subdomain (m.websitename.com) and maintaining an independent mobile website;
- Adaptive. It is time-consuming and requires creating various fixed layouts to fit different gadgets;
- Responsive. It is a preferable design as it is easier to implement and it adapts to the screen of any gadget.

Remember to match mobile links to desktop links and corresponding content, so that the mobile version mirrors your desktop website.

Also, if you keep separate mobile URLs, each mobile page should canonicalize to its corresponding desktop page.

As for hreflang tags, make sure that mobile hreflang tags correlate with mobile pages, and desktop ones – with desktop pages.

Consider factors impacting SEO indirectly or in a minor way

Some minor factors, if treated poorly, can impact the crucial factors listed above. For example, heavy images can decrease your page speed, and disregard for social meta tags can worsen your visibility. Keeping the following aspects under control can become a tie-breaker.

Sitemaps

Why is it advised to keep them in good shape?

The XML sitemap is a file in which you include all important pages of your website to make crawling and indexing easier for search engines.

However, if your website is already successfully indexed by search engines, an XML sitemap will do little for your ranking.

Reference your sitemap’s location in your robots.txt file.

It is also advised to submit a sitemap to search engines directly and wait for feedback.
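If your CMS doesn’t generate a sitemap for you, a basic one is simple to build. The sketch below produces a minimal XML sitemap following the sitemaps.org protocol, plus the line that references it from robots.txt. The `build_sitemap` helper, the page list, and the sitemap URL are hypothetical values chosen for the example.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    `last_modified` is a YYYY-MM-DD string or None.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters are escaped automatically
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True).decode("utf-8")


# Hypothetical list of important pages.
important_pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/technical-seo-audit", "2024-02-01"),
    ("https://example.com/contact", None),
]

xml_text = build_sitemap(important_pages)
print(xml_text)

# Line to add to robots.txt so that crawlers can find the sitemap.
robots_line = "Sitemap: https://example.com/sitemap.xml"
print(robots_line)
```

Save the output as sitemap.xml at the location named in the robots.txt line, and include only pages that you actually want indexed.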

An HTML sitemap is a list of your website pages accessible by users.

It makes a website user-friendly by simplifying navigation and enhancing user experience.

Schema markup

Schema markup turns your page into structured data for search engines letting them better understand the page content.

And the better search engines understand your page, the better your chances of appearing in rich snippets.

Social metadata

Remember to mark up your content with Open Graph (OG) meta tags.

Many content management systems (CMS) let you fill in various social meta tags, including OG, so that a link to your page displays well on any social network.

This can boost social sharing and link following.
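If your CMS doesn’t fill in these tags for you, they can be generated with a few lines of code. The sketch below builds the four basic Open Graph properties (title, type, URL, and image) plus a description; the `open_graph_tags` helper and the sample values are hypothetical.

```python
from html import escape


def open_graph_tags(title, url, image, description, og_type="article"):
    """Return Open Graph <meta> tags for the <head> of a page."""
    properties = {
        "og:title": title,
        "og:type": og_type,
        "og:url": url,
        "og:image": image,
        "og:description": description,
    }
    return "\n".join(
        # Escape quotes and ampersands so the values are safe inside attributes.
        f'<meta property="{name}" content="{escape(value, quote=True)}">'
        for name, value in properties.items()
    )


# Hypothetical values for illustration.
print(open_graph_tags(
    title="How to Perform an In-Depth Technical SEO Audit",
    url="https://example.com/blog/technical-seo-audit",
    image="https://example.com/images/seo-audit-cover.png",
    description="A step-by-step walkthrough of a technical SEO audit.",
))
```

The resulting tags go into the page’s `<head>`, where social networks read them when someone shares the link.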

Publication date and authorship

Google displays dates in search results if they are indicated by a publisher. If a publication date is old but the page content has been recently updated, indicate the date of updating. Fresh publications surely catch users’ attention.

Adding author information and author profiles to your website is also useful. Add authors to the About Us and Contact pages, and mention the author in your Schema markup.
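One common way to provide Schema markup, including the author and the publication and update dates, is a JSON-LD script in the page’s head. The sketch below generates such a snippet for an article; the `article_json_ld` helper, the author name, and the dates are hypothetical placeholders.

```python
import json


def article_json_ld(headline, author_name, date_published, date_modified=None):
    """Return a JSON-LD <script> block describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    if date_modified:
        # Show the update date when old content has been refreshed.
        data["dateModified"] = date_modified
    # Escape "</" so that a value can never close the script tag early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical author and dates for illustration.
snippet = article_json_ld(
    headline="How to Perform an In-Depth Technical SEO Audit",
    author_name="Jane Doe",
    date_published="2021-06-01",
    date_modified="2024-02-01",
)
print(snippet)
```

It is worth validating generated markup with a structured data testing tool before publishing, since search engines ignore markup they cannot parse.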

Images and videos optimization

By optimizing images and videos on your page you reduce the page’s load time. Also, you help search engines understand page context better. Here are the key optimization recommendations:

For images:

- Add alt attributes, captions, titles, and descriptive filenames to images;
- Define image dimensions;
- Don’t place important text on images;
- List images in a sitemap file or an image sitemap file.

For videos:

- Remember that search engines rank pages, not videos, so make sure your video is on an indexable page;
- Add an appropriate HTML tag when embedding a video to give Google a signal;
- Include videos in a sitemap;
- Provide a video Schema markup.

Wrapping it up

Begin with technical factors that impact your rankings directly.

Check against those requirements that can improve your website user experience and boost rankings indirectly.

Remember that without ensuring content quality all the steps will be useless.

Pay special attention to your domain, site structure and navigation, the PageRank passed by inbound links, site security, and compliance with quality guidelines.

Fransebas
