JavaScript SEO for Google and the AI Search Era

Your website looks perfect in a browser. The navigation is snappy, the content loads dynamically as you scroll, and the user experience feels seamless. It’s a modern masterpiece built on React or Angular.

Yet, your organic traffic is flatlining.

If you dig into your server logs, you might find a disturbing trend: Googlebot is visiting, but it isn’t staying. Or worse, it’s indexing pages that look blank.

This is the “JavaScript Paradox.” As modern web frameworks make sites more interactive and fluid for humans, they often make them invisible, heavy, or confusing for search engine crawlers.

In 2025–2026, the landscape of SEO has shifted. It is no longer just about keywords and backlinks; it is about Rendering Architecture.

With the rise of AI “Answer Engines” like SearchGPT and Perplexity—which often skip JavaScript execution entirely—the stakes have never been higher.

In this deep dive, we are going to look under the hood of the Googlebot rendering pipeline.

We will move beyond the basics and tackle the real technical challenges: The “Two-Wave” indexing trap, the hidden cost of “Hydration,” and why Server-Side Rendering (SSR) is the only future-proof strategy left.

1. The “Empty Shell” Phenomenon: What Google Actually Sees

To understand why JavaScript websites fail in search, you have to understand the difference between what you send and what the user sees.

In the old days of the web, when a browser asked for a page, the server sent back a complete HTML document containing all the text and images. This is called Server-Side Rendering (SSR), and it is still considered the gold standard.

Today, most enterprise sites use Client-Side Rendering (CSR). In this model, the server sends a tiny, lightweight HTML file. It usually looks something like this:

```html
<html>
  <head>
    <script src="main.js"></script>
  </head>
  <body>
    <div id="root"></div>
  </body>
</html>
```

That’s it. That is the “Empty Shell.”

The actual content—your articles, your product descriptions, your meta tags—lives inside that main.js file. The browser has to download that file, execute the code, and then “paint” the content into the div.

The Critical Failure Point

For a human user, this happens in milliseconds. But for a search engine crawler, this is a massive hurdle.

If Googlebot’s Web Rendering Service (WRS) runs into a timeout (often cited as a “soft timeout” of around 5 seconds, although Google does not publish a fixed limit), or if the script is blocked by robots.txt, or if the script throws an error, Googlebot may index nothing but the empty shell.

The result: Your page ranks for generic terms found in your template like “Menu,” “Login,” or “Copyright,” but it is completely invisible for the keywords that actually drive revenue.
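One rough way to check a page for the empty shell is to strip the scripts and tags out of the raw HTML and count the words that remain. The sketch below does that; the function names and the 20-word threshold are assumptions chosen for illustration, not a documented limit used by any crawler.

```javascript
// Strip scripts, styles, and tags so that only the text a
// non-rendering crawler could read remains.
function visibleTextFromHtml(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Heuristic: fewer than `minWords` words of text suggests an empty shell.
// The default of 20 is an arbitrary assumption.
function looksLikeEmptyShell(html, minWords = 20) {
  const text = visibleTextFromHtml(html);
  const wordCount = text === "" ? 0 : text.split(" ").length;
  return wordCount < minWords;
}

// The empty shell from the example above, as a single string.
const csrShell =
  '<html><head><script src="main.js"></script></head>' +
  '<body><div id="root"></div></body></html>';

console.log(looksLikeEmptyShell(csrShell)); // true
```

To try this against a live page, pass in the response body of a plain HTTP request, for example the text returned by the built-in fetch in Node 18 or later.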

2. The “Two-Wave” Indexing Reality

A common myth is that “Google renders JavaScript perfectly now.” This is dangerous optimism. While Google can render JavaScript, it doesn’t do it instantly.

Google uses a Two-Wave Indexing Model. This creates a latency gap—a period of time where your content effectively doesn’t exist.

Wave 1: The Instant Crawl

When Googlebot discovers your URL, it makes a standard HTTP request. It looks at the raw HTML response (the “Source Code”).

If your site uses SSR (Server-Side Rendering), Google sees the content immediately. It parses your links, reads your title tags, and indexes the page. Success.

If your site uses CSR (Client-Side Rendering), Google sees the “Empty Shell.” It cannot find any content. It moves to Wave 2.

Wave 2: The Rendering Queue (The Danger Zone)

Because executing JavaScript is expensive (it burns electricity and computing power), Google doesn’t render every page immediately. It places your URL into a Render Queue.

Your page sits in this holding pen until resources become available. This can take hours, or in some cases, days.

Research Insight: Empirical experiments indicate that pages reliant on JavaScript can take up to 9 times longer to be fully indexed compared to plain HTML pages.

Why This Matters

For an evergreen blog post, a 24-hour delay might be fine. But for a news publisher covering breaking events, or an e-commerce site changing prices for Black Friday, a 24-hour delay is catastrophic. During that “latency gap,” your competitors are ranking, and you are waiting in the queue.

3. Architecture Wars: Choosing the Right Strategy

The solution to the Two-Wave problem isn’t “better SEO hacks”—it’s better software architecture. As an SEO professional, you need to sit down with your engineering team and decide on a rendering strategy.

Here is the hierarchy of rendering strategies for 2026:

Rendering Strategy Cheat Sheet

| Rendering Strategy | How It Works | SEO Impact | AI Bot Visibility | Best Use Case |
| --- | --- | --- | --- | --- |
| SSR (Server-Side Rendering) | The server builds the full HTML page before sending it. | Excellent. Instant indexing (Wave 1). | ✅ High | E-commerce, News, Large Dynamic Sites. |
| SSG (Static Site Generation) | Pages are pre-built as HTML files during “build time.” | Perfect. Fastest load times (TTFB). | ✅ High | Blogs, Marketing Pages, Documentation. |
| ISR (Incremental Static Regeneration) | A hybrid. Static pages are updated in the background as traffic comes in. | Excellent. Balances freshness and speed. | ✅ High | Large sites with content that changes occasionally. |
| CSR (Client-Side Rendering) | The browser/bot builds the page using JavaScript. | Poor. Relies on the Render Queue (Wave 2). | ❌ Low | User Dashboards, Gated Content (behind login). |

The Verdict: If your SEO traffic matters, pure CSR is no longer an option. You should be pushing your development team toward Next.js (for React) or Nuxt (for Vue) to implement SSR or ISR.
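To make the difference concrete, here is a minimal sketch of what server-side rendering means at its core: the server assembles the complete HTML, content included, before it responds. The product data and function names are hypothetical, and frameworks such as Next.js or Nuxt do far more than this (routing, caching, hydration), so treat it as an illustration of the idea rather than how those frameworks are implemented.

```javascript
// Escape values before placing them in HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete document on the server, so the content
// is already present in the first HTTP response.
function renderProductPage(product) {
  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head><title>" + escapeHtml(product.name) + "</title></head>",
    "<body>",
    "<h1>" + escapeHtml(product.name) + "</h1>",
    "<p>" + escapeHtml(product.description) + "</p>",
    "</body>",
    "</html>",
  ].join("\n");
}

// Hypothetical data; a real app would read this from a database or API.
const product = { name: "Trail Shoe", description: "Lightweight & grippy." };
const html = renderProductPage(product);

console.log(html.includes("<h1>Trail Shoe</h1>")); // true
```

A crawler that never executes JavaScript still receives the heading and description here, which is the property that matters in Wave 1.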

4. The New Threat: AI Search Engines & “The Blind Spot”

This is the most critical update for 2026.

For the last decade, we optimized primarily for Googlebot. But now, we have to worry about Answer Engines like SearchGPT (OpenAI), Perplexity, and Claude.

These AI engines work differently. They are “Retrieval-Augmented Generation” (RAG) systems. They want to grab text quickly to synthesize an answer. They do not have the same massive rendering infrastructure that Google has.

The AI Blind Spot

When an AI bot like GPTBot crawls a Client-Side Rendered (CSR) page:

It fetches the initial HTML. It sees the empty shell. It usually stops there.

Most AI crawlers skip the rendering phase to save costs and speed up data ingestion. If your content requires JavaScript to load, these AI engines effectively treat your page as having no content.

Strategic Implication: Even if you rank #1 on Google because Google renders your JS eventually, you might be completely absent from AI-generated answers. To future-proof your brand for the AI era, Server-Side Rendering (SSR) is mandatory.

5. Performance as a Ranking Factor: The Cost of “Hydration”

JavaScript doesn’t just hide content; it hurts performance. And in 2026, performance is a ranking factor, specifically via Core Web Vitals.

The metric you need to watch is INP (Interaction to Next Paint). This measures responsiveness. Does the site feel “sticky” or “frozen”?
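As a point of reference, Google’s published Core Web Vitals guidance rates INP as good at 200 milliseconds or less and poor above 500 milliseconds. Those thresholds come from that guidance rather than from this article, and the helper name below is hypothetical. A minimal sketch of the classification:

```javascript
// Classify an INP measurement (in milliseconds) using the
// published Core Web Vitals thresholds: <= 200 good, > 500 poor.
function rateInp(inpMs) {
  if (inpMs <= 200) return "good";
  if (inpMs <= 500) return "needs improvement";
  return "poor";
}

console.log(rateInp(150)); // good
console.log(rateInp(2000)); // poor
```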

The Hydration Bottleneck

In modern frameworks, a process called “Hydration” occurs. This is when the JavaScript wakes up and attaches itself to the HTML to make buttons clickable.

The Problem: Hydration is heavy. It blocks the browser’s “Main Thread.”

The User Experience: The user sees the page (it looks ready), but when they tap “Menu,” nothing happens for 2 seconds because the browser is busy hydrating.

The SEO Impact: If your JavaScript bundle is too large, your INP value will climb, and a higher INP is worse. Poor Core Web Vitals can weigh on your rankings even when your content is strong.

The Fix: Ask your developers about “Selective Hydration” or “Code Splitting.” This ensures the browser only loads the JavaScript needed for the current screen, rather than the code for the entire website at once.
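Code splitting usually rests on dynamic import(): bundlers cut a separate file at each dynamic import, and the browser fetches it only when that code path runs. The sketch below shows the load-on-first-use pattern with a stub in place of a real module so that it runs on its own; lazyOnce, loadMenu, and the "./menu.js" path are hypothetical names. It illustrates code splitting only, not selective hydration.

```javascript
// Wrap a loader so the underlying module is requested only on
// first use, and the same promise is reused afterwards.
function lazyOnce(loader) {
  let pending = null;
  return function load() {
    if (pending === null) {
      pending = loader();
    }
    return pending;
  };
}

// In a real app the loader would be a dynamic import, for example:
//   const loadMenu = lazyOnce(() => import("./menu.js"));
// Here a stub stands in for the module.
let loadCount = 0;
const loadMenu = lazyOnce(() => {
  loadCount += 1;
  return Promise.resolve({ open: () => "menu opened" });
});

// Nothing is loaded until the user first needs the menu.
loadMenu().then((menu) => console.log(menu.open()));
loadMenu(); // the second call reuses the first promise
console.log(loadCount); // 1
```

The design choice is that the menu code costs nothing on the main thread until someone actually taps the menu.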

6. The “Silent Killers”: Common JavaScript SEO Traps

Beyond the architecture, there are specific coding patterns that act as traps for search engines.

A. The “Link” That Isn’t a Link

Developers love using divs and buttons for navigation because they are easy to style.
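The cost of that habit can be sketched with a simple link extractor that, like a crawler following links, only looks for href values on anchor tags. This is a simplified regex-based illustration under that assumption, not how Googlebot parses pages, and the product URL is hypothetical.

```javascript
// Collect href values from <a> tags, which is roughly what a
// link-following crawler relies on for discovery.
function extractCrawlableLinks(html) {
  const links = [];
  const anchorPattern = /<a\b[^>]*\bhref\s*=\s*["']([^"']+)["'][^>]*>/gi;
  let match;
  while ((match = anchorPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

const clickHandlerNav = '<div onclick="goToProductPage()">View Product</div>';
const anchorNav = '<a href="/products/trail-shoe">View Product</a>';

console.log(extractCrawlableLinks(clickHandlerNav)); // []
console.log(extractCrawlableLinks(anchorNav)); // [ '/products/trail-shoe' ]
```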

Bad Code: <div onclick="goToProductPage()">View Product</div>

Why it fails: Googlebot does not click buttons. It does not trigger onclick events. It looks for <a href> tags. If your navigation is built on onclick events, your site structure is a dead end to a crawler.

B. The “Soft 404” Crisis

Single Page Applications (SPAs) often fake the navigation experience. When you click a link, the page doesn’t reload; the content just swaps.

The Scenario: A user visits a URL for a product that is out of stock and deleted: example.com/products/old-shoe.

The JS Behavior: The app displays a nice “Sorry, this product is gone” component.

The Server Behavior: The server sends a 200 OK status code because the app loaded successfully.

Why this ruins SEO: Google sees a 200 OK code and thinks, “This is a valid page.” It indexes your “Sorry” page. If you have 10,000 expired products, you just flooded Google’s index with 10,000 thin, duplicate pages. This drags down how Google assesses your site’s quality and wastes your crawl budget.

The Solution: You must handle routing on the server side so that it returns a 404 Not Found status code before the application loads.
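A minimal sketch of that idea, assuming a plain Node server and a hypothetical in-memory catalog: the server decides the status code from the URL before any client-side code runs. Only product URLs are handled here, and the names resolveProductRoute and handleRequest are illustrative.

```javascript
// Hypothetical catalog; a real app would query a database.
const catalog = new Map([["trail-shoe", { name: "Trail Shoe" }]]);

// Decide the status and body on the server. Anything that is not
// a known product URL gets a real 404 in this sketch.
function resolveProductRoute(pathname) {
  const match = /^\/products\/([^/]+)$/.exec(pathname);
  const product = match ? catalog.get(match[1]) : undefined;
  if (!product) {
    return { status: 404, body: "<h1>Sorry, this product is gone</h1>" };
  }
  return { status: 200, body: "<h1>" + product.name + "</h1>" };
}

// Request handler in the shape expected by Node's http.createServer.
function handleRequest(req, res) {
  const { pathname } = new URL(req.url, "http://localhost");
  const { status, body } = resolveProductRoute(pathname);
  res.writeHead(status, { "Content-Type": "text/html; charset=utf-8" });
  res.end(body);
}

console.log(resolveProductRoute("/products/old-shoe").status); // 404
console.log(resolveProductRoute("/products/trail-shoe").status); // 200
```

The user still sees the friendly “Sorry” message, but the crawler now receives a 404 instead of a 200 and has no reason to index the page.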

C. Lazy Loading Disasters

Lazy loading images is great for speed. But if you lazy load text content or internal links using scroll events, Googlebot won’t see them.

Rule: Googlebot generally does not scroll. It resizes the viewport, but it doesn’t trigger scroll events.

Fix: Ensure that text content is loaded initially. If you have “Infinite Scroll” on a blog, you must implement Paginated URLs (History API) so Google can follow a link to ?page=2 to find older articles.

7. The Audit Toolkit: How to Diagnose Your Site

How do you know if you are suffering from these issues? You need to perform a “Diff” audit. You need to compare what the server sends vs. what the browser renders.
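The idea behind a diff audit can be sketched in a few lines: extract the text from the raw HTML and from the rendered HTML, then list the words that exist only after rendering. The function names are hypothetical, and the rendered HTML is assumed to be supplied as a string (for example, copied from the Elements panel in DevTools).

```javascript
// Reduce HTML to its readable text.
function textOf(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Words that appear only after rendering depend on JavaScript.
function wordsOnlyInRendered(rawHtml, renderedHtml) {
  const rawWords = new Set(textOf(rawHtml).toLowerCase().split(" "));
  const renderedWords = textOf(renderedHtml).toLowerCase().split(" ");
  return [...new Set(renderedWords)].filter(
    (word) => word !== "" && !rawWords.has(word)
  );
}

const rawHtml = '<body><div id="root"></div></body>';
const renderedHtml =
  '<body><div id="root"><h1>Trail Shoe</h1><p>In stock</p></div></body>';

console.log(wordsOnlyInRendered(rawHtml, renderedHtml));
// [ 'trail', 'shoe', 'in', 'stock' ]
```

An empty result means the server already sends everything the browser ends up showing. A long list means that content exists only after JavaScript runs.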

Step 1: The “View Source” Test

Right-click your page and select View Page Source.

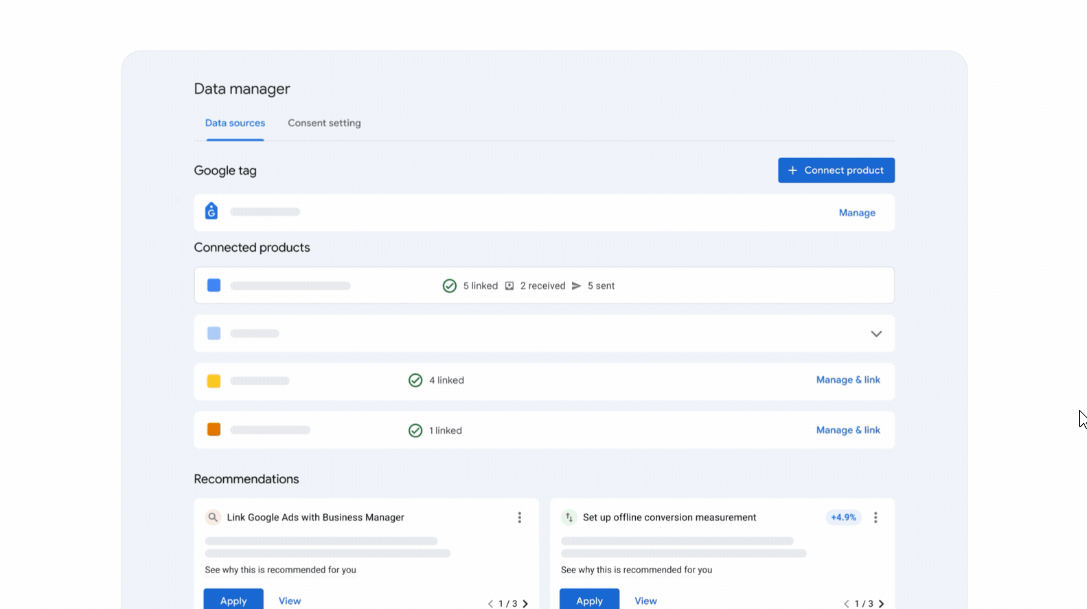

Action: Control+F (Find) your main H1 title or a key paragraph of text.

Analysis: If you can’t find your content in the Source Code, you are relying on Client-Side Rendering. You are in the “Danger Zone” of Wave 2 indexing.

Step 2: Google Search Console (Live Test)

Go to GSC and use the URL Inspection Tool. Click “Test Live URL.”

Once the test is done, click “View Tested Page” -> “Screenshot.”

Analysis: Look at the screenshot Google took. Is the content there? Is it a blank white screen? Is there a popup blocking the text? This is exactly how Google sees your page.

Step 3: Chrome DevTools (Network Waterfall)

Open Chrome DevTools (F12), go to the Network tab, and filter by “JS.”

Analysis: Look at the size of your bundles. Are you loading a 2MB JavaScript file? That is enormous. That file has to be downloaded, parsed, and executed before your page works. This is likely killing your INP score.

The optimization of JavaScript for search engines has evolved from a niche workaround to a fundamental pillar of web architecture.

In the past, we could get away with sloppy rendering because Google was the only game in town, and they were generous with their rendering resources. That era is ending.

In 2026, the challenge is three-fold:

Speed: You need SSR/SSG to bypass the render queue.

Responsiveness: You need optimized bundles to pass INP checks.

AI Visibility: You need raw HTML content to be visible to answer engines.

The Bottom Line: Don’t ask your developers to “fix the SEO tags.” Ask them to review the rendering architecture. By aligning your tech stack with the capabilities of modern crawlers, you aren’t just getting indexed; you are future-proofing your business against the next decade of search volatility.

What’s Next?

Is your site suffering from the “Empty Shell” syndrome? Stan Ventures specializes in technical SEO audits that dig deep into rendering performance.

Contact us today for a JavaScript SEO Assessment.

Ananyaa Venkat is a seasoned content specialist with over eight years of experience creating industry-focused content for diverse brands. At Stan Ventures, she blends SEO insight with strategic storytelling to shape a compelling brand voice. She has contributed to several leading SEO publications and stays attuned to evolving trends to ensure her content remains authoritative, relevant, and high-quality.