The 6 best Nvidia GPUs of all time

Nvidia sets a very high standard for what truly is a great GPU. Here are the 6 best cards they've made since the very beginning.

Nvidia sets the standard so high for its gaming graphics cards that it’s actually hard to tell the difference between an Nvidia GPU that’s merely a winner and an Nvidia GPU that’s really special.

Nvidia has long been the dominant player in the graphics card market, but the company has from time to time been put under serious pressure by its main rival AMD, which has launched several of its own iconic GPUs. Those only set Nvidia up for a major comeback, however, and sometimes that led to a real game-changing card.

It was hard to choose which Nvidia GPUs were truly worthy of being called the best of all time, but I’ve narrowed down the list to six cards that were truly important and made history.

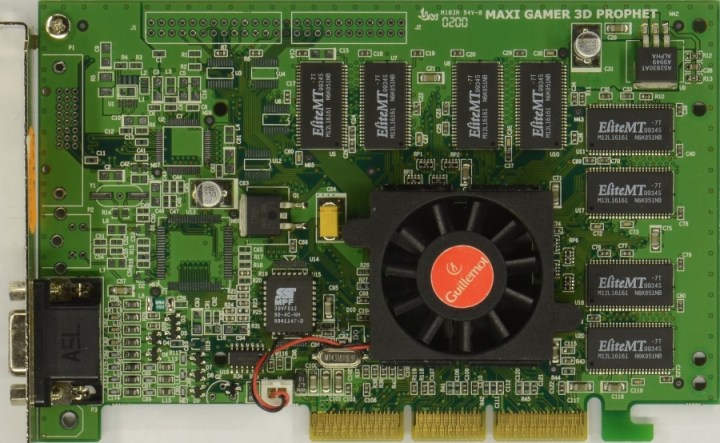

GeForce 256

The very first

VGA Museum

Although Nvidia often claims the GeForce 256 was the world’s first GPU, that’s only true if Nvidia is the only company that gets to define what a GPU is. Before GeForce there were the RIVA series of graphics cards, and there were other companies making their own competing graphics cards then, too. What Nvidia really invented was the marketing of graphics cards as GPUs, because in 1999 when the 256 came out, terms like graphics card and graphics chipset were more common.

Nvidia is right that the 256 was important, however. Before the 256, the CPU played a very important role in rendering graphics, to the point where the CPU was directly completing steps in rendering a 3D environment. However, CPUs were not very efficient at doing this, which is where the 256 came in with hardware transforming and lighting, offloading the two most CPU intensive parts of rendering onto the GPU. This is one of the primary reasons why Nvidia claims this is the first GPU.

As a product, the GeForce 256 wasn’t exactly legendary: Anandtech wasn’t super impressed by its price for the performance at the time of its release. Part of the problem was the 256’s memory, which was single data rate, or SDR. Due to other advances, SDR was becoming insufficient for GPUs of this performance level. A faster version with double data rate memory, or DDR (the same DDR as in DDR5), launched just before the end of 1999 and finally met Anandtech’s expectations for performance, but the increased price tag of the DDR version was hard to swallow.

The GeForce 256, first of its name, is certainly historical, but not because it was an amazing product. The 256 is important because it inaugurated the modern era of GPUs. The graphics card market wasn’t always a duopoly; back in the 90s, there were multiple companies competing against each other, with Nvidia being just one of them. Soon after the GeForce 256 launched, most of Nvidia’s rivals exited the market. 3dfx’s Voodoo 5 GPUs were uncompetitive and before it went bankrupt many of its technologies were bought by Nvidia; Matrox simply quit gaming GPUs altogether to focus on professional graphics.

By the end of 2000, the only other graphics company in town was ATI. When AMD acquired ATI in 2006, it brought about the modern Nvidia and AMD rivalry we all know today.

GeForce 8800 GTX

A monumental leap forward

VGA Museum

After the GeForce 256, Nvidia and ATI each tried to best the other with newer, higher-performance GPUs. In 2002, however, ATI threw down the gauntlet by launching its Radeon 9000 series, and at a die size of 200mm squared, the flagship Radeon 9800 XT was easily the largest GPU ever. Nvidia’s flagship GeForce4 Ti 4600 at 100mm squared had no hope of beating even the midrange 9700 Pro, which inflicted a crushing defeat on Nvidia. Making a GPU was no longer just about the architecture, the memory, or the drivers; in order to win, Nvidia would need to make big GPUs like ATI.

For the next four years, the size of flagship GPUs continued to increase, and by 2005 both companies had launched a GPU that was around 300mm squared. Although Nvidia had regained the upper hand during this time, ATI was never far behind and its Radeon X1000 series was fairly competitive. A GPU sized at 300mm squared was far from the limit of what Nvidia could do, however. In 2006 Nvidia released its GeForce 8 series, led by the flagship 8800 GTX. Its GPU, codenamed G80, was nearly 500mm squared and its transistor count was almost three times higher than the last GeForce flagship.

The 8800 GTX did to ATI what the Radeon 9700 Pro and the rest of the 9000 series did to Nvidia, with Anandtech describing the moment as “9700 Pro-like.” A single 8800 GTX was almost twice as fast as ATI’s top-end X1950 XTX, not to mention much more efficient. At $599, the 8800 GTX was more expensive than its predecessors, but its high level of performance and DirectX 10 support made up for it.

But this was mostly the end of the big GPU arms race that had characterized the early 2000s, for two main reasons. Firstly, 500mm squared was getting pretty close to the limit of how large a GPU could be, and even today 500mm squared is relatively big for a processor. Even if Nvidia wanted to, making a bigger GPU just wasn’t feasible. Secondly, ATI wasn’t working on its own 500mm squared GPU anyway, so Nvidia wasn’t in a rush to get an even bigger GPU to market. Nvidia had basically won the arms race by outspending ATI.

That year also saw the acquisition of ATI by AMD, which was finalized just before the 8800 GTX launched. Although ATI now had the backing of AMD, it really seemed like Nvidia had such a massive lead that Radeon wouldn’t challenge GeForce for a long time, perhaps never again.

GeForce GTX 680

Beating AMD at its own game

Nvidia

Nvidia’s next landmark release came in 2008 when it launched the GTX 200 series, starting with the GTX 280 and GTX 260. At nearly 600mm squared, the 280 was a worthy, monstrous successor to the 8800 GTX. Meanwhile, AMD and ATI signaled that they would no longer be launching high-end GPUs with big dies in order to compete, rather focusing on making smaller GPUs in a gambit known as the small die strategy. In its review, Anandtech said “Nvidia will be left all alone with top performance for the foreseeable future.” As it turned out, the next four years were pretty rough for Nvidia.

Starting with the HD 4000 series in 2008, AMD assaulted Nvidia with small GPUs that had high value and almost flagship levels of performance, and that dynamic was maintained throughout the next few generations. Nvidia’s GTX 280 wasn’t cost effective enough, then the GTX 400 series was delayed, and the 500 series was too hot and power hungry.

One of Nvidia’s traditional weaknesses was its disadvantage when it came to process, the way processors are manufactured. Nvidia was usually behind AMD, but it had finally caught up by using the 40nm node for the 400 series. AMD, however, wanted to regain the process lead quickly and decided its next generation would be on the new 28nm node, and Nvidia decided to follow suit.

AMD won the race to 28nm with its HD 7000 series, with its flagship HD 7970 putting AMD back in first place for performance. However, the GTX 680 launched just two months later, and it beat the 7970 not only in performance but also in power efficiency and even die size. As Anandtech put it, Nvidia had “landed the technical trifecta,” and that completely turned the tables on AMD. AMD did reclaim the performance crown yet again by launching the HD 7970 GHz Edition later in 2012 (notable for being the first 1GHz GPU), but having the lead in efficiency and performance per square millimeter was a good sign for Nvidia.

The back and forth battle between Nvidia and AMD was pretty exciting after how disappointing the GTX 400 and 500 series had been, and while the 680 wasn’t an 8800 GTX, it signaled Nvidia’s return to being truly competitive against AMD. Perhaps most importantly, Nvidia was no longer weighed down by its traditional process disadvantage, and that would eventually pay off in a big way.

GeForce GTX 980

Nvidia’s dominance begins

Bill Roberson/Digital Trends

Nvidia found itself in a very good spot with the GTX 600 series, and it was because of TSMC’s 28nm process. Under normal circumstances, AMD would have simply gone to TSMC’s next process in order to regain its traditional advantage, but this was no longer an option. TSMC and all other foundries in the world (except for Intel) had an extraordinary amount of difficulty progressing beyond the 28nm node. New technologies were needed in order to progress further, which meant Nvidia didn’t have to worry about AMD regaining the process lead any time soon.

Following a few years of back and forth and AMD floundering with limited funds, Nvidia launched the GTX 900 series in 2014, inaugurated by the GTX 980. Based on the new Maxwell architecture, it was an incredible improvement over the GTX 600 and 700 series despite being on the same node. The 980 was between 30% and 40% faster than the 780 while consuming less power, and it was even a smidge faster than the top-end 780 Ti. Of course, the 980 also beat the R9 290X, once again landing the trifecta of performance, power efficiency, and die size. In its review, Anandtech said the 980 came “very, very close to doing to the Radeon 290X what the GTX 680 did to Radeon HD 7970.”

AMD was incapable of responding. It didn’t have a next-generation GPU ready to launch in 2014. In fact, AMD wasn’t even working on a complete lineup of brand-new GPUs to even the score with Nvidia. AMD instead was planning on rebranding the Radeon 200 series as the Radeon 300 series, and would develop one new GPU to serve as the flagship. All of these GPUs were to launch in mid 2015, giving the entire GPU market to Nvidia for nearly a full year. Of course, Nvidia wanted to pull the rug right from under AMD and prepared a brand-new flagship.

Launching in mid 2015, the GTX 980 Ti was about 30% faster than the GTX 980, thanks to its significantly higher power consumption and larger die size at just over 600mm squared. It beat AMD’s brand-new R9 Fury X a month before it even launched. Although the Fury X wasn’t bad, it had lower performance than the 980 Ti, higher power consumption, and much less VRAM. It was a demonstration of how far ahead Nvidia was with the 900 series; while AMD was hastily trying to get the Fury X out the door, Nvidia could have launched the 980 Ti any time it wanted.

Anandtech put it pretty well: “The fact that they get so close only to be outmaneuvered by Nvidia once again makes the current situation all the more painful; it’s one thing to lose to Nvidia by feet, but to lose by inches only reminds you of just how close they got, how they almost upset Nvidia.”

Nvidia was basically a year ahead of AMD technologically, and while what they had done with the GTX 900 series was impressive, it was also a bit depressing. People wanted to see Nvidia and AMD duke it out like they had done in 2012 and 2013, but it started to look like that was all in the past. Nvidia’s next GPU would certainly reaffirm that feeling.

GeForce GTX 1080

The GPU with no competition but itself

Nvidia

In 2015, TSMC had finally completed the 16nm process, which could achieve 40% higher clock speeds than 28nm at the same power or half the power of 28nm at similar clock speeds. However, Nvidia planned to move to 16nm in 2016 when the node was more mature. Meanwhile, AMD had absolutely no plans to utilize TSMC’s 16nm but instead moved to launch new GPUs and CPUs on GlobalFoundries’s 14nm process. But don’t be fooled by the names: TSMC’s 16nm was and is better than GlobalFoundries’s 14nm. After 28nm, nomenclature for processes became based in marketing rather than scientific measurements. This meant that for the first time in modern GPU history, Nvidia had the process advantage against AMD.

The GTX 10-series launched in mid-2016, based on the new Pascal architecture and TSMC’s 16nm node. Pascal wasn’t actually very different from Maxwell, but the jump from 28nm to 16nm was massive, like Intel going from 14nm on Skylake to 10nm on Alder Lake. The GTX 1080 was the new flagship, and it’s hard to overstate how fast it was. The GTX 980 was a little faster than the GTX 780 Ti when it came out. By contrast, the GTX 1080 was over 30% faster than the GTX 980 Ti, and for $50 less, too. The die size of the 1080 was also extremely impressive, at just over 300mm squared, nearly half the size of the 980 Ti.

With the 1080 and the rest of the 10-series lineup, Nvidia effectively took the entire desktop GPU market for itself. AMD’s 300 series and the Fury X were simply no match. At the midrange, AMD launched the RX 400 series, but these were just three low to mid-range GPUs that were a throwback to the small die strategy, minus the part where Nvidia’s flagship was in striking distance like with the GTX 280 and the HD 4870. In fact, the 1080 was nearly twice as fast as the RX 480. The only GPU AMD could really beat was the mid-range GTX 1060, as the slightly cut down GTX 1070 was just a little too fast to lose to the Fury X.

AMD did eventually launch new high-end GPUs in the form of RX Vega, a full year after the 1080 came out. With much higher power consumption and the same selling price, the flagship RX Vega 64 beat the GTX 1080 by a hair but wasn’t very competitive. However, the GTX 1080 was no longer Nvidia’s flagship; with relatively small die size and a full year to prepare, Nvidia launched a brand-new flagship a whole three months before RX Vega even launched; it was a repeat of the 980 Ti. The new GTX 1080 Ti was even faster than the GTX 1080, delivering yet another 30% improvement to performance. As Anandtech put it, the 1080 Ti “further solidifie[d] Nvidia’s dominance of the high-end video card market.”

AMD’s failure to deliver a truly competitive high-end GPU meant that the 1080’s only real competition was Nvidia’s own GTX 1080 Ti. With the 1080 and the 1080 Ti, Nvidia achieved what is perhaps the most complete victory we’ve seen so far in modern GPU history. Over the past 4 years, Nvidia kept increasing its technological advantage over AMD, and it was hard to see how Nvidia could ever lose.

GeForce RTX 3080

Correcting course

After such a long and incredible streak of wins, perhaps it was inevitable that Nvidia would succumb to hubris and lose sight of what made Nvidia’s great GPUs so great. Nvidia did not follow up the GTX 10 series with yet another GPU with a stunning increase in performance, but with the infamous RTX 20 series. Perhaps in a move to cut AMD out of the GPU market, Nvidia focused on introducing hardware accelerated ray tracing and A.I. upscaling instead of delivering better performance in general. If successful, Nvidia could make AMD GPUs irrelevant until the company finally made Radeon GPUs with built-in ray tracing.

RTX 20-series was a bit of a flop. When the RTX 2080 and 2080 Ti launched in late 2018, there weren’t even any games that supported ray tracing or deep learning super sampling (DLSS). But Nvidia priced RTX 20-series cards as if those features made all the difference. At $699, the 2080 had a nonsensical price, and the 2080 Ti’s $1,199 price tag was even more insane. Nvidia wasn’t even competing with itself anymore.

The performance improvement in existing titles was extremely disappointing, too; the RTX 2080 was only 11% faster than the GTX 1080, though at least the RTX 2080 Ti was around 30% faster than the GTX 1080 Ti.

The next two years were a course correction for Nvidia. The threat from AMD was starting to become pretty serious; the company had finally regained the process advantage by moving to TSMC’s 7nm and launched the RX 5700 XT in mid-2019. Nvidia was able to head it off once again by launching new GPUs, this time the RTX 20 Super series with a focus on value, but the 5700 XT must have worried Nvidia. The RTX 2080 Ti’s die was three times as large yet the card was only 50% faster, meaning AMD was achieving much higher performance per square millimeter. If AMD made a larger GPU, it could be difficult to beat.
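To put a rough number on that gap, here is a minimal sketch of the arithmetic. It uses only the two ratios quoted above (about three times the die area, about 50% more performance) and treats the RX 5700 XT as the baseline of 1.0 for both; the function name `perf_per_area` is made up for illustration and the figures are relative, not measured benchmarks.

```python
def perf_per_area(relative_perf: float, relative_area: float) -> float:
    """Return performance per unit of die area, in relative units."""
    if relative_area <= 0:
        raise ValueError("relative_area must be positive")
    return relative_perf / relative_area


# Baseline (assumption for illustration): RX 5700 XT = 1.0 performance, 1.0 die area.
rx_5700_xt = perf_per_area(1.0, 1.0)

# RTX 2080 Ti, using the article's rough ratios: ~50% faster, ~3x the die area.
rtx_2080_ti = perf_per_area(1.5, 3.0)

# How much more performance AMD extracted from each unit of silicon.
advantage = rx_5700_xt / rtx_2080_ti
print(f"RX 5700 XT performance-per-area advantage: {advantage:.1f}x")
```

By this back-of-the-envelope measure, the 5700 XT delivered roughly twice the performance per unit of die area, which is why a scaled-up AMD chip looked like a real threat.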

Both Nvidia and AMD planned for a big showdown in 2020. Nvidia recognized AMD’s potential and pulled out all the stops: the new 8nm process from Samsung, the new Ampere architecture, and an emphasis on big GPUs. AMD meanwhile stayed on TSMC’s 7nm process but introduced the new RDNA 2 architecture and would also be launching a big GPU, its first since RX Vega in 2017. The last time both companies launched brand-new flagships within the same year was 2013, nearly a decade ago. Though the pandemic threatened to ruin the plans of both companies, neither was willing to delay the next generation, and both launched as planned.

Nvidia fired first with the RTX 30-series, led by the flagship RTX 3090, but most of the focus was on the RTX 3080 since at $699 it was far more affordable than the $1,499 3090. Instead of being a repeat of the RTX 20-series, the 3080 delivered a sizeable 30% bump in performance at 4K over the RTX 2080 Ti, though the power consumption was a little high. At lower resolutions, the performance gain of the 3080 was somewhat less, but since the 3080 was very capable at 4K, it was easy to overlook this. The 3080 also benefitted from a wider variety of games supporting ray tracing and DLSS, giving value to having an Nvidia GPU with those features.

Of course, this wouldn’t matter if the 3080 and the rest of the RTX 30-series couldn’t stand up to AMD’s new RX 6000 series, which launched two months later. At $649, the RX 6800 XT was AMD’s answer to the RTX 3080. With nearly identical performance in most games and at most resolutions, the battle between the 3080 and the 6800 XT was reminiscent of the GTX 680 and the HD 7970. Each company had its advantages and disadvantages, with AMD leading in power efficiency and performance while Nvidia had better performance in ray tracing and support for other features like A.I. upscaling.

The excitement over a new episode in the GPU war quickly died out though, because it soon became apparent that nobody could buy RTX 30 or RX 6000 cards, or any GPUs at all. The pandemic had seriously reduced supply while crypto increased demand and scalpers snatched up as many GPUs as they could. At the time of writing, the shortage has mostly ended, but most Nvidia GPUs are still selling for $100 or more over MSRP. Thankfully, higher-end GPUs like the RTX 3080 can be found closer to MSRP than lower-end 30 series cards, which keeps the 3080 a viable option.

On the whole, the RTX 3080 was a much-needed correction from Nvidia. Although the 3080 has marked the end of Nvidia’s near total domination of the desktop GPU market, it’s hard not to give the company credit for not losing to AMD. After all, the RX 6000 series is on a much better process and AMD has been extremely aggressive these past few years. And besides, it’s good to finally see a close race between Nvidia and AMD where both sides are trying really hard to win.

So what’s next?

Unlike AMD, Nvidia always keeps its cards close to its chest and rarely ever reveals information on upcoming products. We can be pretty confident the upcoming RTX 40 series will launch sometime this year, but everything else is uncertain. One of the more interesting rumors is that Nvidia will utilize TSMC’s 5nm for RTX 40 GPUs, and if this is true then that means Nvidia will have parity with AMD once again.

But I think that as long as RTX 40 isn’t another RTX 20 and provides more low-end and mid-range options than RTX 30, Nvidia should have a good enough product next generation. I would really like for it to be so good that it makes the list of best Nvidia GPUs of all time, but we’ll have to wait and see.

Koichiko
