AWS brings easy AI app development to companies

Amazon Web Services (AWS) aims to make creating AI apps easier for companies that want to add their own data to pretrained AI models.

The future of artificial intelligence is quickly becoming an out-of-the-box experience that companies can customize for their specific needs. Chat experiences that go far beyond question-and-answer, along with tools that create AI applications without months of development, could be the next step beyond new plugins and extensions.

More commonplace tools, such as ChatGPT for information and Midjourney for images, rely on public data and continual developer work to create an end product. Amazon Web Services (AWS), meanwhile, is committed to making generative AI that is not only more productive and easier to navigate but also built around, and secure for, the data of each company that deploys its tools.

Fionna Agomuoh / Digital Trends

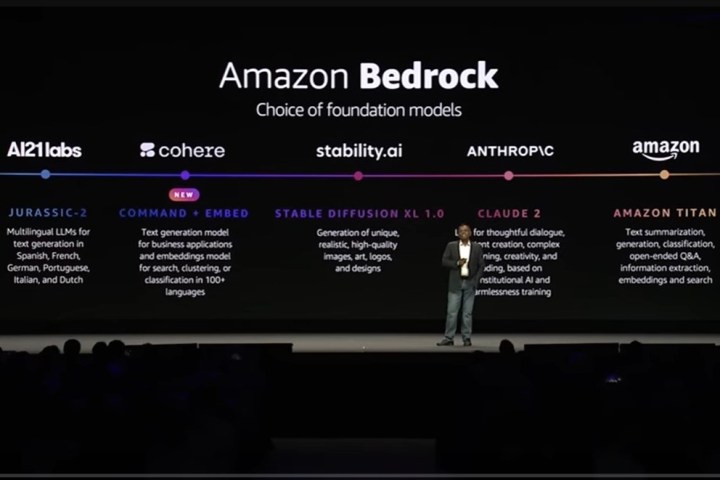

The brand is using platforms such as Amazon Bedrock to carve out a unique space for itself in the new AI market. Its flagship hub has been available since April and houses several of what it calls foundation models (FMs). These base-level models come pretrained and are offered through APIs, giving organizations the standard AI features they want. Organizations can mix and match their preferred FMs and then continue developing apps, adding their own proprietary data for their unique needs.

“As a provider, we basically train these models on a large corpus of data. Once the model is trained, there’s a cutoff point. For example, [if it’s] January of 2023, then the model doesn’t have any information after that point, but companies want data, which is private,” Atul Deo, general manager of product and engineering for Amazon Bedrock, told Digital Trends.

Companies vary, and so do the foundation models they choose, so each resulting application is shaped by the information an organization feeds its model. FMs are already base templates, so populating them only with public, open-source information can make applications repetitive across companies. AWS’ strategy gives companies the opportunity to make their apps unique by introducing their own data.

“You also want to be able to ask the model some questions and get answers, but if it can only answer questions on some stale public data, that is not very helpful. You want to be able to pass the relevant information to the model and get the relevant answers in real time. That is one of the core problems that it solves,” Deo added.
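The approach Deo describes, handing the model fresh, private information at the moment a question is asked, follows a pattern commonly called retrieval-augmented generation. The Python sketch below is a toy illustration under stated assumptions, not Bedrock’s API: the documents are hypothetical, relevance is scored by simple word overlap rather than the embeddings a production system would typically use, and the actual model call is left out. It only shows how relevant company data can be selected and placed in front of the question.

```python
import re

# Hypothetical private company documents (illustrative only, not real data).
DOCUMENTS = [
    "PTO policy: full-time staff accrue 1.5 days of paid time off per month.",
    "Shoe exchanges are free within 30 days of delivery.",
    "Insurance claims must include the policy number and incident date.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase word set, used for a crude keyword-overlap relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, docs: list[str]) -> str:
    """Prepend the retrieved context so a model could answer from current, private data."""
    context = "\n".join(retrieve(question, docs))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer using only the context."

# The resulting prompt would then be sent to whichever foundation model the company uses.
print(build_prompt("How many days of PTO do I accrue?", DOCUMENTS))
```

Because the context is assembled per request, updating a document changes the next answer without retraining the underlying model.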

Foundation models

The foundation models supported on Amazon Bedrock include Amazon Titan, as well as models from the providers Anthropic, AI21 Labs, and Stability AI, each tackling important functions within the AI space, such as text analysis, image generation, and multilingual generation. Bedrock is a continuation of the pretrained models AWS already offers on its SageMaker JumpStart platform, which has long hosted many publicly available FMs, including models from Meta AI, Hugging Face, LightOn, Databricks, and Alexa.

AWS / AWS

AWS also recently announced new Bedrock models from Cohere at its AWS Summit in late July in New York City. These include Command, which can handle summarization, copywriting, dialog, text extraction, and question-answering for business applications, and Embed, which supports search, clustering, and classification tasks in more than 100 languages.

AWS vice president of machine learning Swami Sivasubramanian said during the summit keynote that the FMs are low-cost and low-latency, are intended to be customized privately, keep data encrypted, and that customer data is not used to train the original base models.

The brand collaborates with a host of companies using Amazon Bedrock, including Chegg, Lonely Planet, Cimpress, Philips, IBM, Nexxiot, Neiman Marcus, Ryanair, Hellmann, WPS Office, Twilio, Bridgewater & Associates, Showpad, Coda, and Booking.com.

Agents for Amazon Bedrock

At the summit, AWS also introduced an auxiliary tool, Agents for Amazon Bedrock, which expands the functionality of foundation models. Aimed at companies with a multitude of use cases, Agents offers an augmented chat experience that goes beyond standard chatbot question-and-answer. It can proactively execute tasks based on the information it has been fine-tuned on.

AWS gave an example of how it works in a retail setting. Say a customer wants to exchange a pair of shoes. Interacting with Agents, the customer can explain that they want to exchange a size 8 for a size 9. Agents will ask for the order ID. Once it is entered, Agents can check the retailer’s inventory behind the scenes, tell the customer the requested size is in stock, and ask whether they would like to proceed with the exchange. Once the customer says yes, Agents confirms that the order has been updated.
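The exchange above follows a fixed sequence: look up the order, check stock, ask for confirmation, then update the order. The hypothetical Python sketch below models only those back-end steps as a plain function; in a real agent, a language model would decide when to call actions like these based on the conversation. The order data, inventory, and function names are invented for illustration and are not part of Agents for Amazon Bedrock.

```python
from dataclasses import dataclass

@dataclass
class Order:
    order_id: str
    sku: str
    size: int

# Hypothetical back-end data an agent might reach through API actions.
ORDERS = {"A123": Order("A123", "runner", 8)}
INVENTORY = {("runner", 8): 3, ("runner", 9): 2}

def check_stock(sku: str, size: int) -> bool:
    """True if at least one unit of this SKU and size is available."""
    return INVENTORY.get((sku, size), 0) > 0

def exchange_size(order_id: str, new_size: int, confirmed: bool) -> str:
    """Walk through the exchange steps and return the agent's reply."""
    order = ORDERS.get(order_id)
    if order is None:
        return "I couldn't find that order ID. Could you check it?"
    if not check_stock(order.sku, new_size):
        return f"Sorry, size {new_size} is out of stock."
    if not confirmed:
        return f"Size {new_size} is in stock. Would you like to proceed with the exchange?"
    # Simplification: the old size goes straight back into stock,
    # and one unit of the new size is reserved for the customer.
    old_key = (order.sku, order.size)
    INVENTORY[old_key] = INVENTORY.get(old_key, 0) + 1
    INVENTORY[(order.sku, new_size)] -= 1
    order.size = new_size
    return f"Done. Order {order_id} has been updated to size {new_size}."

# First turn asks for confirmation; second turn completes the exchange.
print(exchange_size("A123", 9, confirmed=False))
print(exchange_size("A123", 9, confirmed=True))
```

Keeping the confirmation as an explicit step means nothing changes in the order system until the customer says yes.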

“Traditionally to do this would be a lot of work. The old chatbots were very rigid. If you said something here and there and it’s not working — you’d say let me just talk to the human agent,” Deo said. “Now because large language models have a much richer understanding of how humans talk, they can take actions and make use of the proprietary data in a company.”

The brand also gave examples of how an insurance company can use Agents to file and organize insurance claims. Agents can even help corporate staff with tasks such as looking up the company’s PTO policy or actually scheduling that time off, using the now-familiar style of AI prompt, such as “Can you file PTO for me?”

Agents especially illustrates how foundation models let users focus on the aspects of AI that matter most to them. Rather than spending months developing and training one language model at a time, companies can spend more time refining the information in Agents that is important to their organizations and keeping it up to date.

“You can fine-tune a model with your proprietary data. As the request is being made, you want the latest and greatest,” Deo said.

As many companies continue to shift toward a more business-centered AI strategy, AWS’ goal appears to be simply helping brands and organizations get their AI-integrated apps and services up and running sooner. Cutting app development time could bring a wave of new AI apps to market, and could also give many commonly used tools much-needed updates.
