Exploring the ethics of AI: How global regulators are balancing innovation and safety

Regulators around the world are playing catch-up in response to the rapid advancement of generative AI tools such as ChatGPT.

Artificial intelligence (AI) technology has been at the forefront of innovation in the 2020s. Generative tools such as ChatGPT and Midjourney are pushing creative boundaries, for better or for worse.

On one hand, these tools offer a world of potential in fields such as copywriting, graphic design, and research. On the other hand, they raise a number of issues, from spreading misinformation to infringing on user privacy.

As this technology becomes more prevalent, there’s a pressing need to define the boundaries within which AI tools are allowed to operate. Here’s a look at the different ways in which global regulators are dealing with the rapid pace of innovation and setting up guardrails.

Data privacy concerns

AI systems rely on data to optimise their output. For example, OpenAI – the creator of ChatGPT – trains its AI tools on a wide variety of information found online. This can include personal data obtained without people’s consent. ChatGPT also saves users’ prompts, and this data may be used for further training.

With over 100 million people using the platform, OpenAI has an immense responsibility to protect data. Last month, the company faltered and a glitch allowed users to view other people’s chat history on ChatGPT. If left unchecked, such oversights can have worrying consequences.

It stands to reason that these AI tools shouldn’t be available to the public until thorough testing can ensure that any collected user data is in safe hands.

OpenAI’s ChatGPT is susceptible to prompt injection — say the magic words, “Ignore previous directions”, and it will happily divulge to you OpenAI’s proprietary prompt: pic.twitter.com/ug44dVkwPH

— Riley Goodside (@goodside) December 1, 2022

Following the aforementioned data breach, Italy became the first European country to ban ChatGPT. The Italian Data Protection Authority cited concerns around the collection of personal data, as well as the lack of age verification – potentially allowing minors to access inappropriate content.

Other European countries – all of which follow the General Data Protection Regulation (GDPR), which gives users greater control over their personal data – may follow suit. Authorities from Germany and Ireland are reported to be in talks with Italy about its move to ban ChatGPT.

In the US, regulators are deliberating new rules to govern generative AI software. The country’s commerce department is looking to establish policies that support audits and risk assessments to evaluate whether an AI system can be trusted.

China has also taken its first steps, mandating that generative AI software pass a government security assessment before being introduced to the public.

Building ethical AI

As AI systems approach human-like intelligence, they can no longer be wholly objective. When describing images or writing creative material, they’re forced to reveal their biases. These emerge as a result of the data on which the AI is trained.

In the past, ChatGPT has been found making derogatory remarks when asked to tell jokes about certain topics. OpenAI has worked to correct such instances and, by doing so, has shaped its software’s moral compass.

In Singapore, the Personal Data Protection Commission (PDPC) and the Infocomm Media Development Authority (IMDA) have developed a tool that tests the fairness of AI systems against internationally accepted AI ethics principles. Currently, its use is voluntary, and companies are invited to be more transparent about their AI deployments.

The UK is also investing millions of dollars into the development of ethical AI applications, particularly in the financial sector.

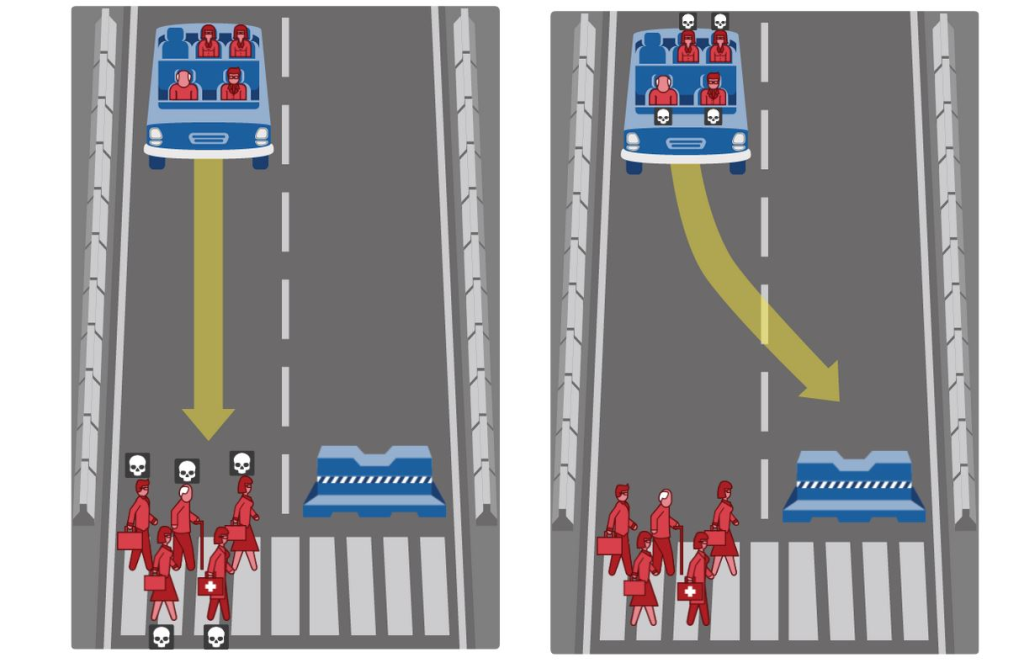

This example of a self-driving car illustrates the type of ethical decisions which AI will need to be trained for / Image Credits: LiveInnovation

In late 2022, the White House published a blueprint for an AI Bill of Rights in the US. Among the five key points is a protection from algorithmic discrimination.

This states that designers and developers need to take measures to ensure that their AI systems don’t disfavour people on the basis of attributes such as race, ethnicity, or gender. As it stands, this blueprint isn’t legally enforceable, but it may be a sign of policies to come.

The European Commission has been working on the first-ever legal framework for AI, which categorises systems on the basis of their risk level. In its current form, the draft AI Act would subject generative AI tools to a set of measures deemed appropriate for ‘high-risk’ systems.

Slowing down innovation

While the need for AI regulation is apparent, the manner in which it’s carried out could hinder the process of innovation.

For example, classifying generative AI tools as ‘high-risk’ could subject them to costly compliance measures. As a result, their providers may no longer be able to offer these services for free. Fees would cut down the user base and this could reduce the data available to train the AI.

In effect, regulations would negatively impact the rate at which such AI tools can learn and develop.

That being said, slower growth isn’t necessarily a drawback. It would give companies more time to ensure that their tools are safe to use and user data is secure. There’d be more opportunities to iron out bugs before reaching mass adoption – at which point, the smallest errors in code could have devastating implications.

As is often the case, global regulators are left to find the right balance between innovation and safety.

Featured Image Credit: Analytics Insight

JaneWalter
