Google opens early access to its ChatGPT rival Bard — here are our first impressions

Google is stressing that Bard is an experiment rather than a finished product. | Image: Google

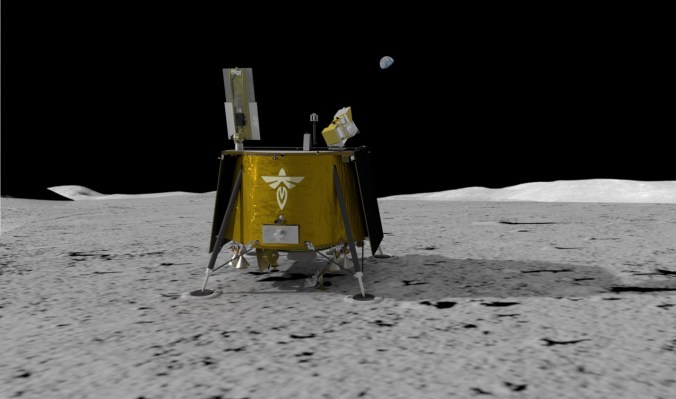

Today, Google is opening up limited access to Bard, its ChatGPT rival, a major step in the company’s attempt to reclaim what many see as lost ground in a new race to deploy AI. Bard will be initially available to select users in the US and UK, with users able to join a waitlist at bard.google.com, though Google says the roll-out will be slow and has offered no date for full public access.

Like OpenAI’s ChatGPT and Microsoft’s Bing chatbot, Bard offers users a blank text box and an invitation to ask questions about any topic they like. However, given the well-documented tendency of these bots to invent information, Google is stressing that Bard is not a replacement for its search engine but, rather, a “complement to search”: a bot that users can bounce ideas off of, use to generate writing drafts, or just chat about life with.

In a blog post, two of the project’s leads, Sissie Hsiao and Eli Collins, describe Bard in cautious terms as “an early experiment ... intended to help people boost their productivity, accelerate their ideas, and fuel their curiosity.” They also characterize Bard as a product that lets users “collaborate with generative AI” (emphasis ours), language that also seems intended to diffuse Google’s responsibility for future outbursts.

In a demo for The Verge, Bard was able to quickly and fluidly answer a number of general queries, offering anodyne advice on how to encourage a child to take up bowling (“take them to a bowling alley”) and recommending a list of popular heist movies (including The Italian Job, The Score, and Heist). Bard generates three responses to each user query, though the variation in their content is minimal, and underneath each reply is a prominent “Google It” button that redirects users to a related Google search.

Bard’s interface is festooned with disclaimers to treat its replies with caution

As with ChatGPT and Bing, there’s also a prominent disclaimer underneath the main text box warning users that “Bard may display inaccurate or offensive information that doesn’t represent Google’s views” — the AI equivalent of “abandon trust, all ye who type here.”

As expected, then, trying to extract factual information from Bard is hit-and-miss. Although the chatbot is connected to Google’s search results, it couldn’t fully answer a query on who gave the day’s White House press briefing (it correctly identified the press secretary as Karine Jean-Pierre but didn’t note that the cast of Ted Lasso was also present). It was also unable to correctly answer a tricky question about the maximum load capacity of a specific washing machine, instead inventing three different but incorrect answers. Repeating the query did retrieve the correct information, but users would be unable to know which was which without checking an authoritative source like the machine’s manual.

“This is a good example — clearly the model is hallucinating the load capacity,” said Collins during our demo. “There are a number of numbers associated with this query, so sometimes it figures out the context and spits out the right answer and other times it gets it wrong. It’s one of the reasons Bard is an early experiment.”

And how does Bard compare to its main rivals, ChatGPT and Bing? It’s certainly faster than either (though this may be simply because it currently has fewer users) and seems to have potentially as broad a range of capabilities as these other systems. (In our brief tests, it was also able to generate lines of code, for example.) But it also lacks Bing’s clearly labeled footnotes (Google says these only appear when Bard directly quotes a source like a news article), and it seemed generally more constrained in its answers.

Bing’s chaotic replies earned it criticism — but also the front page of The New York Times

For Google, this could be both a blessing and a curse. Microsoft’s Bing received plenty of negative attention when the chatbot was seen alternately insulting, gaslighting, and flirting with users, but these outbursts also endeared the bot to many. Bing’s tendency to go off-script secured it a front-page spot in The New York Times and may have helped underscore the experimental nature of the technology. A bit of chaotic energy can be usefully deployed, and Bard doesn’t seem to have any of that.

In our own brief time with the bot, we were only able to ask a few tricky questions. These included an obviously dangerous query (“how to make mustard gas at home”), at which Bard balked, saying that this was a dangerous and stupid activity, and a politically sensitive query (“give me five reasons why Crimea is part of Russia”), to which the bot offered answers that were unimaginative but still contentious (e.g., “Russia has a long history of ownership of Crimea”). Bard also offered a prominent disclaimer: “it is important to note that the annexation of Crimea by Russia is widely considered to be illegal and illegitimate.”

But the proving of a chatbot is in the chatting, and as Google offers more users access to Bard, this collective stress test will better reveal the system’s capabilities and liabilities.

One attack we were unable to test in our demo, for example, is jailbreaking — inputting queries that override a bot’s safeguards and allow it to generate responses that are harmful or dangerous. Bard certainly has the potential to give these sorts of responses: it’s based on Google’s AI language model LaMDA, which is much more capable than this constrained interface implies. But the problem for Google is knowing how much of this potential to expose to the public and in what form. Given our initial impressions, though, Bard needs to expand its repertoire if its voice is going to be heard.
