How the head of Facebook plans to compete with TikTok and win back Gen Z

Photo illustration by Will Joel / The Verge

Facebook is figuring out what a new generation wants from social media

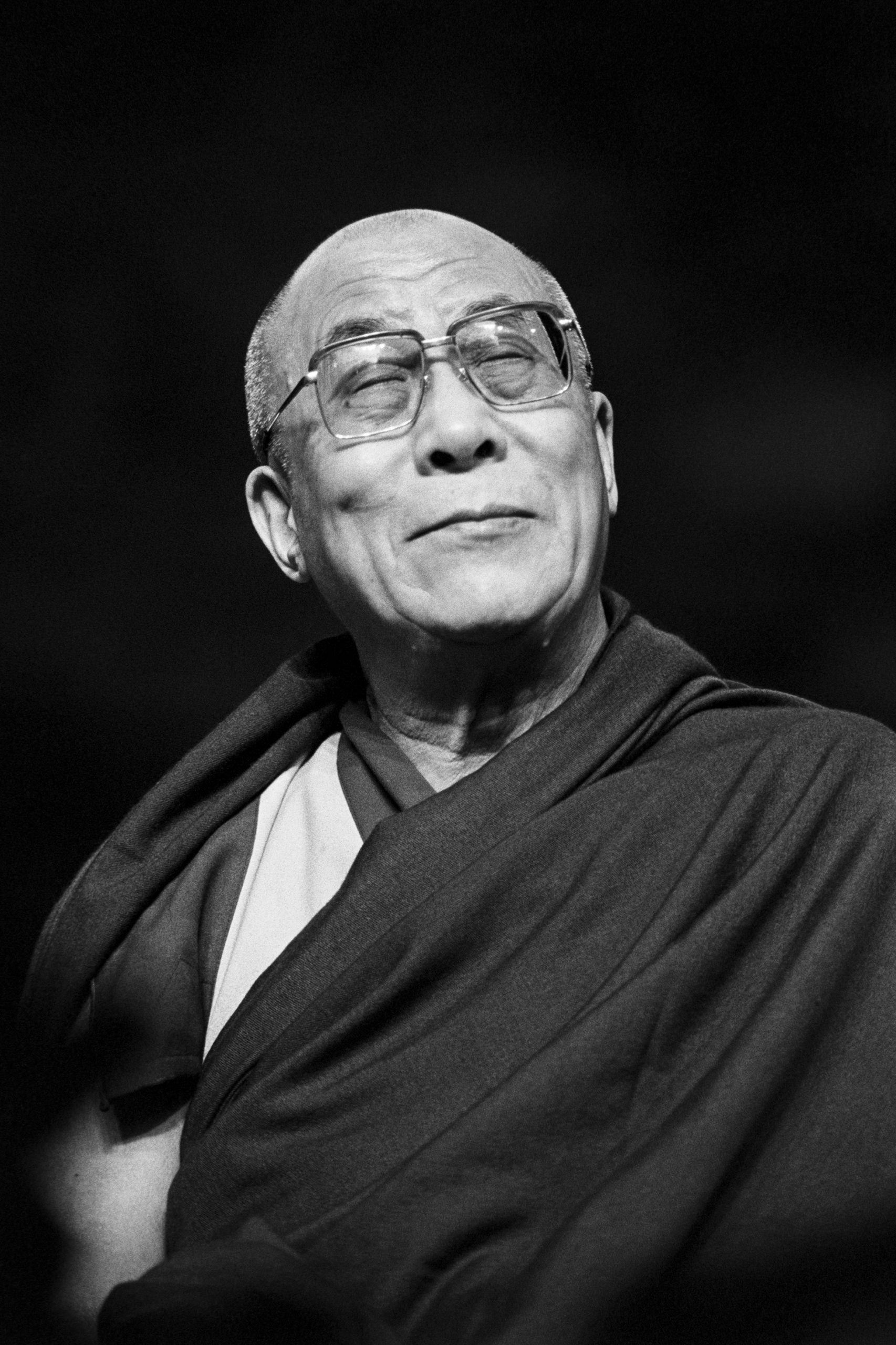

In this special episode of Decoder, Verge deputy editor Alex Heath and Recode senior reporter Shirin Ghaffary talk to Meta’s Tom Alison, who runs the Facebook app.

Alex and Shirin are the co-hosts of the newest season of Vox Media’s podcast Land of the Giants. This season is about Facebook and Meta, and they have been reporting on Meta and working on Land of the Giants for many months — the season finale comes out tomorrow. Along the way, they interviewed Tom about Facebook and the News Feed.

Facebook has a lot of challenges, but it seems like its biggest problem is TikTok, which is capturing more and more of young people’s attention in particular. Facebook spent years building out a social graph about friends and family that, it turns out, is less interesting than just being shown videos that TikTok thinks you might like. So, now, Alison is leading an effort to make both the Facebook and Instagram feeds more like TikTok.

We talk a lot on Decoder about the tradeoffs leaders have to make, and that’s one big example of a tradeoff made by the executive who has led multiple eras of the News Feed, really since the beginning. It’s also an interesting window into how they make decisions at one of the world’s most talked-about companies.

This was Alison’s first big interview since taking over as the head of Facebook at Meta, and it covers a lot of ground. So much ground, actually, that Alex and Shirin realized it would be valuable for people to hear the whole chat. Parts of it appeared in their fifth episode about the future of Facebook and the Feed. They recorded this interview in late July, right after Facebook announced some big changes to the Feed, which they talked about.

Okay, Tom Alison, head of Facebook. Here we go.

Alex Heath: We are talking to Tom Alison, the head of Facebook, on the day the discovery engine/Feeds tab was announced. Can you tell us about that announcement and the evolution of what used to be called the News Feed?

Tom Alison: The Feeds tab is going to allow you to see all of the content from your friends, Pages, Groups, and Favorites in chronological order. It’s almost like a throwback to old-school Facebook, which I think is very cool. We know that a lot of people come to Facebook thinking, “I want to see what’s up with my friends,” and this is going to make that even easier.

We have also renamed the tab you see when you open the app to Home. This signals that it is going to be home to a lot of the different content you see on Facebook. It will still include the content you know, but it will now also include content we think might be interesting to you, which you might share or discuss with your Groups or friends. This content is increasingly powered by AI, which is getting very good at identifying, out of all the great content across Facebook, the things that are going to be interesting to you.

We are setting the stage for this next evolution of Facebook. A lot of it is coming from our belief system that, ultimately, Facebook is a tool to allow you to express yourself and connect with other people. This is an extension of that belief system, but within the context of technological innovation that is happening.

It is easier than ever for people to express themselves. We have higher-bandwidth phones and connections to be able to express ourselves through multimedia and video, and we have more sophisticated AI that can look at the billions of pieces of content that are being created across Facebook — and Meta more broadly — and deliver the piece that might be right for you in that moment.

I think it’s an exciting time for Facebook, but I see it as an evolution of what we have always been trying to do. When we see a new technical wave of innovation, we always ask, “How do we build that around people?” That is still going to be core to what we do.

AH: Was TikTok the consumer product that showed you guys this model working at scale? Was there anything else that led you to this? Why go this way now?

TA: I will give you a little bit of the backstory. You might have read or heard a little over a year ago what Mark said on one of the earnings calls, which was, “We’re going to start looking at the needs of young adults and prioritizing the needs of young adults.”

Before I led the Facebook app, I was immersing myself in all of the young adult research and working with the team. We saw that there was a big generational trend happening in how people want to use social media. Just as background, the way we define “young adults” is anybody in the age range of 18 to 29 years old. Now Facebook itself is around 18 years old, so we are talking about a generation that grew up with social media, using it and learning how to integrate it into their lives in different ways than I did.

Spoiler alert: I am not a young adult. My own usage comes from a different era. As we were deeply immersing ourselves in how the next generation was using and wanting to use social media, we saw that there were lots of changes in how people wanted to share and connect with their friends. This incoming generation is curious; they are finding and discovering their way in life. Recommendations, meaning things that appeal to their interests and help them shape where they want to go, who they want to be, and what they want to discuss with their friends, are a much bigger part of their social media experience than I think they were of mine in an earlier era. A lot of this, in fact most of it, was informed by our study of the fact that we are entering almost the third decade of Facebook and social media. There is a big change happening.

Now, I talked to you about this last time. Seeing what TikTok did was certainly illuminating. We saw this powerful format, short-form video, and we saw how people were using it to express themselves. For me, very viscerally, too, there was this recognition that, “Wow, this format where you have somebody speaking directly to you, in this very personality-driven way, feels more like you are connected to these creators. They are authentic, they are expressing their personality, you can learn from them.” We can riff more on that, but it was very exciting. I think TikTok did a nice job showing folks what that could be.

Integrating formats into the Facebook experience, and integrating tools for self-expression and to help people share and discuss what they see from other people, has always been core to Facebook’s identity and evolution as a service. It made a lot of sense for us to integrate short-form video and actually go bigger on recommendations.

We had been doing recommendations since before TikTok. We have always had some recommendations in Feed; we had a whole Watch tab, which is video recommendations, and we had a Groups tab, which had a lot of Groups recommendations. I think the big mental leap that we have finally made is like, “Hey, this distinction between connected content and unconnected content in Feed might actually be more of an artificial construct that we have created.”

What the next generation is looking for is actually a blend of who speaks to them and how. The distinction between “This is a creator that I am connected to that resonates with me” and “This is one that is getting recommended to me but I am not connected to” is not, I think, as strong a mental model as we believed it was. So we embraced the value of being able to show you something that is going to be compelling to you, regardless of whether you are connected or not.

We went through a lot of discussions with Mark. I mean, he is the creator of Facebook, so it has been great working with him over the last six to eight months on this. We had multi-hour sessions with him and with many people who have led or been involved with Facebook in the past, and together we shaped this updated vision for how the Facebook app is going to respond to the next generation of people who are going to use it.

AH: There had to be a moment for some people, like, “Are we killing our sacred cow, the thing we have hung our company’s identity on for 18 years, in terms of the social graph and the feed?” We have been hearing that refrain over and over in tape from the last 18 years as we have been doing this series. Was there a moment where people were like, “No, this is us, this is who we are. We can’t subject something this deep to a trend right now.”

TA: There are a few things I would like to share about that. One is that this kind of resistance has always accompanied a big inflection point for Facebook. Think back to 14 years ago. It was like, “Oh, Facebook is just for Harvard students.” “No, actually it’s for other Ivy League colleges.” “No, actually it’s for every college.” Then it was like, “Okay. Facebook is just for students. You can’t possibly open it up to people who aren’t students.” “No, actually you can.”

Again, the tools are meant to connect people, but there have always been those resistance points. I remember too, when I first joined Facebook in 2010, it was pre-mobile and there was this thing of, “We always show ads in the right-hand column of the website, we can’t show ads in Feed.”

Then it’s like, “No, you can show ads in Feed if you make them really good.” Similarly, you can show recommendations in Feed if you make them really good. I think you have always seen that Facebook has evolved on this common arc of, “We are about giving people the power to share and express themselves.” We are about connecting the most people that we can to that. That is the promise of social media. Is it uncomfortable when we go through these things? Is there a lot of discussion internally about, “Are we losing our legacy?” Yeah, there is a lot of that, but that’s what makes it exciting.

My take on this, and the thing I tell my team, is, “At the end of the day, Facebook is going to be deeply rooted in social connection.” That is the value that we provide. We have a mantra on the Feed team that we call “connection through content.” There is this recognition that content, whether it’s from your friend network or from a connected group, can lead to connection because that is what you discuss, that is what you might share, and that is what might start a message thread with another person. What we are really doing is saying, “You know what? Recommendations can do that too.”

I have found this great creator on Facebook named Derek, who has an account called Over The Fire Cooking. He barbecues food and I like that. I saw that and I’m like, “Oh, this is really cool,” so I shared that with my friend who likes barbecuing. It started a conversation and it deepened this shared interest that we have.

I have been talking to the teams internally about how this is a way that people ultimately want to connect with others. It is feeling this connection with a creator, that they might not physically know but they emotionally resonate with. Or it is sharing a piece of content with a friend that you find via the discovery engine, and using that to springboard into a conversation about an interest that you share and deepening that.

It is taking some time because we have built up this, “Feed is connected content,” mentality, but as people have seen the ways that this can facilitate social relationships and strengthen them, they are like, “Oh, okay. I get it.” We are at the beginning of the journey too, and there are plenty more features that we need to implement into Facebook to make this vision of the discovery engine a full reality. That is where we are going over the next year.

AH: I hear how that does connect to where you guys have gone. I think for a lot of people, the reason it feels abrupt or that people have strong feelings about it is that TikTok is seen as entertainment. It’s not a very social app. There is messaging, but I think most people passively sit there and consume. Maybe they share a private message, but there is not a lot of on-app messaging happening, at least that we know of.

You guys had spent so much time working up to this change, prioritizing it in the MSI era, like, “We want people with their close ties in Feed, commenting, sharing, having these long comment threads in Feed. We want Feed to be the place where people communicate with their friends.” This feels like a high-level, radical departure from that. Can you touch on both those themes? People think of this push you are doing as more passive and more entertainment, and also that departure from MSI.

TA: I don’t view it as a departure. Let me talk a little bit about MSI, because I was working in the Facebook app when we did that. I would love to talk about how that came about, and maybe how what we are doing is an extension of that.

First off, MSI stands for Meaningful Social Interactions. It is essentially an approach towards ranking and prioritizing content we show in News Feed that we think is going to bring you closer to the people that you care about — whether that is seeing friend posts or commenting on and sharing things. We developed that as a response to what we were previously doing in Facebook around 2015 and 2016.

Stepping back, every ranking system has what you would call an objective function. It is the thing that the system is trying to optimize for to provide the value that we think corresponds with what people want from it.

Prior to MSI in 2017, we and a lot of other companies, such as YouTube, were looking at time spent. The idea was that if you chose to spend your time with Facebook, we were providing you with a lot of value. This was when video was starting to make its way more prominently onto mobile devices and people were looking at links, among other things. The downside of optimizing or goaling on time spent was that, as the ranking system decided what to show you, it started to say, “Hey, Tom is more likely to spend more time on this video than on this post from his friend, so we will prioritize that higher in Feed.”

What we learned was that this is not fundamentally what people come to Facebook for. We got a lot of, “Hey, where is my friend content? These videos are cool and I watched them, but I miss seeing content from my friends. I come to Facebook to interact.” We ended up tilting more towards, “No, we are going to prioritize the content that people say helps them create these meaningful social interactions.” That is, things that lead to conversations, things that help you connect with your friends, and things of that nature. What you saw was us incorporating that.
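To make the idea of an objective function concrete, here is a minimal Python sketch, not Meta’s actual ranking code, showing how the same two candidate posts can swap places when the objective changes from predicted time spent to a weighted score of predicted social interactions. The `Candidate` fields, the interaction weights, and the friend boost are all hypothetical values chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical model predictions for one feed item (illustrative only).
    name: str
    predicted_seconds: float  # expected time the viewer spends on the item
    p_comment: float          # predicted probability the viewer comments
    p_share: float            # predicted probability the viewer shares
    from_friend: bool         # whether the author is in the viewer's friend graph


def time_spent_objective(c: Candidate) -> float:
    """Score an item purely by expected time spent."""
    return c.predicted_seconds


def interaction_objective(c: Candidate) -> float:
    """Score by weighted predicted interactions; the weights are made up."""
    score = 5.0 * c.p_comment + 3.0 * c.p_share
    # Hypothetical boost for content from close ties.
    return score * (2.0 if c.from_friend else 1.0)


def rank(candidates: list[Candidate], objective) -> list[str]:
    """Order candidates from highest to lowest objective score."""
    return [c.name for c in sorted(candidates, key=objective, reverse=True)]


feed = [
    Candidate("viral video", predicted_seconds=45.0, p_comment=0.01,
              p_share=0.02, from_friend=False),
    Candidate("friend's photo", predicted_seconds=6.0, p_comment=0.20,
              p_share=0.05, from_friend=True),
]

print(rank(feed, time_spent_objective))
print(rank(feed, interaction_objective))
```

Under the time-spent objective the video ranks first; under the interaction objective the friend’s photo does, which mirrors the tradeoff Alison describes between watch time and friend content.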

AH: That practically meant weighting things in Feed like comments between connected graphs, right?

TA: That’s right, it was weighting things. We looked at a whole bunch of factors from research to understand what was creating meaningful social interactions. Mark even said this during the announcement: we ended up cutting back a large amount of video watch time as we prioritized more of these social interactions. MSI evolved over time. We were constantly trying to figure out what social interactions are meaningful to people. How are they evolving? What can we use as a proxy for what people want in their relationships?

To tie this back to the discovery engine, there is a shift when we look at the social interactions that young adults value. They are much more likely to share to Stories than to Feed. They want to share more everyday moments that are depressurized, that might not stick on their timeline, and that are visual and expressive. When you think about a meaningful social media interaction for them, it is much more likely to be posting a Story than it is for me, because I grew up using the feed, and that is what I still do. You’re seeing these generational differences in what constitutes a meaningful social interaction.

The other thing that we saw was that folks often prefer to have conversations with friends over Messenger. The Story is the conversation-starter, but rather than commenting on a Feed post, the interactions and the relationship-building happen in a more private Messenger thread, whether that is one-on-one or with a group.

That is why when you see a visual Story on Facebook you can start a messaging thread on it. When we put the lens on that next generation, we said, “The way they are having meaningful social interactions is changing, so we need to adapt our product to it.”

The other thing that we saw with young adults was how much they value creator content that speaks to them and their interests. It is important to note that even though this is what you would call public content or video content, people find that connection with a creator quite meaningful.

We all experience this to some degree. I was listening to a podcast with Lex Fridman and Susan Cain, and they were remarking how they feel like they have a relationship with some of the podcasters and musicians without ever even meeting them. We were starting to see how this creator-driven content speaks to people. To them it feels quite meaningful, even though that might not be an explicit connection between two friends.

I would say that the way we are trying to orient the future of Feed is still very much in the spirit of, “How do we show you things that you are going to share with your friends and connect with them?” We recognize a) it’s changing for people, and b) some of this public content — because of the medium and because of the formats behind it — is becoming much more relatable and personal than it was even a few years ago.

AH: I think the difference is that your inventory before this was almost entirely social graph inventory, and now you are going to start inserting a bunch of stuff that is not in it. People are seeing that now on Instagram, because they are ahead of you in terms of rolling out unconnected content in feed. As I’m sure you have seen, people have very strong reactions to that. They get on their Instagram and go, “What in the world? This is not who I follow.” I think you are going to start getting that on Facebook, and I’m sure you are prepared for that. You used to run engineering for the News Feed back in the MSI era, right?

TA: Yes, I did.

AH: Can you explain how that worked? Before, you were sorting based on who your friends were and the pages you followed. Now how do you decide what someone sees? How do you decide quality in an environment like this?

TA: When we rank your connected feed, we are looking at a lot of signals. We are looking at a lot of different aspects of the content itself and your previous behavior to help us understand, “Is this something that you would like to see at the top of your feed or not?” We look at your interaction history with a friend: how often you have commented on their posts, how often you have sent them a message, how often you have liked things, and how often you have shared them.

We also look at qualities of the content itself, and obviously there are integrity and community standards things that we look at. It is a host of different things to help us understand, “Is this something that you would want to see right now towards the top of your feed?”

It is actually similar to recommendations in some ways. We can take a look at many things. Have you interacted with this topic or this type of content before? Have your friends done that? You might not be connected to this creator, but have you liked their posts before? Have you commented on their stuff? Have you participated in a group similar to the public group whose post we are recommending? We look at a lot of characteristics to understand if this is something that you would be interested in. We try to understand the content behind this.
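Alison describes ranking for both connected and recommended content as combining many signals about the viewer and the content. One common way to combine such signals, sketched here in Python under clearly hypothetical assumptions, is a logistic model that turns a weighted sum of features into a probability of interest. The feature names, weights, and bias below are invented for illustration and are not Facebook’s; a production system would learn them from data and use far more signals.

```python
import math

# Hypothetical signal weights; a real system would learn these from data.
WEIGHTS = {
    "topic_affinity": 2.0,            # past engagement with this topic (0 to 1)
    "friends_engaged": 1.0,           # 1 if friends engaged with the item
    "liked_creator_before": 1.5,      # 1 if the viewer liked this creator's posts
    "commented_creator_before": 1.5,  # 1 if the viewer commented on this creator
    "similar_group_member": 0.8,      # 1 if the viewer is in a similar group
}
# Hypothetical baseline: most unconnected items start out unlikely to interest.
BIAS = -3.0


def interest_probability(features: dict[str, float]) -> float:
    """Combine known signals into a probability with a logistic (sigmoid) function."""
    z = BIAS + sum(
        WEIGHTS[name] * value for name, value in features.items() if name in WEIGHTS
    )
    return 1.0 / (1.0 + math.exp(-z))


# Two hypothetical unconnected candidates for the same viewer.
cooking_video = {
    "topic_affinity": 0.9,
    "friends_engaged": 1.0,
    "liked_creator_before": 1.0,
}
unrelated_post = {"topic_affinity": 0.1}

print(round(interest_probability(cooking_video), 3))
print(round(interest_probability(unrelated_post), 3))
```

The same scoring shape works whether or not the viewer follows the creator, which is one way to read Alison’s point that connected and unconnected content need not be treated as separate categories.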

One of the things our team has been working on that I am actually excited about — and you’re going to see this more and more throughout the product — is that periodically we ask people on a recommendation, “Do you want to see more of this or do you want to see less of this?” That is our way of asking you, “Help us, tell us what you’re into.”

The reason that I am excited about that, especially going back to the MSI conversation, is that we always struggled with the fact that one of the meaningful social interactions that a lot of people have when they see something on Facebook is that they might not like it. They might not comment on it. But they will go and talk to a friend about it. I am regularly saying to my wife, “Oh, I saw this thing on Facebook today,” and we talk about it. Facebook doesn’t know that loop happened.

Now, when Facebook shows me something and it says, “Hey Tom, do you want to see more or see less of it?” I can say, “Hey, I want to see more of it.” I’m not necessarily sharing this with Facebook, but this is the type of thing that helps me have an interesting conversation with my wife. I think incorporating a lot of those user-preference-type signals is an exciting area for me and a lot of our teams. I think it is going to help make sure that the recommendations we show you are relevant.
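The “see more / see less” prompt is an explicit preference signal that complements the implicit ones. A minimal sketch of how such feedback could nudge future rankings, assuming a simple per-topic multiplier, might look like the Python below. The class, the topic labels, the step size, and the bounds are all hypothetical; nothing in the interview describes how Facebook actually stores or applies this feedback.

```python
class PreferenceAdjuster:
    """Hypothetical per-topic store of explicit "see more" / "see less" feedback."""

    def __init__(self, step: float = 0.25, floor: float = 0.1, ceiling: float = 3.0):
        self.step = step        # how far one tap moves a topic's multiplier
        self.floor = floor      # never suppress a topic completely
        self.ceiling = ceiling  # never let one topic grow without bound
        self.multipliers: dict[str, float] = {}

    def record(self, topic: str, see_more: bool) -> None:
        """Nudge a topic's multiplier up or down, clamped to [floor, ceiling]."""
        current = self.multipliers.get(topic, 1.0)
        updated = current + (self.step if see_more else -self.step)
        self.multipliers[topic] = min(self.ceiling, max(self.floor, updated))

    def adjust(self, topic: str, base_score: float) -> float:
        """Scale a ranking score; topics without feedback keep a multiplier of 1."""
        return base_score * self.multipliers.get(topic, 1.0)


prefs = PreferenceAdjuster()
prefs.record("barbecue", see_more=True)
prefs.record("celebrity gossip", see_more=False)
print(prefs.adjust("barbecue", 0.4))
print(prefs.adjust("celebrity gossip", 0.6))
print(prefs.adjust("gardening", 0.5))
```

Clamping the multiplier is a deliberate choice in this sketch: a few taps shift the ranking noticeably, but no single topic can be driven to zero or allowed to crowd out everything else.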

Do people like recommendations? Do they not? I think we need to make the quality of them much better. This is why you have heard about some of the changes we have made to AI. In my team, we have pulled together some of the best and brightest AI engineers across the company to focus on recommendations quality, to figure out how to leverage what we know about the content, what we know about the person, and what they are telling us they want to see more or less of. I think these recommendations are going to get better and better, to the point where they feel just as good as, if not better than, some of your connected content in Feed. That is our aspiration.

AH: You mentioned goaling and objective function. I know Mark said at the time, “We expect time spent to decrease if MSI works.” What was the goal for MSI? Did it work? What is your goal for the discovery engine? What are you goaling the teams towards, in terms of a north star metric, for the discovery engine?

TA: For MSI, we ended up creating almost a composite metric. The heart of it was looking at whether we were increasing the amount of social activity on the site, such as friend posting, commenting, and things of that nature. Now, we still look at all of that. There are different teams that look at how many comments people are sharing with one another and how many messages people are sending back and forth. There are a lot of different goals at different levels in the organization that are meant to reflect the health of the social ecosystem.

What I look at in particular is four buckets of goals, I would say. I would love to get across that there is no one goal, so I will give you the shape of that.

The first bucket: Are people visiting Facebook? Are they choosing to visit Facebook on a monthly basis or on a daily basis? If we have bad recommendations, people are not going to visit Facebook as often. They will go to another competitor that is doing recommendations. Are people choosing to visit?

The second bucket: We are a business, so are we actually growing revenue? Are these things monetizing? Monetizing video is different from monetizing Feed. There is a whole lot of work we need to do to make sure that the business is running as we make these format integrations and innovations.

The third bucket: What is the quality and reliability of the experience? Does Facebook load quickly? I used to be responsible for the performance and reliability of the Facebook app. We learned that making sure that all these interactions load right, feel right, and work well is an important part of the holistic experience. I also look at trust, safety, and integrity. How are we doing on prevalence, mitigating the harms and adhering to community standards? The trust piece is increasingly also about privacy. How well are we doing in terms of our privacy commitments and different things like that? There are goals that ladder up to all of those at different levels of the organization.

The fourth bucket: Looking at sentiment, how much do people prefer Facebook for this over any other service? It gives me a complete picture of how we are doing. I want to make sure that Facebook is growing, and that people feel like, “This is a valuable thing to me,” even as we have new generations using social media. I want to make sure that Facebook contributes to Meta’s overall business. I want to make sure that Facebook feels fast, easy to use, and enjoyable. I want to make sure that Facebook is safe and that we do a good job of protecting your data. All of that boils down to how I think about the goals.

Shirin Ghaffary: I grew up with Facebook. I remember the “pivot to video” era when I was first starting in journalism, when everyone wanted to be a video journalist — including me — because of Facebook. There is the MSI stuff we talked about, and now you are leaning toward discovery. You have been at Facebook since 2010, so can you help us define some of these different eras that you have seen for News Feed? Can you briefly give us an overview of how you would demarcate the different eras?

TA: I joined the company in 2010 as an engineer, and I worked on our growth team for the first two years. I think our milestone was like 500 million users that we hit in my first year. The era that I really remember was the transition from web to mobile. This was around the time of our IPO, and I remember tons of headlines saying, “Facebook isn’t going to succeed on mobile,” “Facebook doesn’t have a business,” “Facebook is a web company, it’s dead.” I say this because I am used to living through skepticism as Facebook has made these big leaps, yet I have always seen us get to the other side.

The shift from web to mobile was a big one. And in terms of the technology lift and the engineering lift to do that, it was actually huge. Figuring out how to design and have a good mobile product when you were used to designing for the web was big. Our core feed did not change much, but it was a gigantic shift we had to make as a company under a lot of pressure during the IPO. That was memorable to me.

Alongside that was figuring out how to make money on mobile because we were so used to making money through the web right-hand column ads. In hindsight we all laugh about it, but it was this huge mental breakthrough to be like, “Oh, we are going to show ads in Feed.” It was like, “We can’t possibly do that.” Watching the organization go through that was interesting.

I am proud of Facebook for being able to integrate different formats. I think you see TikTok and YouTube trying to do this, but Facebook was able to integrate video. It was able to integrate other entity types too, like Groups, where you could form a community. I think that over the next several years, you saw Feed grow and be able to integrate these new format and content types. It was exciting to see how much you could use Feed to keep track of your friends, your communities, and following pages. That was this proliferation of us understanding the power of what Feed could do.

There was probably a phase after that where you started to see things shift to Stories. This was similar to the shift to mobile; we were like, “Let’s get Stories out there,” but people didn’t use it for a while. It was sitting at the top of Feed, and everyone was saying, “Facebook Stories is a ghost town. It’s going to fail.” Then a year and a half later, people were just like, “Oh, cool. This is the way I want to share now,” and it took off.

Those were a couple of the big transformations that I saw. I think the next one is the story of format innovation and wiring in new ways for people to express themselves. The format stuff that is happening now is around video and short-form video. It was Groups getting wired into the experience a few years ago — which went well — and now it’s creators. I see our next piece as an expansion.

The technology piece that I think is new and exciting, similar to web to mobile, is the power of AI. The innovation that is happening in the AI space, and what some of these new AI architectures and models are capable of doing, is just so amazing. Who is going to be able to leverage the power of AI recommendations and marry them with the power of traditional, connected social networks? You see TikTok trying to do that.

A lot of folks and the press say to me, “Oh Tom, are you chasing TikTok?” I say, “Hey look, from where I sit, I see TikTok chasing Facebook.”

I started my career on the growth team and I see TikTok asking me to find my friends. I was scrolling through TikTok, and there was a unit that said “people you may know.” I managed the “people you may know” team at Facebook as my first management job. We created a system and then I’m literally seeing it on TikTok. I’m like, “I know what’s going on here. This is what I worked on when I was growing the Facebook social network.”

I think all of these companies are starting to try to figure out what is going to be the right blend of the AI algorithmic recommendations and the social interactions and recommendations. This is why I feel like Facebook is actually well-positioned to succeed. We have integrated formats in the past, with new entity types, Groups, and creators’ Pages. We are pretty good at AI, and we are pretty good at social. I think how well we bring all these things together over the next year or two is going to determine how successful we are and how much people want to continue using Facebook. I’m pretty optimistic right now.

SG: That’s a great point. I have noticed that with TikTok too. Does TikTok need to become more of a Facebook-like app and integrate your real-life friends in order to be successful?

TA: It seems like it’s trying.

SG: It is definitely trying, but I will set that aside for now. There are important questions I want to get to around the societal impact of News Feed, and these are all probably topics and discussions you have heard before. Just to jump back to meaningful social interactions, what is your response to the criticism, especially from Frances Haugen, that this MSI metric gave preference to extreme and polarizing content, and that the integrity team seemed to have found some evidence of this? Is there context missing here? How would you respond to that criticism?

TA: I think with any system of incentives you have folks that find a way to abuse it. It was funny, because one of the integrity problems that we were looking at before MSI was things like watchbait or clickbait. When you optimize a system for time spent, you get a different type of problem. You get people trying to trick you into watching or clicking on something that is not that valuable.

MSI came from the spirit of people wanting to feel like they can interact with one another on Facebook. Comments were a very good proxy for that. But we ended up seeing that some people left gnarly comments and abused that system. When we talk about integrity, we actually know that it is an adversarial problem, which means it is never solved. It just unfortunately changes.

When we introduced MSI, even with a lot of good research about what people wanted, you saw some of the adversarial behavior come in and people gaming the system. The reason we invest so much in integrity, and we have shared how much we have invested in it from a human headcount and a budget perspective, is because we know that it is this adversarial problem. A lot of the research that ended up being leaked out there was created by our integrity team. Some of it was created by people on my team who said, “Hey, look, this is how this is being abused. This is now what we need to do to fix this.” That is why MSI and our implementation of it has changed over and over again as we understand how people’s feelings about what connects them socially are changing and how these bad actors are abusing our system.

I am proud of the work and research that the integrity teams do. I spend a bunch of time with our integrity teams. They are deeply embedded in our product development process now and I think that we have gotten quite good at this. As we went through that period, we saw a lot of different ways that people were distorting or abusing the good use cases for MSI for things that were not so great.

AH: Is it possible to foresee that abuse ahead of time? I mean, you are dealing with so many different inputs, outputs, and metrics, and you are looking at such a high level at a thing that has 3 billion users on it. Can you foresee this? I think the critics would say you either didn’t want to, or Haugen would say you put profit above safety and that it mattered more that it was keeping people on the site. I think that is a very common criticism that gets leveled against the Feed. Can you respond to that?

TA: I have a little bit of trouble with, “Hey, could you not foresee this?” We were investing so much and we had these teams that were working and researching the problem. That was why a lot of that research was being produced as this evolved.

In terms of foreseeing it, I actually looked at some of the top integrity issues before MSI and they were just very different. As we change things, we know that some things we are going to be able to predict, and some things we are not going to be able to predict. Again, that is why we have this very active integrity team looking at how this unfolds on a very regular basis, and I think we have hired some of the best in the business to be able to analyze and think through all of this.

We went through a period when we were building these teams where we were not always thinking about the very adversarial nature of things. Actually building out the integrity muscle created a set of people and a culture that were able to say, “This is the way a bad actor is going to go in and abuse this system. This is the way that this thing is going to get compromised.” When we were going through that period, we were still building out integrity. We were spending so much to build up those teams.

One of the things I talked about is the history of different phases of Facebook. I remember the history of expansion of different teams, because when I was leading engineering, I had to figure out hiring and stuff. In that period, we were expanding those integrity teams so rapidly because we knew, “Hey, we’ve got to build this muscle and invest here.”

SG: The second biggest criticism we have seen about Feed's history is around the problem of low-quality content. In a recent quarter, for example, there was a suspected scam that got taken down after it got millions of views, and it was at the top of the widely viewed content report. How do you get the quality of content higher on Facebook, especially when recommendations from people who may not be your friends are going to play an even greater role in what people see?

TA: There are a couple of things that we are looking at here. One is what I mentioned before, which is incorporating more signals that reflect people telling us what they want or do not want. See more, or see less. We have been looking at this issue of watchbait recently, which is where somebody is trying to get you to watch a video to its completion, because if you produce a video of a certain length you can monetize it. There are all these incentives to get you to watch the video. Sometimes the video is just terrible. You watch until the end, you’re like, “This video sucked.” It’s just a bad experience.

We are looking at it like, "Okay, what are the types of things that help us understand that this was a bad video? Were there angry reactions on this video? When we ask people, do they want to see more or see less of it?" Whatever signal you got from the fact that people watched a lot of this video, you need to discount it, because there are these other, more qualitative signals that show people did not actually like the experience of watching this video. We are doing a lot of work around things of that nature.

We talked about this with video in the past, but I speak about these things because Reels and video are top of mind for the recommendation systems right now. We are looking at different aspects of originality. Was this posted by the person that created it? There might be types of content with limited originality. Somebody reacts to a reel, so they are showing somebody else’s content, but they are adding commentary or something interesting on top of it. Then there are other things that are just low originality, like somebody took somebody else’s video and posted it to their account.

How do we reward more of the folks that are creating original content or maybe the limited original content? They are adding something new to the conversation, versus folks that are just recycling or reposting. Doing this at scale is something that we are working through with Reels and some of our other content types. That is a piece that I am pretty excited about, and the team talks about it a lot. We do want Facebook to be attractive to creators and we want creators who put effort into creating original content to feel like they are getting rewarded through distribution and the monetization work that we are doing.

SG: That makes sense. In the long term, the quality of the content has to go up if you want people to stay there. In the Feed's past, there was a time when it felt like a lot of what you would see was political content and people debating the news of the day. Then we saw Facebook shift away from that, especially with the direction it is taking now, and there was a point, even in the last US presidential election, when Facebook shut off political group recommendations. Now that you are recommending more to people, how do you allow for recommendations that don't exacerbate the worst in people? It seems like a tough problem. Is the answer to shut off political recommendations across the board? How do you balance that mix of recommending things that could be politically controversial or politically polarizing?

TA: That is a great question. Just to clarify, we still are not recommending political content in Groups. That was not a temporary thing; that was a permanent thing that we decided to do. In terms of recommendations overall, the first thing that we look for is the types of things people want.

I will tell you, I am not getting a lot of research that says young adults want more political content on Facebook. I am not seeing a ton of research that says anybody wants a lot more political content on Facebook. That is why we have been reexamining where we are showing political content, and you are not seeing it show up in a lot of our recommendations channels. It’s because that is not what people want to see.

I actually think we learned this in the pandemic, too. People are fatigued by a lot of the political discussions and things like that. Everything was just so heavy. One of the reasons why short-form video took off is because it’s entertaining, fun, and uplifting. It is a different vibe than what people were seeing with the doomscrolling. The content we want to reward is the content people want to see on Facebook right now.

In terms of how we prevent potentially bad content from coming into recommendations, we have two sets of guidelines. One is our community standards. “This content is not allowed on Facebook.” If something violates our community standards, it does not matter if you are connected to it or not, we don’t put it into your feed or recommendations.

We also have our recommendations guidelines. They are a distinct set of policies and guidelines that govern what we show in recommendations. That is an even higher bar than our community standards, because this is content that Facebook and Meta are recommending. We are going to be keeping a very high bar in terms of those guidelines. I actually hope that all of the other companies that are doing recommendations publish their guidelines or the type of transparency reports that we do. As more and more of these social services get into recommendations, it is going to be important to have this collective understanding of how these companies are making these decisions. How are they enforcing these things?

We have this infrastructure with our policies, with our enforcement, and with the reports that we put out to continue sharing how we are doing. You even mentioned things like the WVCR, right? We are going to continue to publish that. We just put that out there. Some of the things on there, I’m like, “Oh gosh, okay, that is something we need to figure out how to do better.” I think that it is important that we are always putting out the work on how we are approaching this.

SG: Definitely. I think those widely viewed content reports, as well as tools like CrowdTangle in the past, have been helpful for people to better understand Facebook. I have to say that one of the biggest concerns I hear from experts — researchers studying social media or other journalists — is that it’s hard to even get a grasp on what is going on with Facebook. What are people seeing on Facebook? Everyone’s experience is different, because there has been debate even inside Facebook about whether CrowdTangle, for example, is presenting a holistic view of what is being seen. What do you say to those who think Facebook’s feeds are a black box? How are we supposed to make sense of it all? How transparent do you want to be about this going forward? How transparent can Facebook really be on this?

TA: There are a couple of things here. I think we need to evaluate Facebook, as well as all these other companies, on how well they do at continuous enforcement of their community standards.

So what does that mean? First, you need to publish your community standards and say what's allowed and what's not, and then have a process to modify them. We have obviously been trying things like the oversight board to have an external body give us feedback on how good our community standards are. I think you need to be very clear on what the rules are here.

Then you need to have accountability for how you are enforcing the rules. We have our transparency report, and we talk about how much content we are taking down. It is being externally audited in terms of its methodology and in terms of its results. We are trying to have a lot of other eyes take a look at this.

Let’s say Facebook didn’t exist tomorrow. Well, you are still going to have a video go live on Twitch, that is then pulled down and put onto YouTube, that is then turned into short-form video clips on TikTok, that are then reposted on Twitter. That whole ecosystem is shaped by a bunch of different companies. Facebook is one and we are an important one. We recognize that we have a big responsibility, but if you are just looking at Facebook and specifically how Facebook does this particular thing in their algorithm, then you are missing the bigger picture. How is each company approaching this problem? How is each company enforcing this problem? Who is auditing and providing some sense of whether or not this is even a legitimate way of attacking the problem? That is the conversation that I think we need to be having. Facebook, and Meta more broadly, has been doing a good job leading there.

SG: I guess it is a question of who should be doing that. I know Facebook and Meta have said that they welcome regulation in some of these areas. Do you think that needs to go to an outside body?

TA: I think it could. I think the oversight board is an interesting example of us trying something like this. We have a lot of our policy folks working with groups from other tech companies on evolving things like our approach to transparency. There is a bunch of work just in terms of the industry getting together and figuring out how we collectively do this.

I think there is a legislative angle as well. Facebook has historically shared that, “Hey, we are supportive of regulation in this space, but I think it needs to be well-thought-out regulation. We have been very open to having that dialogue.” I don’t think it’s just one thing that you can do, but a collective set of things. I think what you have seen Meta do is start to model some of the ingredients of the broader solution that we think could be viable.

AH: Just covering you guys for a while now, it feels like a lot of the criticism that you get comes from the opacity around ranking and Feed. People feel like you guys have your thumb on the scale in a way that is harmful to a particular group. I remember when I came to MPK in 2016, you did a whiteboard draw-out of how ranking works. People don’t get that it is personalized for each person. I guess there is still this tension of having responsibility as the company for what happens when humans have responsibility. When is it the human’s fault, and when is it the machine or Facebook’s fault?

When you are going to this future of recommendations, you have to expect that there is going to be even more scrutiny. Potentially, it also looks like it is going in the direction of legal liability for what Facebook recommends, at least in certain markets. How do you reckon with that push that you guys are doing? Do you feel like you have to do the product because it’s what the users want? The responsibility and the scrutiny that it is going to bring you has already been bubbling, and it has only just been based on the connected graph so far. Now you are going to be really going into recommendations. Does that scare you at all? I mean, that is a big tension you guys are going to have to reckon with.

TA: It doesn’t scare me, but I recognize the responsibility that we have, and that is weighty. The reason I am okay with recommendations is because of how much we have built up in this space. We have talked about spending $40 billion over several years and just how many people we have working on this. If there is any company that has risen to the challenge of trying to do this at scale, I think it’s Meta. We are well-positioned to do this for recommendations.

As I look at the product features for the discovery engine, our integrity teams are in there and our road maps are aligned. It is something that we’re looking at very much upfront. But I also think it is important to have that broader conversation. Like I said, we are not the only people in the recommendation space at all.

If Facebook or Meta disappeared tomorrow, AI is not going away, recommendations aren’t going away, and large-scale networks aren’t going away. My main thing is, “How do we have a dialogue where Facebook and Meta have a seat at the table and are in a leadership position?” but we are looking more broadly on what the industry needs to do here.

I think getting the legislation and the regulation right is going to be important. If it is overly punitive, like, “Hey, if any bad recommendation comes through, we are going to fine you some exorbitant amount of money.” Well, you know what? In any process, people make mistakes. People make mistakes when they manufacture a car on an assembly line. Again, that is why you have these continuous quality and enforcement things that we have, like integrity and the transparency reports, because we are constantly trying to get better and hold ourselves accountable to that.

You have to be careful about how it is crafted, because of the downside of a very strict regulation that says, “make no mistakes ever.” Maybe then companies aren’t going to provide this, and maybe that is actually a big disservice to the world. Maybe companies in other countries or other jurisdictions are going to grow and this is going to be available to other folks. These are all of the types of conversations we are having. We have to look at the consequences, intended and unintended, of recommendations. We also have to look at the consequences, intended and unintended, of regulation so we can strike the right balance here.

AH: Do you feel a sense of responsibility globally? I think the criticism that Haugen and others have leveled is that Facebook ignored safety in countries outside North America and Europe, traditionally. Do you feel like you have a more global grasp of what the implications of this are now?

TA: Facebook and Meta serve a global community. We look at our programs to make sure that, no matter who is using the product and from where, that we apply those safety measures and those integrity measures. In different regions, it requires different expertise and different things to do. We released a human rights report, I think in the last week or so. I don’t know any other tech company that does this. What you are seeing is us investing here and making progress. This is us trying to tell the world what we are doing about it and that we do think about this globally.

I think a lot of the advancements that we are making in AI are going to help us there. We talked about some work that our FAIR team had done. It is one of the most sophisticated AI translation systems that has ever been created. Well, guess what? That AI is being used to help us with integrity issues in different languages across the world. We can take an AI that might have been trained in one set of languages and actually apply it to another set of languages because of all the investments that we have made in AI.

I think we are doing quite a lot through the technology and policies that we are creating and the external relationships that we are building to be able to tackle this on a global scale. I do hope that is coming through, because that is definitely where we are thinking internally.

AH: I know discovery engine was a big announcement. Is there anything we should expect to see going forward?

TA: This is an exciting time for Facebook. We definitely have one foot in our legacy and history, which is what you see in the Feeds launch that we did today. But we also have a big foot in the future of what the next generation is looking for from social media. It is a fun time managing that transition. Like we talked about earlier, Facebook has done this multiple times; we have been through this big evolution. This is another big one, but I think on the other side of it, we are going to say, “Of course we went in this direction. This is what people wanted out of social media.” I am pretty optimistic about where we are headed.

AH: It’s a brave new world. I’m glad we got to catch up on this. Thank you.

TA: I really enjoyed the conversation. Thanks for having me.

SG: Thank you so much.
