Nvidia’s biggest fails of all time

Nvidia might be a titan of the graphics industry, but it has made some major mistakes over the years. Here are Nvidia's biggest-ever fails.

Nvidia is the biggest and most successful graphics card manufacturer of all time, despite AMD’s best efforts. But wearing the crown doesn’t mean this king has never faltered. In fact, like its stiffest competition, Nvidia has made some major missteps over the years, leading to embarrassment at best and billions of dollars wasted at worst.

If you’re here to poke fun at the bear, you’re in the right place. Here are Nvidia’s biggest fails of all time.

GTX 480

Nvidia has made some amazing graphics cards over the years, and its latest are among the best graphics cards available today. But it has made a few poor cards too, and some that were downright terrible. The worst Nvidia GPU ever was arguably the GTX 480. Built on the Fermi architecture, it was incredibly power-hungry, drawing as much as some dual-card systems from AMD, and that in turn led to sky-high temperatures.

It might have just about been the fastest single-die graphics card you could buy, but with multi-GPU configurations still viable in 2010, that wasn’t as big a deal as it is today. Combine that with a high — for the time — $500 price tag, and the GTX 480 was dead in the water.

Too expensive, too hot, too power-hungry, and not fast enough to justify any of it.

RTX launch

Today, Nvidia’s RTX brand of graphics hardware and its capabilities are a mainstay of the industry, with ray tracing performance and upscaler support being major factors in any modern graphics card review. But in 2018, that wasn’t the case. Nvidia faced an uphill battle with its RTX 2000-series, the first generation of GPUs with new hardware accelerators for specific and, until then, practically unheard-of tasks.

It was a classic chicken and egg problem, and though Nvidia tried to push both out of the nest at the same time, it ended up with the proverbial zygote all over its green face.

The first attempt at deep learning super sampling (DLSS) was technically impressive but underwhelming in practice, with far too many visual artifacts to consider it a must-use feature. Ray tracing was even worse, having a limited effect on how good a game looked while crippling performance on even the most expensive of Nvidia’s new cards. And there were precious few games that supported either, let alone both.

Those expensive cards weren’t even that great when they weren’t ray tracing, either. The RTX 2080 was only just about competitive with the GTX 1080 Ti, which had been released a year and a half earlier and cost $100 less. The RTX 2080 Ti was a big performance improvement, but with a price tag close to double that of its predecessor, it was a hard sell. The generation never really recovered, with only a few of the low-end cards ever finding much purchase in gamers’ PCs.

Arm acquisition attempt

In what was set to be one of the biggest technology acquisitions in history, Nvidia announced in 2020 that it was to buy the British chip designer Arm for a total of $40 billion, including a 10% share of Nvidia. However, Nvidia quickly ran afoul of the UK’s Competition and Markets Authority, which raised concerns over competition, suggesting that Nvidia’s ownership of Arm could see it restrict its competitors’ access to Arm’s chip designs.

This was followed in late 2021 by the European Commission’s investigation into potential competition concerns, which found that, due to Arm’s existing deals with Nvidia’s competitors, the acquisition would give Nvidia too much knowledge of its competition.

These regulatory hurdles first stalled, and then ended, any hopes of a deal being done, and both Arm and Nvidia announced in February 2022 that it had fallen through. Due to stock price changes, the deal was worth an estimated $66 billion at the time of the cancellation.

Over-reliance on crypto mining

Cryptocurrency mining has been a factor in most of the graphics card shortages and subsequent pricing crises in recent years, but the one that ran from 2017 through to 2019 or so made it clear just how big a factor it was, despite Nvidia’s attempts to hide it. While Nvidia claimed that cryptocurrency mining was only a small portion of its graphics card sales, it turned out to have been obscuring the truth by counting purchases of smaller numbers of graphics cards as bought for gaming, regardless of where they might eventually have ended up.

The boom gave Nvidia ample excuse to sell cards at a premium, since it was selling them hand over fist regardless, but it was followed by a crypto winter. When prices crashed in early 2018, GPU sales fell dramatically, and Nvidia’s stock price fell 50% in just a few months. This was followed by further falls later in the year with Nvidia’s lackluster RTX 2000-series launch, with Nvidia making clear its cryptocurrency mining sales had continued to contract.

To top it all off, the U.S. Securities and Exchange Commission (SEC) investigated Nvidia for misleading investors by misreporting how significant its cryptocurrency mining sales were. Nvidia ultimately paid a $5.5 million penalty in 2022.

RTX 4080 12GB unlaunch

Even the lackluster launch of the RTX 2000 series didn’t cause Nvidia to “unlaunch” a product and pretend it never existed, but that’s exactly what happened with the RTX 4080 12GB. Announced alongside the RTX 4090 and RTX 4080 16GB, it was the subject of immediate derision from gamers and enthusiasts, who cited its high price, cut-down performance, and deliberate misnaming to hide its shortcomings as major red flags.

This was an RTX 4070 in disguise — indeed, it ultimately relaunched as the RTX 4070 Ti several months later — and nobody was going to pay RTX 4080 prices for it.

Nvidia ultimately had to pay its board partners for all the RTX 4080 12GB packaging that they had to destroy, but that doesn’t account for all the hours spent developing the card’s BIOS, custom cooler designs, and marketing campaigns. It was a mess, and nobody was happy about it.

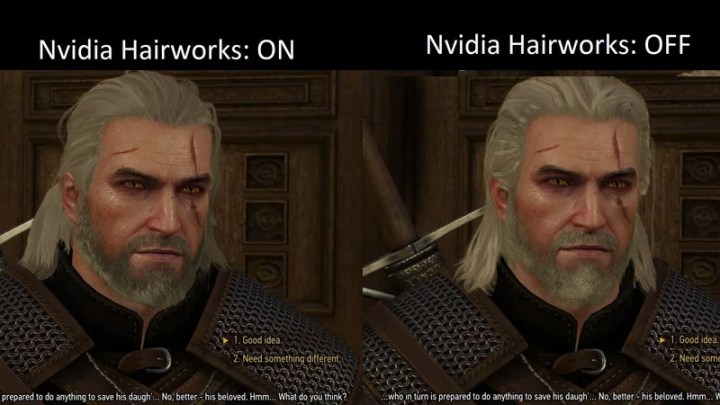

Hairworks

Nvidia has had a number of intriguing software projects over its storied history, but Hairworks could be the most controversial. A further development of the PhysX technology it acquired when it purchased Ageia in 2008, Hairworks was designed to make hair and fur look more realistic in games, and it did. Sometimes.

The most prominent early game to support it was The Witcher 3: Wild Hunt, a game praised for its incredible graphical fidelity that still holds up well today. But Hairworks was decidedly unimpressive. Not only was it locked to those running Nvidia hardware, but it had a massive effect on performance, knocking 10-20 fps off even high-end gaming PCs when running at full detail.

And it didn’t even look that good! While there will always be some subjective perspective to consider with visual effects in games, the overly smooth, overly shiny hair of Geralt was too distracting for many gamers, and the performance hit was impossible to justify. Others did quite like the effect, however, particularly the hair and fur on some of the game’s monsters, which looked far more lifelike.

Ultimately, Hairworks hasn’t seen major adoption across games, even those which do use a lot of hair and fur effects, and though it doesn’t have the performance impact it once had, it’s still something many gamers would rather do without.

The EVGA RTX 4090 prototype never made it to production. JayzTwoCents

Losing EVGA

For many PC enthusiasts, this failure hit them just as hard as it did Nvidia. In September 2022, long-standing Nvidia graphics card partner EVGA announced that it was exiting the GPU business entirely. This ended a run of more than 20 years of graphics card manufacturing and brought an end to many of the most iconic ranges of enthusiast GPUs, including the incredible KINGPIN editions of top cards that regularly broke world records.

This was a huge surprise because as much as 80% of EVGA’s business was related to GPU sales. But it was Nvidia that caused the rift, and ultimately, EVGA’s exit.

EVGA’s CEO, Andrew Han, explained at the time that the relationship between the two companies had soured considerably over the years. He said that Nvidia’s continued price rises, its selling of its own first-party GPUs, and a generation-upon-generation fall in GPU margins made it an industry EVGA no longer wanted to be a part of.

Nvidia undoubtedly shifted stock of its new cards to other board partners, but it lost something special with EVGA. We all did.
