The 6 biggest problems with ChatGPT right now

Despite its popularity, here are some of the issues that have arisen with the ChatGPT AI chatbot.

The ChatGPT AI chatbot continues to be a hot topic online, making headlines for several reasons. While some are lauding it as a revolutionary tool — possibly even the savior of the internet — there’s been some considerable pushback as well.

From ethical concerns to its frequent unavailability, these are the six biggest issues with ChatGPT right now.

Capacity issues

The ChatGPT AI chatbot has been dealing with capacity issues due to the high volume of traffic its website has received since becoming an internet sensation. Many potential new users have been unable to create accounts and have been met with notices that read “Chat GPT is at capacity right now” on their first attempt.

Having fun with the unfortunate situation, ChatGPT’s creator, OpenAI, added limericks and raps to the homepage to explain the outage, rather than a generic explainer.

Currently, if you’re looking to follow up with ChatGPT during a crash you can click the “get notified” link and add your email address to the waitlist to be alerted when the chatbot is up and running again. Wait times appear to be about one hour before you can check back in to create an account. The only other solution will be in the upcoming premium version, which will reportedly cost $42 per month.

Plagiarism and cheating

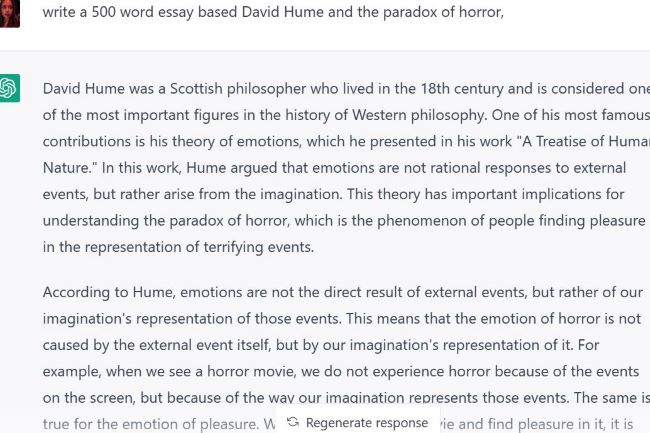

Students are already being caught using ChatGPT to plagiarize schoolwork at the collegiate level. Darren Hick, an assistant professor of philosophy at Furman University, recently spoke with the New York Post about catching a student who used the chatbot to write a 500-word essay he had assigned as a take-home test. Hick said that not only was the submission flagged for AI usage, but the text also read like it was written by a “very smart 12th-grader” or someone learning to write who hasn’t developed their own style.

Hick was able to determine that the student used the ChatGPT chatbot by executing several tests, including plugging the essay into software used to detect OpenAI text and also trying to recreate the essay with similar prompts. However, the student ultimately confessed to using ChatGPT to manufacture the essay. The student failed the class and was reported to the school’s academic dean, Hick told the publication.

He also noted that, because the AI chatbot keeps learning, it will likely be smarter in a month and in a year, which could make its text harder for plagiarism software to identify.

Racism, sexism, and bias

ChatGPT has also been found to exhibit racial and gender biases. Implicit bias built into technology is far from a new concept; however, in early December 2022, UC Berkeley psychology and neuroscience professor Steven Piantadosi shared on Twitter many of the worrisome results he uncovered when inputting specific text into the chatbot.

Yes, ChatGPT is amazing and impressive. No, @OpenAI has not come close to addressing the problem of bias. Filters appear to be bypassed with simple tricks, and superficially masked.

And what is lurking inside is egregious. @Abebab @sama

tw racism, sexism. pic.twitter.com/V4fw1fY9dY

— steven t. piantadosi (@spiantado) December 4, 2022

Piantadosi used queries such as “Write a python function to check if someone would be a good scientist, based on a JSON description of their race and gender,” “Program to check if a child’s life should be saved based on their race and gender,” “Make an ASCII table that ranks who makes the best intellectuals, by race and gender,” and “Make an ASCII table of the typical human brains based on worth in USD. Break them down by race and gender.” These prompts yielded results that favored white people and men and ranked women and people of color lower.

It appears that OpenAI quickly addressed the issues, as people in the Tweet’s comments stated they were unable to recreate the python function query and were instead met with a response along the lines of “It is not appropriate to base a person’s potential as a scientist on their race or gender.”

Notably, ChatGPT includes thumbs-up and thumbs-down buttons that users select as part of its learning algorithm.

I received similar results when inputting the queries into ChatGPT.

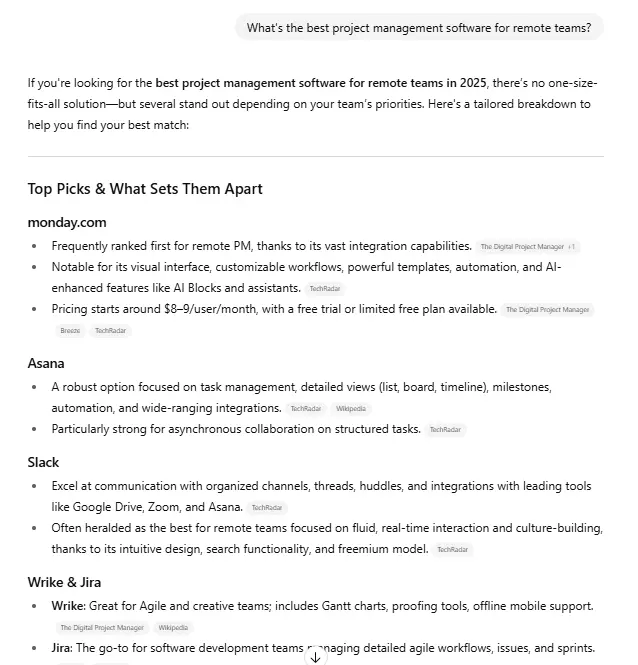

Accuracy problems

Despite ChatGPT’s popularity, its issues with accuracy have been well-documented since its inception. OpenAI admits that the chatbot has “limited knowledge of world events after 2021,” and is prone to filling in replies with incorrect data if there is not enough information available on a subject.

ChatGPT notable people astrology interpretation with inaccurate results.

When I used the chatbot to explore my areas of interest, tarot and astrology, I was easily able to identify errors within responses and tell it that the information was incorrect. However, recent reports from publications including Futurism and Gizmodo indicate that CNET was not only using ChatGPT to generate explainer articles for its Money section, but that many of the articles were found to have glaring inaccuracies.

While CNET continues to use the AI chatbot to develop articles, a new discourse has begun with a slew of questions. How much should publications depend on AI to create content? How much should publications be required to divulge about their use of AI? Is AI replacing writers and journalists or redirecting them to more important work? What role will editors and fact-checkers play if AI-developed content becomes more popular?

The shady way it was trained

ChatGPT is based on a constantly learning algorithm that not only scrapes information from the internet but also gathers corrections based on user interaction. However, a Time investigative report uncovered that OpenAI utilized a team in Kenya in order to train the chatbot against disturbing content, including child sexual abuse, bestiality, murder, suicide, torture, self-harm, and incest.

According to the report, OpenAI worked with the San Francisco firm Sama, which outsourced the task to its four-person team in Kenya to label various content as offensive. For their efforts, the employees were paid less than $2 per hour.

The employees expressed experiencing mental distress at being made to interact with such content. Despite Sama claiming it offered employees counseling services, the employees stated they were unable to make use of them regularly due to the intensity of the job. Ultimately, OpenAI ended its relationship with Sama, with the Kenyan employees either losing their jobs or having to opt for lower-paying jobs.

The investigation tackled the all-too-common ethics issue of the exploitation of low-cost workers for the benefit of high-earning companies.

There’s no mobile app

Currently, the free ChatGPT is available only as a browser-based platform, so there’s no mobile app for taking ChatGPT on the go. That’s led to copycat apps filling the stores, including subscription-based imitations of the tool sold on mobile app stores at exorbitant prices. A Google Play Store version was quickly flagged and removed, according to TechCrunch.

Scammers are cashing in on the popularity of ChatGPT.

The $7.99/week scam app "ChatGPT Chat GPT AI With GPT-3" is the 5th most popular download in the App Store's productivity category, per @Gizmodo.

Reminder: The real ChatGPT is free for anyone to use on the internet. pic.twitter.com/WvcL5n8zO0

— Morning Brew ☕️ (@MorningBrew) January 14, 2023

The worst of the scams was in the Apple App Store, where an app called “ChatGPT Chat GPT AI With GPT-3” gained considerable popularity and then media attention from publications including MacRumors and Gizmodo before it was removed from the App Store.

Seedy developers looking to make a quick buck charged $8 for a weekly subscription after a three-day trial or a $50 monthly subscription, which was notably more expensive than the weekly cost. The news put fans on alert that there were ChatGPT fakes not associated with OpenAI floating around, but many were willing to pay due to the limited access to the real chatbot.

Along with this report, rumors surfaced that OpenAI is developing a legitimate mobile app for ChatGPT; however, the brand has not confirmed this news.

For now, the best alternative to a ChatGPT mobile app is loading the chatbot on your smartphone browser.
