The popularity of ChatGPT may give Nvidia an unexpected boost

Nvidia may benefit in a major way from the rapid rise of OpenAI's ChatGPT.

The constant buzz around OpenAI’s ChatGPT refuses to wane. With Microsoft now using the same technology to power its brand-new Bing Chat, it’s safe to say that ChatGPT may continue this upward trend for quite some time. That’s good news for OpenAI and Microsoft, but they’re not the only two companies to benefit.

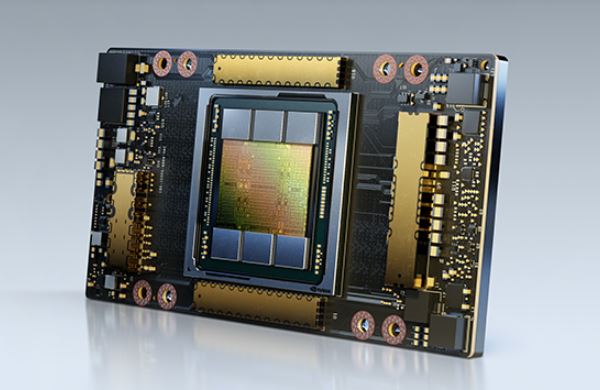

According to a new report, the sales of Nvidia’s data center graphics cards may be about to skyrocket. With the commercialization of ChatGPT, OpenAI might need as many as 10,000 new GPUs to support the growing model — and Nvidia appears to be the most likely supplier.

Research firm TrendForce shared some interesting estimates today, and the most interesting one pertains to the future of ChatGPT. According to TrendForce, the GPT model that powers ChatGPT will soon need a sizable increase in hardware to keep scaling up its development.

“The number of training parameters used in the development of this autoregressive language model rose from around 120 million in 2018 to almost 180 billion in 2020,” said TrendForce in its report. Although it didn’t share any 2023 estimates, it’s safe to assume that these numbers will only continue to rise as much as technology and budget allow.

The firm claims that the GPT model needed a whopping 20,000 graphics cards to process training data in 2020. As it continues expanding, that number is expected to rise to above 30,000, which would account for the roughly 10,000 new GPUs. This could be great news for Nvidia.

These calculations are based on the assumption that OpenAI would be using Nvidia’s A100 GPUs to power the language model. These ultrapowerful graphics cards are really pricey, in the ballpark of $10,000 to $15,000 each. They’re also not Nvidia’s top data center cards right now, so it’s possible that OpenAI would go for the newer H100 cards instead, which are supposed to deliver up to three times the performance of the A100. The H100 comes with a steep price increase, with one card costing around $30,000 or more.
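To put those figures in perspective, here is a rough back-of-the-envelope sketch that multiplies the estimated number of additional GPUs by the per-card prices quoted in this article. It assumes every card is bought at those prices with no volume discount, and that the whole order consists of a single GPU model. Neither assumption comes from TrendForce, and the variable and function names are purely illustrative.

```python
# Back-of-the-envelope estimate using only figures quoted in the article.
# Assumptions (not from TrendForce): no volume discounts, and the whole
# order is filled with a single GPU model.

GPUS_IN_2020 = 20_000                # cards reportedly needed in 2020
GPUS_PROJECTED = 30_000              # projected requirement ("above 30,000")
A100_PRICE_RANGE = (10_000, 15_000)  # USD per card, the article's ballpark
H100_PRICE = 30_000                  # USD per card, "around $30,000 or more"


def order_cost(gpu_count: int, unit_price: int) -> int:
    """Total cost in USD for gpu_count cards at unit_price each."""
    return gpu_count * unit_price


additional_gpus = GPUS_PROJECTED - GPUS_IN_2020
a100_low = order_cost(additional_gpus, A100_PRICE_RANGE[0])
a100_high = order_cost(additional_gpus, A100_PRICE_RANGE[1])
h100_cost = order_cost(additional_gpus, H100_PRICE)

print(f"Additional GPUs: {additional_gpus:,}")
print(f"All-A100 order: ${a100_low:,} to ${a100_high:,}")
print(f"All-H100 order: roughly ${h100_cost:,} or more")
```

Under these assumptions, an order of that size would come to somewhere between $100 million and $150 million for A100 cards, or roughly $300 million or more for H100 cards.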

The data center GPU market doesn’t only consist of Nvidia — Intel and AMD also sell AI accelerators. However, Nvidia is often seen as the go-to solution for AI-related tasks, so it’s possible that it might be able to score a lucrative deal if and when OpenAI decides to scale up.

Should gamers be worried if Nvidia does, indeed, end up supplying a whopping 10,000 GPUs to power up ChatGPT? It depends. The graphics cards required by OpenAI have nothing to do with Nvidia’s best GPUs for gamers, so we’re safe there. However, if Nvidia ends up shifting some production to data center GPUs, we could see a limited supply of consumer graphics cards down the line. Realistically, the impact may not be that bad — even if the 10,000-GPU prediction checks out, Nvidia won’t need to deliver them all right away.
