The Usability Testing Playbook [Expert Tips & Sample Questions]

![The Usability Testing Playbook [Expert Tips & Sample Questions]](https://www.hubspot.com/hubfs/usability-testing-1-20250305-3357250.webp)

As an employee and a freelancer, I’ve seen plenty of brands fall prey to a shiny object syndrome where they want to build a feature they saw a competitor launch or simply thought was a good idea. Then, they build that idea without knowing if their audience needs or wants it — they skip usability testing. “In many companies, if the leading competitor has this feature and we think it’s a good idea, validation is done. It never works,” shares author and product leader Itamar Gilad. “You should not assume that your competitor actually knows what they are doing any more than you do. I always ask, ‘Where is your evidence?’” Usability testing is a way for your company to test and validate new ideas and features with evidence. In this post, I’ll cover what usability testing is, the main types of usability tests, and how to run a usability test — even without a big research team or budget. Table of Contents Usability testing, also called user testing, is a method of testing how real users interact with a product. The goal of usability testing is to identify usability issues and insights that can improve the product, website, or app. These tests can be moderated or unmoderated, qualitative or quantitative, and give you valuable insights into whether a product is achieving its goal. User research, also known as UX research, is a broad field that includes multiple methods to understand the needs, behaviors, and motivations of users. Usability testing is just one of many user testing techniques. A/B testing compares two variations to determine which performs better. While usability testing typically analyzes a single version of a product, UX researchers sometimes use A/B usability testing to compare two prototypes and understand why one version outperforms the other. When you’re trying to build and launch a product, running a usability test may seem daunting or expensive. But I promise it’s worth the effort — here are four reasons why. 
I’ve seen some companies get so caught up in what they think their customers want that they only find out after launching a product that it doesn’t match what they need or want. Testing a product early means companies can understand the market and create a product that’s much more likely to succeed. In any development cycle, products go through multiple rounds of iterations to reach a launch-ready product. Design teams have to make some assumptions when creating a first iteration, but usability testing can validate or disprove a concept before it moves from prototype to development. Many teams can fall prey to a “false consensus effect,” where they assume that others will agree with their point of view. Usability testing removes the bias and the designer’s lens so you can have a data-based, objective view of what’s working and what’s not. You might assume that adding a testing phase would lengthen your product development cycle. But, when companies invest in UX and usability testing early, they can shorten product development by 33 to 55%. That means you need fewer resources to fix a faulty product later on — and you can deliver value add, revenue-generating products to the market faster. Studies show that companies that invest in customer experience see 42% higher customer retention, 33% higher customer satisfaction, and 32% higher cross-selling and up-selling. Simply put, with a good experience, customers are less likely to leave and more likely to spend more over time. Not every company has a dedicated UX research team, nor needs one. While SaaS companies who ship products constantly may need a robust usability testing strategy, most companies need only periodic research. Here are some scenarios where usability testing may be beneficial. Most product designs start with wireframes. Teams then build out high-fidelity mockups and a working prototype. These steps give companies a fast, low-cost way to validate a design before actually building it. 
“Usability testing and design is so much cheaper than coding, then figuring out you created a problem and having to pivot back,” shares Katie Lukes, VP of Product Strategy at Innovatemap. “If you are making big swings and assumptions about how people work, you want to get that design in people‘s hands to see, ‘Can they navigate this experience that I’ve designed for them? Can they achieve this goal?’” If you are designing whole screens that will be brand new experiences for the user or workflows for them to go through, designing a working prototype is really important. Once you’ve validated and coded your concept, late-stage usability testing can help you catch bugs and issues before launch. If you have an existing website or app, you can run usability tests for a prototype of a new or improved feature. You can also A/B test small changes like copy, color, or other design choices to see if it improves a quantifiable outcome like a user clicking “Buy Now.” If you have an existing product but are launching to new geographies or industries, usability testing can help you see how a different audience interacts with your product. For example, educators and students may use collaboration software differently than a business team. When I conduct a usability test, I’m looking for much more detailed information than whether users had a “good” user experience or a “bad” one. To set up a successful usability test, identify the problem you are aiming to solve and create a study plan outlining exactly what you want to test and how. Here are some components that you may want to consider testing. How easily can users find their way around your product? Test the intuitiveness of your menu structure, search functionality, and overall information architecture. One of the top quantitative questions you need to answer is, can users accomplish a key task without frustration? For instance, what percentage of users can place an order on an ecommerce site? 
How long does it take them to do it on average? Identify any common barriers. Moderated usability testing is a great opportunity to uncover emotions users feel while using your product. A simple task completion percentage won’t tell you whether users were frustrated or happy completing a task and why. Are the words on your website or app clear, concise, and valuable to users? Evaluate the clarity, readability, and relevance of your content. On my website, I want to know if all the features are working as intended. Assess the performance of key functions and identify any technical issues or bugs. Look at whether your product is fast and responsive, performing well across different devices, screens, and browsers. Color, typography, and layout aren’t just for aesthetic appeal. Each design choice affects the user experience. A cluttered interface, for example, can lead to confusion about where to turn or what to do next. Can all users, including people with disabilities, effectively use your product? Test your product’s compatibility with assistive technologies and any applicable accessibility guidelines. Usability testing has a real business impact for companies who take the time to invest in it. Take inspiration from these three usability testing examples. Like many of you, I can’t resist Panera’s baguettes, salads, and addictive green tea. Ordering myself lunch on their app or kiosk is typically seamless. However, Panera realized that an administrative assistant ordering lunch for a large group needed a different experience than their typical customer. They set out to redesign their ordering website for catering customers. With a prototype built in Figma, Panera sent out unmoderated tests through UserTesting to test perceptions and usability of the order setup and checkout processes. 
Here are the improvements they made as a result of the insights: Before launching its partners program, Shopify wanted to design an experience that would allow merchants to connect with experts to support their businesses. First, Shopify conducted broad user research, including moderated interviews, card sorting, and tree sorting, to discover which information would be most important to Shopify merchants. Once they had a prototype of a Shopify expert profile, the brand ran a moderated usability test with current merchants. In addition to asking participants to complete general tasks, they asked open-ended questions like, “What information is helping you determine if an Expert is a good fit for your needs?” As a result: Contactless card company V1CE knew its website was driving low engagement — but they didn’t know why. Working with agency Credo and Hotjar, they tested through heat maps and user recordings to analyze how people interacted with the site. Here’s the impact of the studies: There are many different types of usability tests, including moderated vs. unmoderated, remote vs. in-person, and qualitative vs. quantitative. Here are three types to consider, as well as the pros and cons of each. Have you ever been approached by someone with a tablet asking you to review a product? This informal method involves approaching random people in a public space for a short in-person test. It's quick, cost-effective, and can provide immediate feedback. You may want to include a couple of qualifying questions to ensure they fit your target demographic. I’d also recommend incentivizing participation with a small gift card or other reward. Moderated usability testing is a method where a facilitator (either remote or in-person) guides participants through a study. 
For example, a moderator may ask, “Where would you expect to find information about shipping?” or “Can you try to contact customer service through the website?” “Moderated tests are wonderful for answering big questions and getting deep into a person's experience. You can observe them, have them think out loud, and ask them follow-up questions as you go,” explains Lukes. On the downside, moderated usability testing is slower and more expensive because you need to pay people for their time. But it can help you find the reason behind a problem. Observing people using your product first-hand, observing facial expressions, and asking follow-up questions gives you deep insights. Another popular way to test a product is through unmoderated usability testing, where a company sets up an on-demand testing experience that they can send out at scale. These can range in formatting from a simple task completion to heatmap tracking, or surveys. Sites like UserTesting offer brands a relatively quick, inexpensive way to run usability tests. These tests can gather data quickly and are best for answering set questions like design or navigation choices. Running a usability test for the first time? Here’s how to get started. First, decide which question you’re trying to answer. Use a problem statement to frame your goal, for example: Users struggle to complete checkout on our e-commerce site, leading to high cart abandonment. This usability test will identify friction points and opportunities to improve the experience. “You should know exactly the blanks you need to fill in, because then you know the questions you need to ask in interviews,” says Lukes. “Get those goals laid out first, then work your way backward into the questions and the prototype.” Next, determine which part of your product or website you want to test and which tasks you want your participants to complete. Set clear criteria to determine success for each task. 
Next, choose the best usability testing method for your study from those I explained above. In the early stages, moderated tests are often best. In later stages, unmoderated or guerilla testing is better. Let’s be honest: A lot of this depends on your resources. If you have an in-house UX research team or the budget to hire an agency, you can lean on them to choose a method and set up the study. But if you’re a marketing manager or small business owner with fewer resources, you may not be able to run robust studies. In that case: “If you have little to no budget, run quick guerilla tests yourself to get some fast and inexpensive results,” recommends Lukes. “You can also get some users on a Zoom call and walk them through a design, even if it's just a wireframe. Even that much feedback is a form of usability testing that is less expensive, and you can at least start with some insights to share with your team.” Next, determine which part of your product or website you want to test and which tasks you want your participants to complete. Set clear criteria to determine success for each task, for example error rate, completion rate, or time to completion. At the beginning of your script, you should include the purpose of the study, if you’ll be recording, some background on the product or website, questions to learn about the participants’ current knowledge of the product or website, and, finally, their tasks. To make your study consistent, unbiased, and scientific, moderators should follow the same script in each user session. During your usability study, the moderator has to remain neutral, carefully guiding the participants through the tasks while strictly following the script. Whoever on your team is best at staying neutral, not giving in to social pressure, and making participants feel comfortable while pushing them to complete the tasks should be your moderator. Note-taking or recording insights during the study is also just as important. 
If there’s no recorded data, you can’t extract any insights that’ll prove or disprove your hypothesis. Your team’s most attentive listener should be your note-taker during the study. Screening and recruiting the right participants is the hardest part of usability testing. Your participants should closely resemble your user base or be current customers whenever possible. To recruit the ideal participants for your study, create the most detailed and specific persona as you possibly can and screen potential participants to make sure they fit. Incentivize them to participate with a gift card or another monetary reward. While you can recruit and screen participants yourself, it’s often better to use an agency or usability testing service. During the actual study, you should ask your participants to complete one task at a time, without your help or guidance. If the participant asks you how to do something, don’t say anything. You want to see how long it takes users to figure out your interface. Asking participants to “think out loud” is also an effective tactic — you’ll know what’s going through a user’s head when they interact with your product or website. After they complete each task, ask for their feedback, like if they expected to see what they just saw, if they would’ve completed the task if it wasn’t a test, if they would recommend your product to a friend, and what they would change about it. This qualitative data can pinpoint more pros and cons of your design. You’ll collect a ton of qualitative and quantitative data during your study. Analyzing it will help you discover patterns of problems, gauge the severity of each usability issue, and provide design recommendations to the engineering team. When you analyze your data, make sure to pay attention to both the users’ performance and their feelings about the product. Break out your data into visualizations and share it with all relevant stakeholders. 
After extracting insights from your data, report the main takeaways and lay out the next steps for improving your product or website’s design and the enhancements you expect to see during the next round of testing. Include everyone in your reporting — from executives to designers to developers — so they can see the evidence and make changes accordingly. Need inspiration for your usability test script? These 15 tried-and-true questions can uncover user insights, friction in your product flows, and opportunities for improvement. I’ve learned first-hand that it takes a cultural shift for a company to stop chasing shiny objects and instead slow down and ask users what they want. At the end of the day, usability testing helps you move beyond assumptions, validate ideas with real user feedback, and build products that people actually want to use. And the best part? You don’t need a massive budget or a dedicated research team to get started. With even small usability tests, you can create better user experiences, shorten development cycles, and improve customer retention. So, before you roll out your next big idea, ask yourself: What do your users think? Editor's note: This post was originally published in August 2018 and has been updated for comprehensiveness.What is usability testing?

Usability Testing vs. User Research

Usability Testing vs. A/B Testing

Benefits of Usability Testing

1. Helps you understand your users’ wants and needs.

2. Helps you make data-based decisions.

3. Creates shorter product development cycles.

4. Leads to higher customer retention, cross-sell, and upsell rates.

When to Do Usability Testing

Prototyping: Launching a New Product, App, or Website

Pre-Launch: Late-Stage Testing

Post-Launch: Improving an Existing Product

Expanding to New Markets

What to Test for Usability Testing

Navigation

Task Completion

User Sentiment

Content

Performance and Functionality

Visual Design

Accessibility

Usability Testing Examples & Case Studies

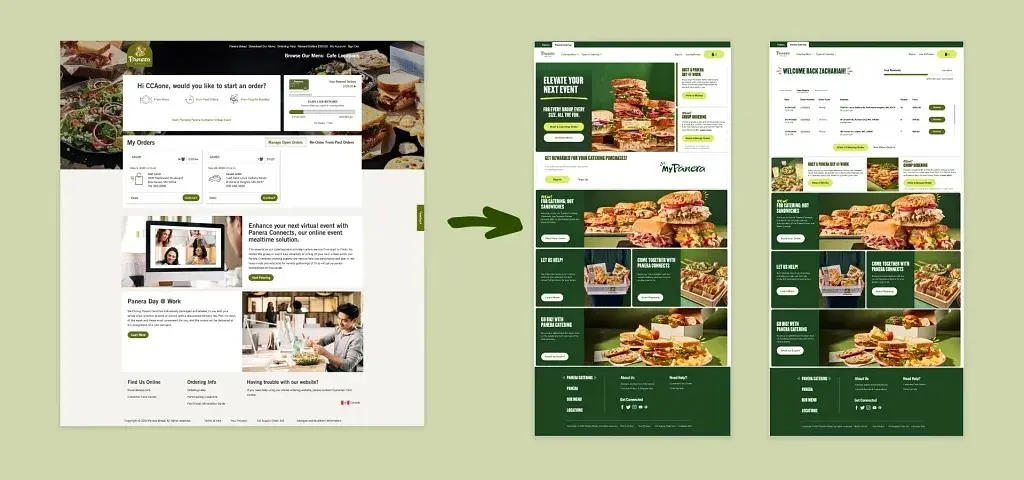

1. Unmoderated Usability Tests: Panera Bread Catering Site

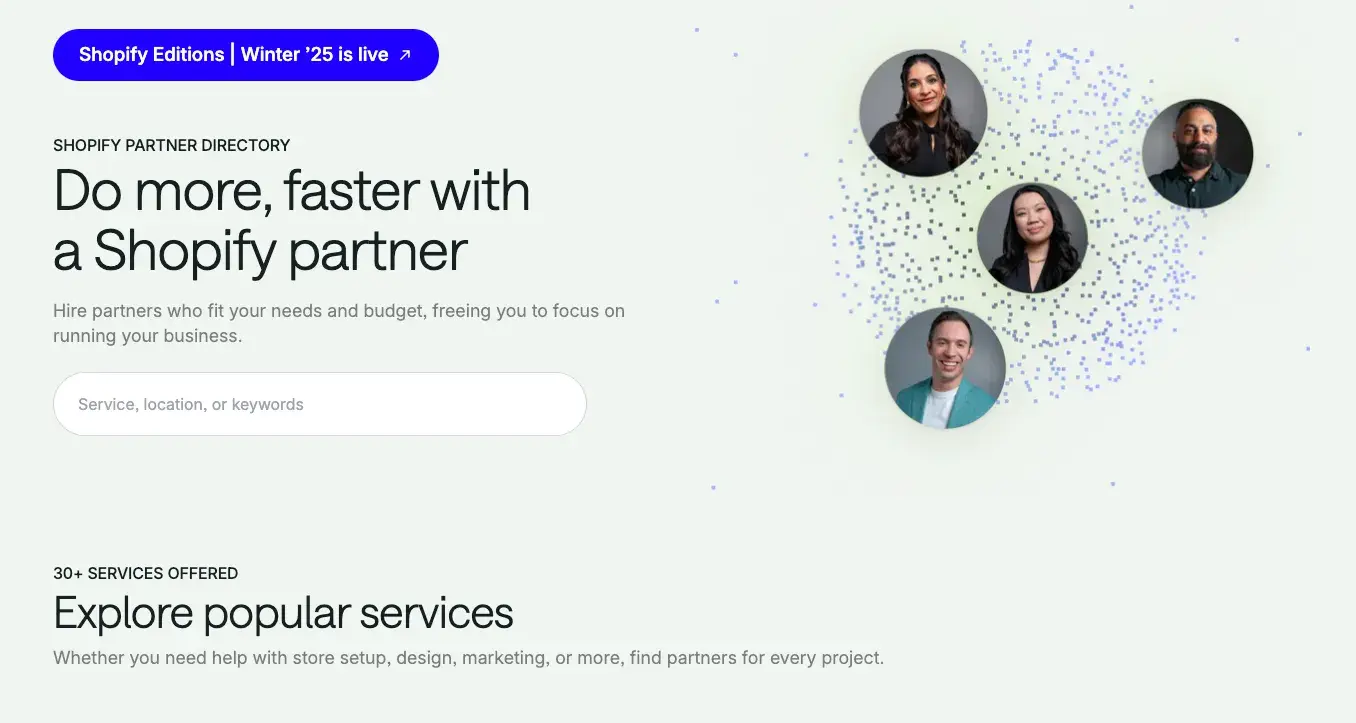

2. Moderated Usability Tests: Shopify Partners Site

2. Unmoderated Usability Tests and Heatmaps: V1CE Website Optimization

Types of Usability Tests

1. Hallway/Guerilla Usability Testing

2. Moderated Usability Testing

3. Unmoderated Usability Testing

How to Conduct a Usability Test

1. Frame the problem.

2. Pick a focus area.

3. Choose a usability testing method.

3. Choose your study tasks and questions.

4. Write a study plan and script.

5. Delegate roles.

6. Find your participants.

7. Conduct the study.

8. Analyze your data.

9. Report your findings.

15 Usability Testing Questions

Stop Guessing, Start Validating with Usability Testing
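One practical footnote on the success criteria mentioned in the how-to steps (error rate, completion rate, and time to completion): they come down to simple arithmetic, so you can tally them in a spreadsheet or a few lines of code. Here is a minimal Python sketch. The `TaskAttempt` record, the `summarize` helper, and the sample numbers are all made up for illustration, and “error rate” is assumed here to mean average errors per attempt. Your team may define it differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record shape)."""
    participant: str
    task: str
    completed: bool
    errors: int      # wrong clicks, dead ends, etc., as counted by the note-taker
    seconds: float   # time from task start until completion or abandonment


def summarize(attempts: list[TaskAttempt], task: str) -> dict[str, float]:
    """Return completion rate, errors per attempt, and mean time for one task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"no attempts recorded for task {task!r}")
    finished = [a for a in rows if a.completed]
    return {
        "completion_rate": len(finished) / len(rows),
        "errors_per_attempt": sum(a.errors for a in rows) / len(rows),
        # Time to completion only counts participants who actually finished.
        "mean_seconds_to_complete": (
            mean(a.seconds for a in finished) if finished else float("nan")
        ),
    }


# Made-up data: five participants attempting a checkout task.
attempts = [
    TaskAttempt("P1", "checkout", True, 0, 95.0),
    TaskAttempt("P2", "checkout", True, 2, 140.0),
    TaskAttempt("P3", "checkout", False, 4, 300.0),
    TaskAttempt("P4", "checkout", True, 1, 110.0),
    TaskAttempt("P5", "checkout", False, 3, 280.0),
]

summary = summarize(attempts, "checkout")
print(summary)
```

With this sample data, three of five participants finish, so the completion rate is 60%. Numbers like these tell you what happened, not why, so pair them with your notes on what participants said and did.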
