This year’s physics Nobel Prize went to pioneers in quantum tech. Here’s how their work could change the world.

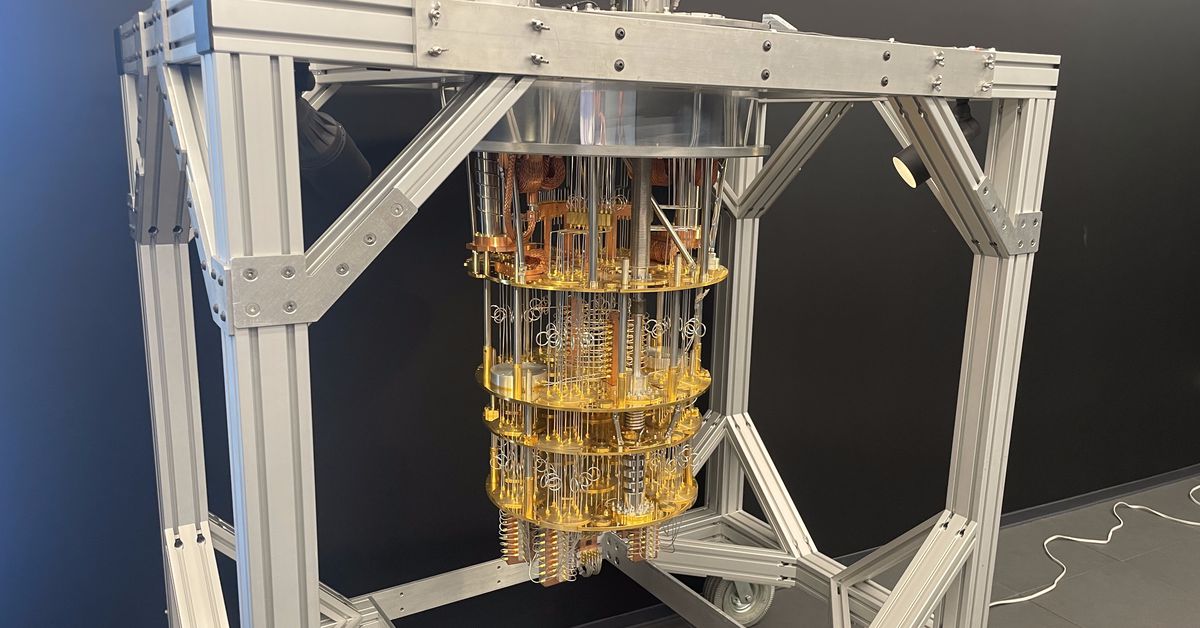

The inside of an IBM System One quantum computer. | Bryan Walsh/Vox

A brief guide to the weird and revolutionary world of quantum computers.

Quantum computing is one of those technologies of the future — like nuclear fusion power or self-driving cars — that seems as potentially transformative as it is perpetually just out of reach. If researchers are able to develop quantum machines that are stable and reliable, it could jump-start the pace of computing, which has slowed down as Moore’s Law — the long-accurate prediction by Intel co-founder Gordon Moore that computer chips would get faster and cheaper — seems to be coming to an end. But the path to a practical quantum computer has been long and difficult, combining some of the hardest problems in quantum science with the hardest problems in computing hardware.

Just how long the road to quantum computing has been, and how important it will be to reach the destination, became clear again on Tuesday morning when the 2022 Nobel Prize in physics was awarded to three researchers whose work had “laid the foundation for a new era of quantum technology,” as the Nobel Committee for Physics put it.

John F. Clauser, an American, showed in 1972 that pairs of entangled photons violate a test known as Bell’s inequality, underscoring that the particles behave like a single unit even when separated by great distances. Alain Aspect of the University of Paris furthered that work a decade later, and in 1998, Austrian physicist Anton Zeilinger explored entanglement for three or more particles. Together, as the Nobel Committee put it, they paved the way for “new technology based upon quantum information.”

But a real, workable quantum computer will take much more than theory, as I learned on a visit earlier this year to the small Westchester County community of Yorktown Heights. There, amid the rolling hills and old farmhouses, sits the Thomas J. Watson Research Center, the Eero Saarinen-designed, 1960s Jet Age-era headquarters for IBM Research.

Deep inside that building, through endless corridors and security gates guarded by iris scanners, is where the company’s scientists are hard at work developing what IBM director of research Dario Gil told me is “the next branch of computing”: quantum computers.

I was at the Watson Center to preview IBM’s updated technical roadmap for achieving large-scale, practical quantum computing. This involved a great deal of talk about “qubit count,” “quantum coherence,” “error mitigation,” “software orchestration” and other topics you’d need to be an electrical engineer with a background in computer science and a familiarity with quantum mechanics to fully follow.

I am not any of those things, but I have watched the quantum computing space long enough to know that the work being done here by IBM researchers, along with their competitors at companies like Google and Microsoft and countless startups around the world, stands to drive the next great leap in computing. And given that computing is a “horizontal technology that touches everything,” as Gil told me, that leap will have major implications for progress in everything from cybersecurity to artificial intelligence to designing better batteries.

Provided, of course, they can actually make these things work.

Entering the quantum realm

The best way to understand a quantum computer — short of setting aside several years for grad school at MIT or Caltech — is to compare it to the kind of machine I’m typing this piece on: a classical computer.

My MacBook Air runs on an M1 chip, which is packed with 16 billion transistors. Each of those transistors can represent either the “1” or “0” of binary information at a single time — a bit. The sheer number of transistors is what gives the machine its computing power.

Sixteen billion transistors packed onto a 120.5 sq. mm chip is a lot: TRADIC, one of the first transistorized computers, had fewer than 800. The semiconductor industry’s ability to engineer ever more transistors onto a chip, the trend Moore forecast in the law that bears his name, is what has made possible the exponential growth of computing power, which in turn has made possible pretty much everything else.

The exterior of an IBM System One quantum computer, as seen at the Thomas J. Watson Research Center. | Bryan Walsh/Vox

But there are things classical computers can’t do and will never be able to do, no matter how many transistors get stuffed onto a square of silicon in a Taiwanese semiconductor fabrication plant (or “fab,” in industry lingo). And that’s where the unique and frankly weird properties of quantum computers come in.

Instead of bits, quantum computers process information using qubits, which can represent “0” and “1” simultaneously. How do they do that? You’re straining my level of expertise here, but essentially qubits make use of the quantum mechanical phenomenon known as “superposition,” whereby the properties of some subatomic particles are not defined until they’re measured. Think of Schrödinger’s cat, simultaneously dead and alive until you open its box.
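For readers who want to see the idea a little more concretely, here is a minimal Python sketch. It is an illustration only, not how IBM or anyone else actually programs quantum hardware. It treats one qubit as a pair of numbers called amplitudes, and the chance of reading “0” or “1” when you measure is the square of each amplitude’s size. The variable and function names (`alpha`, `beta`, `probabilities`, `measure`) are hypothetical, chosen for this example.

```python
import math
import random

# A single qubit in an equal superposition of "0" and "1".
# Each amplitude is a complex number; their squared magnitudes must sum to 1.
alpha = complex(1 / math.sqrt(2), 0)  # amplitude for "0"
beta = complex(1 / math.sqrt(2), 0)   # amplitude for "1"


def probabilities(a: complex, b: complex) -> tuple[float, float]:
    """Chance of reading 0 or 1 when the qubit is measured (the Born rule)."""
    return abs(a) ** 2, abs(b) ** 2


def measure(a: complex, b: complex, rng: random.Random) -> int:
    """Simulate one measurement: the superposition 'collapses' to 0 or 1."""
    p0, _ = probabilities(a, b)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    p0, p1 = probabilities(alpha, beta)
    print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")

    # Measure many freshly prepared qubits; roughly half come out as 1.
    rng = random.Random(42)
    results = [measure(alpha, beta, rng) for _ in range(10_000)]
    print("fraction of 1s:", sum(results) / len(results))
```

Before it is measured, the qubit is described by both amplitudes at once; each measurement gives a single definite bit, and only over many runs do the probabilities show up.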

A single qubit is cute, but things get really exciting when you start adding more. Classical computing power increases linearly with the addition of each transistor, but a quantum computer’s power increases exponentially with the addition of each new reliable qubit. That’s because of another quantum mechanical property called “entanglement,” whereby the qubits’ states become linked, so the probabilities for each qubit depend on the other qubits in the system.
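One common way to see where that exponential comes from is to count how many numbers an ordinary computer would need just to write down what n qubits are doing. The Python sketch below is illustrative, not any company’s tooling; the function names and the assumption of 16 bytes per amplitude (one double-precision complex number) are mine. It also checks whether a two-qubit state can be split into two independent qubits, which an entangled pair cannot. Note that it measures the size of the state’s description, not the speed of any real quantum machine.

```python
# How much information does it take to describe n qubits on a classical machine?
# A general n-qubit state is a list of 2**n complex amplitudes.

BYTES_PER_AMPLITUDE = 16  # assumption: one double-precision complex number each


def amplitudes_needed(n_qubits: int) -> int:
    """Length of the state vector for n qubits; it doubles with every added qubit."""
    return 2 ** n_qubits


def kron(a: list[complex], b: list[complex]) -> list[complex]:
    """Tensor (Kronecker) product: the joint state of two independent registers."""
    return [x * y for x in a for y in b]


def is_product_state(state: list[complex], tol: float = 1e-12) -> bool:
    """A two-qubit state [a, b, c, d] splits into two independent qubits
    exactly when a*d == b*c; if it doesn't, the qubits are entangled."""
    a, b, c, d = state
    return abs(a * d - b * c) < tol


def bell_state() -> list[complex]:
    """(|00> + |11>) / sqrt(2): amplitudes for 00, 01, 10, 11."""
    s = 1 / 2 ** 0.5
    return [s, 0, 0, s]


if __name__ == "__main__":
    for n in (1, 2, 10, 50, 127):
        size = amplitudes_needed(n)
        print(f"{n:>3} qubits -> {size:.3e} amplitudes, "
              f"~{size * BYTES_PER_AMPLITUDE:.3e} bytes")

    plus = [1 / 2 ** 0.5, 1 / 2 ** 0.5]        # one qubit in equal superposition
    print(is_product_state(kron(plus, plus)))  # True: two independent qubits
    print(is_product_state(bell_state()))      # False: an entangled pair
```

Under that 16-byte assumption, 50 qubits already need roughly 18 quadrillion bytes to write down exactly, and each additional qubit doubles the figure.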

All of which means that the upper limit of a workable quantum computer’s power far exceeds what would be possible in classical computing.

So quantum computers could theoretically solve problems that a classical computer, no matter how powerful, never could. What kind of problems? How about the fundamental nature of material reality, which, after all, ultimately runs on quantum mechanics, not classical mechanics? (Sorry, Newton.) “Quantum computers simulate problems that we find in nature and in chemistry,” said Jay Gambetta, IBM’s vice president of quantum computing.

Quantum computers could simulate the properties of a theoretical battery to help design one that is far more efficient and powerful than today’s versions. They could untangle complex logistical problems, discover optimal delivery routes, or enhance forecasts for climate science.

On the security side, quantum computers could break widely used encryption methods, potentially rendering everything from emails to financial data to national secrets insecure, which is why the race for quantum supremacy is also an international competition, one that the Chinese government is pouring billions into. Those concerns helped prompt the White House earlier this month to release a new memorandum aimed at securing national leadership in quantum computing and preparing the country for quantum-assisted cybersecurity threats.

Beyond the security issues, the potential financial upsides could be significant. Companies are already offering early quantum-computing services via the cloud for clients like Exxon Mobil and the Spanish bank BBVA. While the global quantum-computing market was worth less than $500 million in 2020, International Data Corporation projects that it will reach $8.6 billion in revenue by 2027, with more than $16 billion in investments.

But none of that will be possible unless researchers can do the hard engineering work of turning quantum computing from what is still largely a scientific experiment into a reliable industry.

The cold room

Inside the Watson building, Jerry Chow, who directs IBM’s experimental quantum computer center, opened a 9-foot glass cube to show me something that looked like a chandelier made out of gold: IBM’s Quantum System One. Much of the chandelier is essentially a high-tech fridge, with coils that carry superfluids capable of cooling the hardware to a hundredth of a degree Celsius above absolute zero. That’s colder, Chow told me, than outer space.

Refrigeration is key to making IBM’s quantum computers work, and it also demonstrates why doing so is such an engineering challenge. While quantum computers are potentially far more powerful than their classical counterparts, they’re also far, far more finicky.

Remember what I said about the quantum properties of superposition and entanglement? While qubits can do things a mere bit could never dream of, the slightest variation in temperature or noise or radiation can cause them to lose those properties through something called decoherence.

That fancy refrigeration is designed to keep the system’s qubits from decohering before the computer has completed its calculations. The very earliest superconducting qubits lost coherence in less than a nanosecond, while today IBM’s most advanced quantum computers can maintain coherence for as long as 400 microseconds. (Each second contains 1 million microseconds.)

The challenge IBM and other companies face is engineering quantum computers that are less error-prone while “scaling the systems beyond thousands or even tens of thousands of qubits to perhaps millions of them,” Chow said.

That could be years off. Last year, IBM introduced the Eagle, a 127-qubit processor, and in its new technical roadmap, it aims to unveil a 433-qubit processor called the Osprey later this year, and a 4,000-plus qubit computer by 2025. By that time, quantum computing could move beyond the experimentation phase, IBM CEO Arvind Krishna told reporters at a press event earlier this month.

Plenty of experts are skeptical that IBM or any of its competitors will ever get there, raising the possibility that the engineering problems presented by quantum computers are simply too hard for the systems to ever be truly reliable. “What’s happened over the last decade is that there have been a tremendous number of claims about the more immediate things you can do with a quantum computer, like solve all these machine learning problems,” Scott Aaronson, a quantum computing expert at the University of Texas, told me last year. “But these claims are about 90 percent bullshit.” To fulfill that promise, “you’re going to need some revolutionary development.”

In an increasingly digital world, further progress will depend on our ability to get ever more out of the computers we create. And that will depend on the work of researchers like Chow and his colleagues, toiling away in windowless labs to achieve a revolutionary new development around some of the hardest problems in computer engineering — and along the way, trying to build the future.

A version of this story was initially published in the Future Perfect newsletter.

Update, October 4, 4 pm ET: This story was originally published on May 24 and has been updated to reflect that Clauser, Aspect, and Zeilinger were awarded the 2022 Nobel Prize in physics.
