Google Helpfulness Signals Might Change – Why It’s Not Enough via @sejournal, @martinibuster

Google might update the helpful content signals so that new pages can rank. Here is why that may not be enough. The post Google Helpfulness Signals Might Change – Why It’s Not Enough appeared first on Search Engine Journal.

Google’s John Mueller indicated the possibility of changes to sitewide helpful content signals so that new pages may be allowed to rank. But there is reason to believe that even if that change goes through, it may not be enough to help.

Helpful Content Signals

Google’s Helpful Content Signals (aka the Helpful Content Update, or HCU) were a site-wide signal when they launched in 2022. That meant an entire site could be classified as unhelpful and become unable to rank, regardless of whether some of its pages were helpful.

Recently, the signals associated with the Helpful Content System were absorbed into Google’s core ranking algorithm, which generally changed them to page-level signals, with a caveat.

Google’s documentation advises:

“Our core ranking systems are primarily designed to work on the page level, using a variety of signals and systems to understand the helpfulness of individual pages. We do have some site-wide signals that are also considered.”

There are two important takeaways:

1. There is no longer a single system for helpfulness. It’s now a collection of signals within the core ranking algorithm.
2. The signals are page-level, but there are site-wide signals that can impact overall rankings.

Some publishers have tweeted that the site-wide effect is hurting the ability of new helpful pages to rank, and John Mueller offered some hope.

If Google follows through on lightening the sitewide helpfulness signals so that individual pages can rank, there is reason to believe the change may not help many of the websites that publishers and SEOs believe are suffering from those signals.

Publishers Express Frustration With Sitewide Algorithm Effects

Someone on X (formerly Twitter) shared:

“It’s frustrating when new content is also being penalized without having a chance to gather positive user signals. I publish something it goes straight to page 4 and stays there, regardless of if there are any articles out on the location.”

Someone else pointed out that if helpfulness signals are page-level, then in theory the better (helpful) pages should begin ranking, but that isn’t happening.

John Mueller Offers Hope

Google’s John Mueller responded to a question about sitewide helpfulness signals suppressing the rankings of new pages created to be helpful, and later indicated that there may be a change to the way helpfulness signals are applied sitewide.

Mueller tweeted:

“Yes, and I imagine for most sites strongly affected, the effects will be site-wide for the time being, and it will take until the next update to see similar strong effects (assuming the new state of the site is significantly better than before).”

Possible Change To Helpfulness Signals

Mueller followed up his tweet by saying that the search ranking team is working on a way to surface high-quality pages from sites that may carry strong negative sitewide signals indicative of unhelpful content, which could provide relief to some sites that are burdened by those signals.

He tweeted:

“I can’t make any promises, but the team working on this is explicitly evaluating how sites can / will improve in Search for the next update. It would be great to show more users the content that folks have worked hard on, and where sites have taken helpfulness to heart.”

Why Changes To Sitewide Signals May Not Be Enough

Google Search Console tells publishers when they’ve received a manual action. But it doesn’t tell publishers when their sites lost rankings due to algorithmic issues like helpfulness signals. Publishers and SEOs don’t and cannot “know” whether their sites are affected by helpfulness signals. The core ranking algorithm alone contains hundreds of signals, so it’s important to keep an open mind about what may be affecting search visibility after an update.

Here are five examples of changes during a broad core update that can affect rankings:

1. The way a query is understood could have changed, which affects what kinds of sites are able to rank.
2. Quality signals may have changed.
3. Rankings may change in response to search trends.
4. A site may lose rankings because a competitor improved its site.
5. Infrastructure may have changed to accommodate more AI on the back end.

A lot of things can influence rankings before, during, and after a core algorithm update. If rankings don’t improve, then it may be time to consider that a knowledge gap is standing in the way of a solution.

Examples Of Getting It Wrong

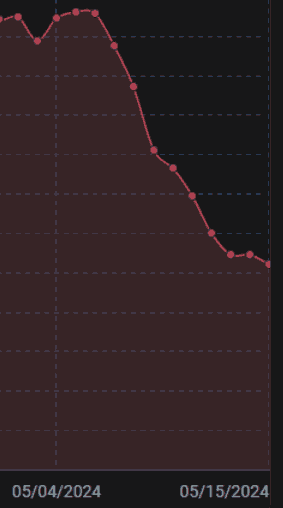

For example, a publisher who recently lost rankings correlated the date of their rankings collapse with the announcement of the Site Reputation Abuse update. It’s a reasonable assumption that if rankings drop on the same date as an update, then the update is the cause.

Here’s the tweet:

“@searchliaison feeling a bit lost here. Judging by the timing, we got hit by the Reputation Abuse algorithm. We don’t do coupons, or sell links, or anything else.

Very, very confused. We’ve been stable through all this and continue to re-work/remove older content that is poor.”

They posted a screenshot of the rankings collapse.

Screenshot Showing Search Visibility Collapse

SearchLiaison responded to that tweet by noting that Google is currently enforcing site reputation abuse only through manual actions, meaning there was no algorithm that could have caused the drop. Still, it’s reasonable to assume that an update that coincides with a ranking drop is related to it.

But one can never be 100% sure about the cause of a rankings drop, especially if there’s a knowledge gap about other possible reasons (like the five I listed above). This bears repeating: one cannot be certain that a specific signal is the reason for a rankings drop.

In another tweet, SearchLiaison remarked that some publishers mistakenly assumed they had an algorithmic spam action against them or were suffering from negative Helpful Content Signals.

SearchLiaison tweeted:

“I’ve looked at many sites where people have complained about losing rankings and decide they have a algorithmic spam action against them, but they don’t.

…we do have various systems that try to determine how helpful, useful and reliable individual content and sites are (and they’re not perfect, as I’ve said many times before, anticipating a chorus of “whatabouts…..” Some people who think they are impacted by this, I’ve looked at the same data they can see in Search Console and … not really.”

In the same tweet, SearchLiaison addressed a person who remarked that getting a manual action is fairer than receiving an algorithmic one, pointing out the knowledge gap that would lead someone to conclude such a thing.

He tweeted:

“…you don’t really want to think “Oh, I just wish I had a manual action, that would be so much easier.” You really don’t want your individual site coming the attention of our spam analysts. First, it’s not like manual actions are somehow instantly processed.”

The point I’m trying to make (and I have 25 years of hands-on SEO experience, so I know what I’m talking about) is to keep an open mind that maybe something else is going on that hasn’t been detected. Yes, false positives do happen, but it’s not always the case that Google is making a mistake; it could be a knowledge gap. That’s why I suspect that many people will not see a lift in rankings if Google makes it easier for new pages to rank. If that happens, keep an open mind that something else may be going on.

Featured Image by Shutterstock/Sundry Photography
