Google Updates Crawler Documentation To Fix A Typo via @sejournal, @martinibuster

Google updated official crawler documentation to fix a typo in a user agent string that could cause errors in crawler identification The post Google Updates Crawler Documentation To Fix A Typo appeared first on Search Engine Journal.

Google updated crawler documentation to fix a user agent typo that could lead to a crawler misidentification

Google has fixed a typo in their crawler documentation that inadvertently misidentified one of their crawlers.

In general, this is a minor issue but it’s a major issue for SEOs and publishers who depend on the documentation to set firewall rules.

Failure to notate the correct data could cause a website to inadvertently block a legitimate Google crawler.

Google Inspection Tool

The typo is in the section of the documentation about the Google Inspection Tool.

This is an important crawler that is sent out to a website in response to two prompts.

1. URL inspection functionality in Search Console

When a user wants to check within search console whether a webpage is indexed or to request indexing, Google’s system responds with the Google Inspection Tool crawler.

The URL inspection tool offers the following functionality:

See the status of a URL in the Google index Inspect a live URL Request indexing for a URL View a rendered version of the page View loaded resources, JavaScript output, and other information Troubleshoot a missing page Learn your canonical page2. Rich results test

This is a test for checking the validity of structured data and to see if it qualifies for an enhanced search results, also known as a rich result.

Using this test will trigger a specific crawler to fetch the webpage and analyze the structured data.

Why Crawler User Agent Typo Error is Problematic

This can become a troublesome issue for websites that are behind a paywall but whitelist specific robots, such as the Google-InspectionTool user agent.

Improper user agent identification can also be problematic if the CMS needs to block the crawler with robots.txt or a robots meta directive in order to keep Google from discovering pages it shouldn’t be looking at.

Some forum content management systems remove links to parts of the site like the user registration page, user profiles and the search function to keep bots from indexing those pages.

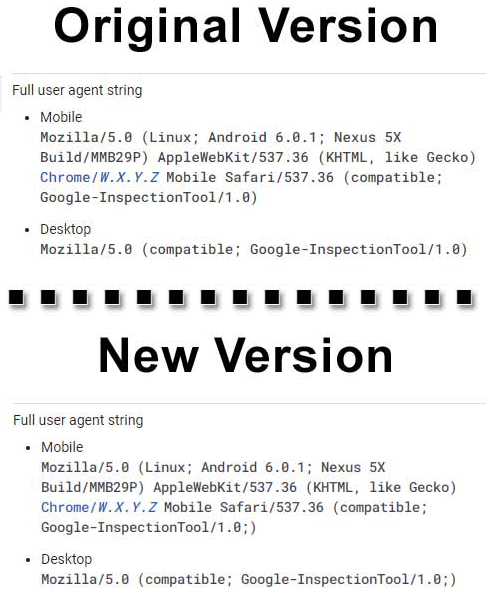

Hard To Spot User Agent Typo

The issue involved a difficult to catch typo in the user agent description.

See if you can tell the difference?

This is the answer:

Original version:

Mozilla/5.0 (compatible; Google-InspectionTool/1.0)

New version:

Mozilla/5.0 (compatible; Google-InspectionTool/1.0;)

Be sure to update relevant robots.txt, meta robots directives or CMS code if you or a client are whitelisting Google’s crawlers or blocking crawlers from certain webpages.

Compare the original version (on Internet Archive Wayback Machine) with the updated version here.

It’s a small little detail but it can make a big difference.

Featured image by Shutterstock/Nicoleta Ionescu

SEJ STAFF Roger Montti Owner - Martinibuster.com at Martinibuster.com

Roger Montti is a search marketer with over 20 years experience. I offer site audits and phone consultations. See me ...

![]()

Subscribe To Our Newsletter.

Conquer your day with daily search marketing news.

Lynk

Lynk