“I worry my employer will increase my workload”: Is it important to disclose ChatGPT usage at work?

Opt for anonymity or accountability? Nearly 70 per cent of professionals are keeping their use of ChatGPT under wraps from their bosses.

When ChatGPT was first released, Joel Yap, a marketing executive at an MNC in Singapore, was fascinated by its range of capabilities.

He began experimenting with its features, at first for personal use, but soon realised that the chatbot could simplify various work-related tasks, such as writing emails and creating content.

Since then, the 26-year-old has used it daily. “ChatGPT is sort of like my own personal assistant at work now,” he said.

However, none of Joel’s colleagues or bosses know that he has been using the artificial intelligence (AI) tool for work.

His case is not an isolated one. While about 43 per cent of professionals have embraced AI tools at their workplace, nearly 70 per cent of them are keeping their use of these tools under wraps from their bosses.

But what is driving them to do so?

Why are employees choosing to hide their use of AI tools?

ChatGPT can cut the time a worker needs to complete tasks by up to 40 per cent while raising output quality by 18 per cent. For Joel, that productivity boost is exactly the problem: he fears that if his employer knew how much of his work ChatGPT automates, his workload would increase.

I get to send out emails faster and I get ideas for marketing campaigns whenever I’m stuck. But if I am transparent about my use of ChatGPT, [I worry] my employer will increase my workload. With ChatGPT, I usually get to finish my work one or two hours earlier and just chill out for the rest of the day.

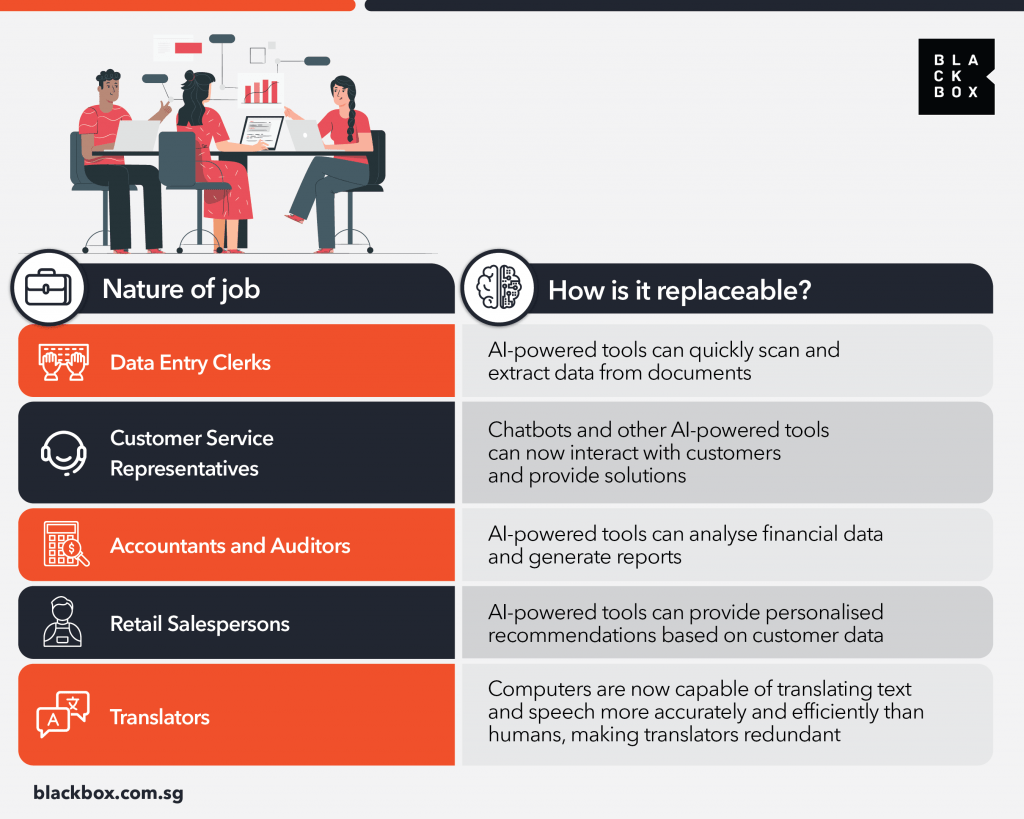

– Joel Yap, a marketing executive at an MNC Jobs that can be replaced by AI / Image Credit: Blackbox

Jobs that can be replaced by AI / Image Credit: BlackboxOn the other hand, Charlene Goh, an administrative executive at a biotech firm, says that she fears her job will be replaced by ChatGPT if she discloses her use of the AI tool to her boss. Much of her day-to-day work responsibilities are carried out by the AI tool, such as creating real-time notes during meetings and drafting documents.

Her concern is not unfounded. A recent Goldman Sachs report found that 300 million jobs around the world could be affected by AI and automation, with administrative jobs having the highest proportion of tasks that could be automated.

For some workers, the impact is already being felt: in May, about 4,000 people were laid off in the United States alone to make way for AI tools.

Employers are concerned about privacy

These concerns are legitimate reasons for keeping ChatGPT use in the workplace under wraps, but using the tool secretly at work is still unwise.

What many employees do not realise is that when they ask the chatbot to summarise important memos or check their work for errors, anything they share with ChatGPT could be used to train the system and might even surface in its responses to other users.

In May, Samsung banned the use of ChatGPT in its workplace after employees inadvertently leaked sensitive information to the AI tool.

One employee pasted confidential source code into the chat to check for errors, another asked it to optimise code, and a third fed a recorded meeting into ChatGPT and asked it to generate minutes.

Samsung is concerned that confidential data fed to the AI tool may be stored on external servers, where it is difficult to retrieve and delete, and could end up being disclosed to other users.

Samsung’s employees are not alone. Many other workers unknowingly leak sensitive information to ChatGPT: sensitive data makes up 11 per cent of what employees paste into the chatbot, and the average company leaks sensitive data to ChatGPT hundreds of times each week.

As a result, many companies across Singapore consider it crucial for employees to disclose their use of AI tools. For Singapore-based car marketplace Carro, safeguarding its proprietary and sensitive information, particularly customer information, is of paramount importance.

Carro sits on a trove of data from hundreds of thousands of cars we inspect annually, including customer engagements and car reviews. Disclosure aids us in exercising vigilant protection of customer information.

– Chua Zi Yong, Chief Operating Officer, Carro

AI hallucinations and copyright issues pose a threat to businesses

Beyond privacy, AI tools like ChatGPT also raise plagiarism and copyright concerns for companies.

For instance, Kelvin Lam, the Chief Operating Officer of YouTrip, shared that the company takes immense pride in its ability to connect with its users through its hyper-localised campaigns and content.

Generative AI enables the company to create content within a matter of seconds based on large datasets of text, which include a variety of original and generated content, as well as different writing styles.

However, because these tools cannot judge the originality of what they produce or fully replicate a human touch, plagiarism, copyright infringement and a lack of personality in the resulting content become real concerns. “If left unchecked, it can cause users distrust,” Kelvin adds.

Kelvin Lam, Chief Operating Officer, YouTrip / Image Credit: Slack

Moreover, AI tools can sometimes “hallucinate”, generating responses that sound plausible but are factually incorrect or unrelated to the given context, which can affect the quality of employees’ work.

Employees should be transparent about their use of AI tools so that the team is aware of how these tools are being used. AI tools are not infallible and can sometimes provide biased or inaccurate results.

By having the open communication and trust in each other to disclose the role that AI tools played in their work, it allows the team to make informed decisions and assess the risk of potential misinformation and biases of generative AI results.

– Kelvin Lam, Chief Operating Officer, YouTrip

Carro’s Zi Yong echoes this sentiment. The company is especially vigilant about preventing the use of external tools that may produce inaccurate outcomes, which could compromise its customer experience and operational excellence.

“Given the nascent stage of many AI tools, addressing issues like hallucination is vital. To this end, our IT team rigorously pre-qualifies tools, affirming their reliability and aligning with our commitment to maintaining high standards,” he explains.

What are companies doing to mitigate risks associated with AI tools?

That said, the benefits of chatbots like ChatGPT ultimately outweigh the downsides of using them.

Although employee transparency can mitigate the risks associated with AI, companies are also looking for other ways to use the technology without compromising their business’s privacy or operational efficiency.

Chua Zi Yong, Chief Operating Officer, Carro / Image Credit: Carro

For instance, Carro has undertaken a “phased roll out strategy for ChatGPT usage amongst its employees” to prevent any inadvertent sharing of customer or sensitive information with external systems.

Alongside this strategy, the company is developing its own internal chatbot, CarroGPT, an in-house replacement for ChatGPT designed to empower staff by streamlining their workflows and improving efficiency.

At Carro, we see AI as a co-pilot to our dedicated teams. Our belief is that AI empowers our workforce to operate with greater efficiency, effectiveness, and productivity.

In essence, our stance on AI tools is rooted in using technology as a driving force to enable our people and propel our business toward sustainable success.

– Chua Zi Yong, Chief Operating Officer, Carro

While YouTrip has yet to establish a rigid policy on employees’ use of ChatGPT at work, the company regularly reminds staff about specific areas, such as the ethical use of AI tools for content creation. The aim is to ensure that employees use ChatGPT only as a point of reference or inspiration, reducing the risk of plagiarism and copyright infringement.

“We require team members to review one another’s work to ensure originality with the style of writing that complies with YouTrip’s brand guidelines,” says Kelvin.

“Our employees are also expected to disclose the role that AI tools played in the content creation process, which ensures that while employees are allowed to leverage generative AI tools for inspiration, employees continue to create original content that is credible and relevant to our users.”

AI is an essential driver of progress

Ultimately, YouTrip views AI and machine learning as essential drivers of progress, with the capability to revolutionise the way we work and live.

Besides creating hyper-localised campaigns, the company also leverages AI tools to detect fraud in real time, helping both businesses and customers to prevent financial losses.

As a leading fintech startup, we are big enthusiasts for technologies that push the boundaries of innovation. By harnessing the potential of AI, machine learning, and other emerging technologies, it propels us further on our journey as frontrunners in the tech domain.

– Kelvin Lam, Chief Operating Officer, YouTrip

Sharing this view, Zi Yong explains that AI plays an instrumental role in various aspects of Carro’s operations, including pricing and inspecting its cars.

But for companies to harness AI tools fully while mitigating the risks that come with them, employee disclosure is of utmost importance.

He sums up Carro’s approach to AI tools like ChatGPT as one of “balanced enthusiasm and thoughtful consideration”. “We are committed to leveraging AI tools to their fullest potential, while also safeguarding the quality and reliability of our services,” he adds.

Featured Image Credit: DPA

FrankLin
