Is AI Sentient? Could It Ever Be? Experts Weigh In

Right now, AI does what we want. But what if it gets to the point where it only does what it wants?

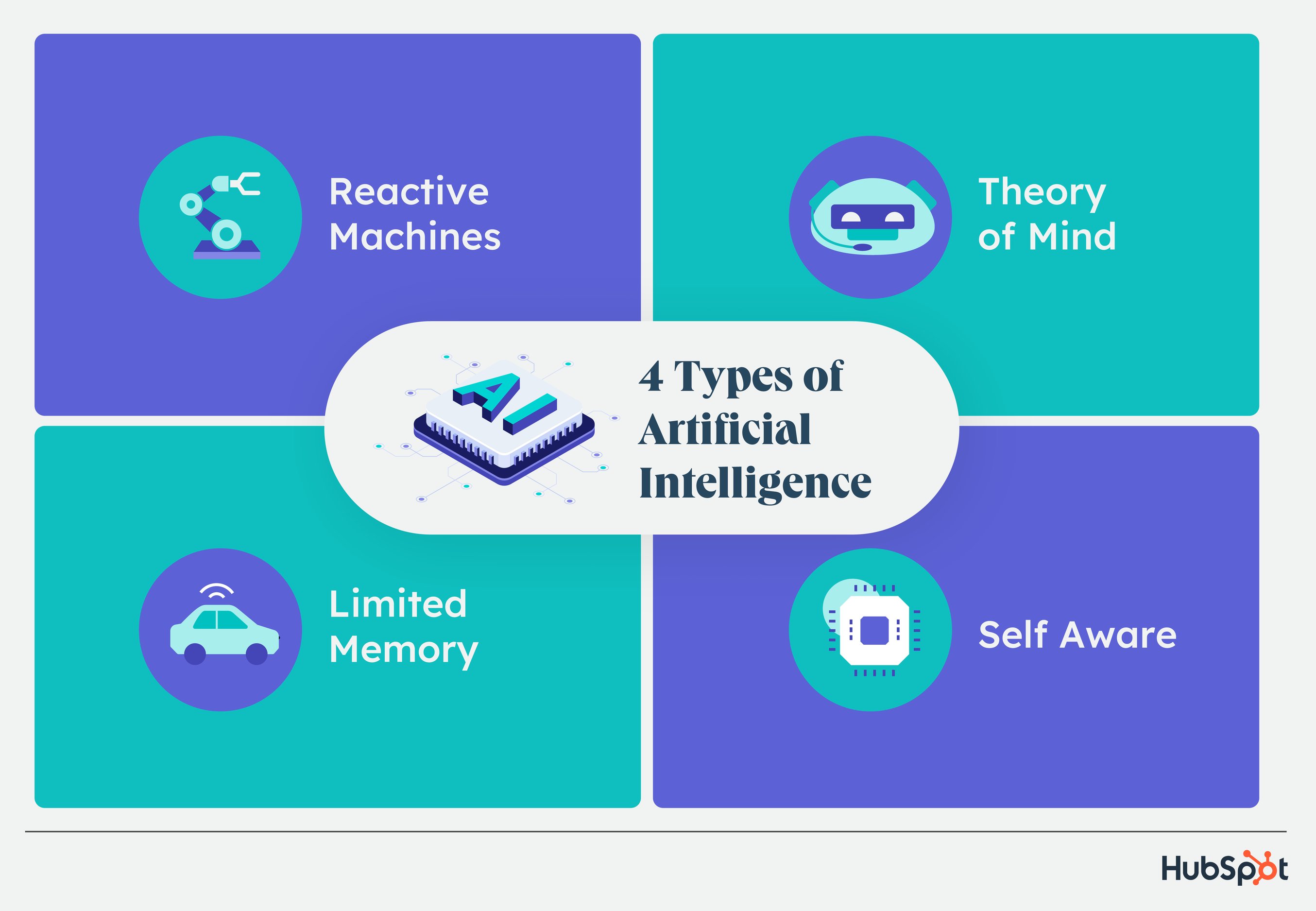

The current AI gold rush might leave many wondering what AI will look like as it develops. In this post, we’ll discuss sentient AI, what it could look like, and whether we should be worried about a world where robots are conscious (with scientific insight, too).

What is sentient AI?

Sentient AI can feel and have experiences just as humans can. It is emotionally intelligent and conscious, and it can perceive the world around it and turn those perceptions into emotions. In short, sentient AI thinks, feels, and perceives like a person.

M3GAN, the robot from the movie of the same name, is a great example of sentient AI. She has emotions and can perceive and understand the feelings of those around her, so much so that she builds a friendship with a human girl and eventually becomes murderously protective of her.

Concerns About Sentient AI

Many of the main concerns and questions about sentient AI come from science fiction movies, books, and TV shows that paint a dystopian picture: super-intelligent, autonomous machines that eventually build an AI-ruled society in which humans become their compliant subjects. Many of these scenarios also involve the machines developing sentience without human input. Beyond that, questions about sentient AI center on control, safety, and communication.

Is AI sentient?

Current applications of AI, like language models (e.g., GPT and LaMDA), are not sentient. Language models can seem sentient because of their conversational style, but the catch is that they’re designed that way. They use natural language processing and natural language generation to replicate human speech, which can look like a form of sentient cognition, but it isn’t. They can neither have nor experience emotions.

What if AI becomes sentient? Will it ever?

AI has progressed rapidly in the last year, so it makes sense to think that sentient AI is right on the horizon. However, most experts agree that we are nowhere near that point, and it is unclear whether it will ever happen.

For one, scientists and researchers would first have to define consciousness, which has been a subject of philosophical debate for centuries. They would then need to translate that definition into exact algorithms to program into AI systems. Human emotions, perceptions, and so on would have to be understood deeply enough to make it possible to give that knowledge to a computer system.

Nir Eisikovits, Professor of Philosophy and Director of the Applied Ethics Center at UMass Boston, said people’s worries about sentient AI are groundless: “ChatGPT and similar technologies are sophisticated sentence completion applications – nothing more, nothing less. Their uncanny responses are a function of how predictable humans are if one has enough data about the ways in which we communicate.” Eisikovits thinks people believe sentient AI is closer than it is because humans tend to anthropomorphize, giving human qualities to things that don’t have them, like naming a car or assigning pronouns to objects.

Enzo Pasquale Scilingo, a bioengineer at the Research Center E. Piaggio at the University of Pisa in Italy, agrees: “We attribute characteristics to machines that they do not and cannot have.” He and his colleagues work with a robot, Abel, that emulates facial expressions. He says, “All these machines, Abel in this case, are designed to appear human, but I feel I can be peremptory in answering, ‘No, absolutely not. As intelligent as they are, they cannot feel emotions. They are programmed to be believable.’” “If a machine claims to be afraid, and I believe it, that’s my problem!” he adds.

Sentient AI Is Part of the Not-So-Near Future.

It might help to grasp how far off sentient AI is by understanding where AI stands today. Of the four types of AI, all of today’s systems belong to the first two: reactive machines and limited memory AI. The next type is theory of mind AI, a significant advancement in which machines can understand human emotions and base their social interactions on that understanding.

There are also three stages of AI, each defined by how closely it replicates human abilities. Despite how long the field has been around, AI is currently in stage one, narrow AI. The next stage is artificial general intelligence (AGI): systems with human-like intelligence that can think abstractly, reason, and adapt to new situations. There is debate over whether language models are AGI, but some say that any signs of abstract thinking or reasoning are learned from their training data.

No existing system has reached theory of mind AI or fully surpassed narrow AI, and there are many lines to cross before one does. Any impression that AI is sentient can be chalked up to algorithms doing what they’re programmed to do.

Over to You

That being said, it’s always important to stay on top of trends, especially in technologies with rapid short-term growth. To stay ahead of the curve, check out this helpful learning path with everything you need to know about AI, from AI ethics to cool jobs created around AI (and be the first to know when AI does become sentient).

BigThink