Meta’s AI Chatbot Reawakens Concerns About Data Tracking

Personalization generally comes at the cost of privacy.

Over the past week, the specter of Meta’s potentially intrusive data tracking has once again raised its head, this time due to the launch of its new, personalized AI chat app, as well as recent testimony presented by former Meta employee Sarah Wynn-Williams.

In the case of Wynn-Williams, who’s written a tell-all book about her time working at Meta, her recent testimony before the U.S. Senate has raised eyebrows. Among other points, Wynn-Williams noted that Meta can identify when users are feeling worthless or helpless, which it can then use as a cue for advertisers.

As reported by the Business and Human Rights Resource Center:

“[Wynn-Williams] said the company was letting advertisers know when the teens were depressed so they could be served an ad at the best time. As an example, she suggested that if a teen girl deleted a selfie, advertisers might see that as a good time to sell her a beauty product as she may not be feeling great about her appearance. They also targeted teens with ads for weight loss when young girls had concerns around body confidence.”

Which sounds horrendous: that Meta would knowingly target users, and teens no less, with promotions at especially vulnerable times.

In the case of Meta’s new AI chatbot, concerns have been raised as to the level at which it tracks user information, in order to personalize its responses.

Meta’s new AI chatbot uses your established history, based on your Facebook and Instagram profiles, to customize your chat experience, and it also tracks every interaction that you have with the bot to further refine and improve its responses.

Which, according to The Washington Post, “pushes the limits on privacy in ways that go much further than rivals ChatGPT, from OpenAI, or Gemini, from Google.”

Both are significant concerns, though the idea that Meta knows a heap about you and your preferences is nothing new. Experts and analysts have been warning about this for years, but with Meta locking down its data, following the Cambridge Analytica scandal, it’s faded as an issue.

Add to this the fact that most people clearly prefer convenience over privacy, so long as they can largely ignore that they’re being tracked. As a result, Meta has generally been able to avoid ongoing scrutiny, essentially by not talking about its tracking and predictive capacity.

But there are plenty of examples that underline just how powerful Meta’s trove of user data can be.

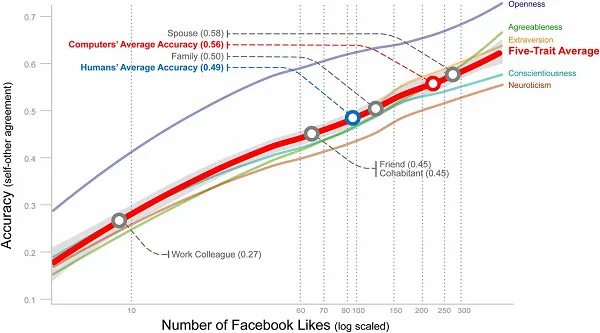

Back in 2015, for example, researchers from the University of Cambridge and Stanford University released a report which looked at how people’s Facebook activity could be used as an indicative measure of their psychological profile. The study found that a person’s Facebook likes, mapped against their answers from a psychological study, could determine that person’s psychological make-up more accurately than their friends, their family, and even their partners could.

Facebook’s true strength, in this sense, is scale. For example, the information that you enter into your Facebook profile, in isolation, doesn’t mean a heap. You might like cat videos, Coca-Cola, maybe you visit Pages about certain bands, brands, etc. By themselves, these actions might not reveal that much, but on a broader scale, each of these elements can be indicative. It could be, for example, that people who like this specific combination of things have an 80% chance of being a smoker, or a criminal, or a racist, whether they specifically indicate such or not.
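To make that “combination of likes” idea concrete, here’s a minimal sketch in Python. Everything in it is an assumption for illustration: the records are made-up toy data, “Band A” and the `trait_rate` helper are hypothetical, and this is not how Meta’s systems or the 2015 study actually work. It simply shows how a specific combination of likes can line up with a trait far more strongly than any single like does.

```python
# Entirely made-up toy records: (set of liked Pages, whether the user has some trait).
# Illustrative only; not drawn from any real dataset.
toy_users = [
    ({"cat videos", "Coca-Cola", "Band A"}, True),
    ({"cat videos", "Coca-Cola", "Band A"}, True),
    ({"cat videos", "Coca-Cola", "Band A"}, True),
    ({"cat videos", "Coca-Cola", "Band A"}, True),
    ({"cat videos", "Coca-Cola", "Band A"}, False),
    ({"cat videos"}, False),
    ({"cat videos"}, False),
    ({"cat videos"}, True),
    ({"Coca-Cola", "Band A"}, False),
    ({"Coca-Cola"}, False),
    ({"Band A"}, False),
]


def trait_rate(users, required_likes):
    """Share of users who hold ALL of the required likes and also have the trait.

    Returns None when no user matches the combination.
    """
    matches = [has_trait for likes, has_trait in users if required_likes <= likes]
    if not matches:
        return None
    return sum(matches) / len(matches)


combo = {"cat videos", "Coca-Cola", "Band A"}
print("Full combination:", trait_rate(toy_users, combo))
print("Coca-Cola alone:", trait_rate(toy_users, {"Coca-Cola"}))
print("Cat videos alone:", trait_rate(toy_users, {"cat videos"}))
```

In this toy set, the full combination points to the trait much more reliably than any one like on its own. With billions of real profiles to compare against, the same kind of counting becomes far more powerful.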

Some of these signals are more overt, others require more insights. But basically, your Facebook activity does show who you are, whether you meant to share that or not. We’re just not confronted with it, outside of ad placements, and with personal posting to Facebook declining in recent times, Meta’s also lost some of its data points, so you’d assume that its predictions are likely not as accurate as they once were.

But with Meta AI now hosting increasingly personal chats, on a broad range of topics, Meta has a new stream of connection into our minds, which will showcase, once again, just how much Meta knows about you, and what your personal preferences and leanings may be.

Which it does indeed use for ads.

Meta does note in its AI documentation that “details that contain inappropriate information or are unsafe in nature” won't be saved, while you can also delete the details that Meta AI saves about you at any time.

So you do have some options on this front. But if you needed a reminder, Meta is tracking a heap of personal information, and it has unmatched scale to crosscheck that data against, which gives it a huge amount of embedded understanding about user preferences, interests, leanings, etc.

All of these could be used for ad targeting, content promotion, influence, etc.

And yes, that is a concern, which is worth exploring. But again, over time, users have been given variable controls over their data, including the capacity to limit the information that Facebook tracks, their privacy settings, etc. Despite all of these options, research shows that most people simply don’t restrict such.

Convenience trumps privacy, and Meta will be hoping the same rings true for its AI chatbot as well. That’s also why its Advantage+ AI-powered ads are producing results, and as its AI tools get smarter, and increase Meta’s capacity to analyze data at scale, Meta’s going to get even better at knowing everything about you, as revealed by your Facebook and Instagram presence.

And now your AI chats as well. Which will indeed mean a more personalized experience. But the trade-off here is that Meta will also use that understanding in ways you may not agree with.

MikeTyes
