Sam Altman is destroying trust in OpenAI with Worldcoin, proof-of-personhood crypto project

We may be entering the age of AI, but it seems that who designs it may still matter very much.

Disclaimer: Opinions expressed below belong solely to the author.

Silicon Valley leaders aren’t exactly among the world’s most trusted people, even if billions are using their products and services; but I have to say that nobody has ever been more intent on ruining his reputation than Sam Altman, the CEO of OpenAI, the creator of ChatGPT.

Typically, the hate and distrust come only after you’ve achieved billionaire status, heading a major tech company worth hundreds of billions of dollars. Bill Gates, Elon Musk, Mark Zuckerberg, Jeff Bezos and even the late Steve Jobs had millions of critics.

Sam, however, has decided not to wait, choosing to become the Bay Area’s latest bad guy before OpenAI has even started thinking about an IPO (which it currently has no plans for).

Sam Altman getting his iris scanned in Warsaw, May 24, 2023 / Image Credit: Twitter @sama

Probably emboldened by the success of ChatGPT and the surge in interest in OpenAI, he took his other pet project out of the closet, pulling Worldcoin out of beta in July of 2023, in the hope that it too could ride the same wave of popularity.

But what is Worldcoin exactly?

It’s a bizarre and rather unsettling blend of crypto, blockchain and personal recognition, conducted using quite dystopian-looking devices called Orbs, which the company launched in dozens of locations around the world to scan the irises of all willing participants.

Their purpose is to generate an encrypted, anonymised proof of personhood in the form of a unique hash of your iris. That hash is then verifiable using the Worldcoin blockchain: the World ID app on your phone generates a zero-knowledge proof, allowing you to confirm you’re a real human being without revealing who you actually are.

Worldcoin Orb on display in Lisbon, Portugal / Image Credit: Worldcoin

In theory, the protocol and the app are supposed to act as an anonymous, digital passport of sorts, giving you access to services on the internet (assuming they opt in to use it) by proving that you are, indeed, a unique human being and not a sophisticated AI bot (goodbye, annoying CAPTCHA!).

In other words, Worldcoin is, in no small part, sold as a solution to a problem companies like OpenAI have created in the first place (though it could be acceptable if its net contribution is positive and offers protection against a flood of AI-generated spam).

The biggest issue is that little of what Worldcoin has done inspires confidence or trust, which should be the cornerstone of what such a company does.

Not only is the concept rather suspect — why would you willingly allow a relatively unknown company to physically scan your eye on location when you have Face ID available in your phone today? — but its execution befits a Bond villain.

The company launched in 2019, originally just as a crypto project whose goal was to create a parallel digital economy, run on a cryptocurrency that all humans would be entitled to receive a portion of. It later began adding personal identification features, which led to the creation of the Orb as the gateway through which users prove they are real humans and, ultimately, access the Worldcoin token.

The company boasted of signing up approximately two million users during beta, a figure which has now reportedly jumped to 16 million following the launch six weeks ago. This may sound like quite a lot until you realise how those sign-ups were acquired.

During beta (and in some countries after it as well), the company operated around 30 Orbs globally, not only in the US and some locations in the EU, but also in India, Indonesia and places up and down Africa like Benin, Ghana, Kenya, Sudan, Uganda or Zimbabwe (where banks cannot even process crypto transactions), with its local representatives wooing people with promises of US$20 worth of free Worldcoin crypto, as well as merchandise and gadgets, and unsubstantiated promises of high returns in the future.

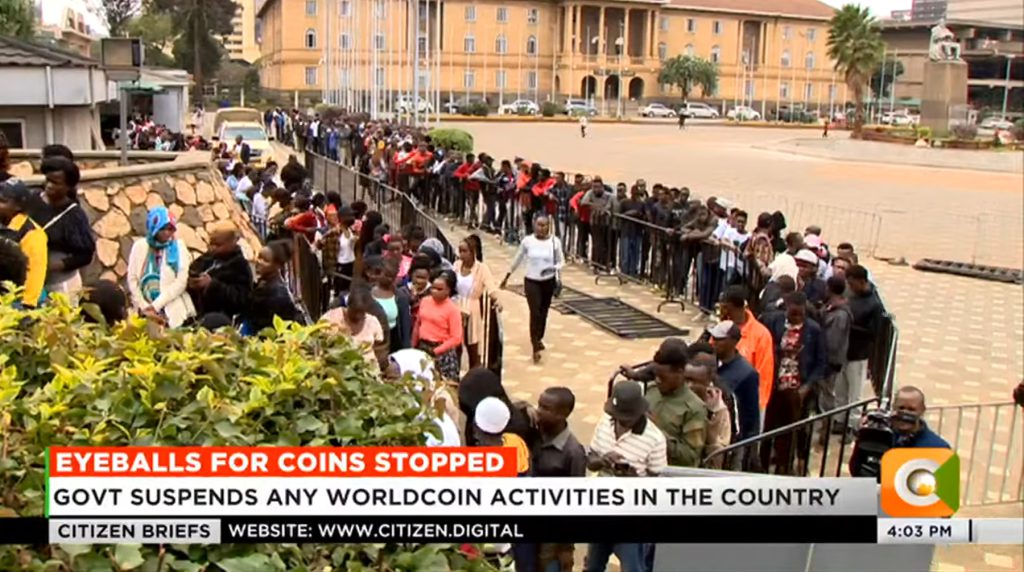

Unsurprisingly, the promises drew long queues of mostly impoverished locals, hoping to benefit from the unexpected windfall in exchange for an iris scan by a company with no established track record and no existing use case.

Queues to Worldcoin venues in Kenya / Image Credit: Citizen TV Kenya via YouTube

Contrary to the company’s promises, its representatives (who were effectively just subcontractors and not actual employees) often collected names, phone numbers or email addresses of participants. So much for “anonymity”.

I might not remember everything in the world, but I do believe this is the first time any significant IT service has been launched with such emphasis on building its user base through decidedly underhand tactics, targeting some of the poorest people in the third world, who are still suffering from post-pandemic fallout, instead of hipster influencers in Palo Alto.

Why is that?

Image Credit: Citizen TV Kenya, YouTube

Perhaps it’s because few people in the developed world would eagerly allow strangers to scan their eyes to access an app which doesn’t have a real function yet, in exchange for a few bucks in a crypto that was only expected to launch at some barely defined point in the future.

It’s not a stretch to say that those of us living in wealthier societies already have enormous concerns about privacy, given the extent to which our actions are being tracked on the internet.

Reports of dystopian systems like smart, person-recognising cameras in China, where a digital social credit system is being rolled out, designed to penalise or reward you for your behaviour, certainly don’t help.

Image Credit: Decrypt

Is this the reason Worldcoin decided to target the third world with its shiny Orbs, knowing that the value placed on privacy, and concern about it, are much lower there?

Like those companies buying fake followers on social media to appear popular, Worldcoin appears to have wanted to set off a snowball effect, making bold claims of having onboarded millions of people, in the hope of convincing sceptics in Europe and America to sign up (possibly triggering a rally in the currency attached to the project as well).

If that was the plan, it doesn’t seem to have worked. The world’s mass media have done their job, at least this one time, reporting on the company’s questionable practices. That has triggered a suspension of Worldcoin in Kenya by local regulators and several investigations in Europe, and there is not much excitement in the West or developed Asia either, while the coin itself has lost more than half of its value since launch.

Worldcoin tokens are down from over US$2 at launch to just US$1.09 as of writing of this article on September 11, 2023 / Image Credit: CoinMarketCap

The fish stinks from the head

But the more important question now is this: do we want the person who created this scheme to be in charge of one of the leading developers of AI solutions, which may soon dominate many domains of our lives?

Self-help coaches love to say that “how you do anything is how you do everything” and, while that is perhaps an exaggeration, it feels like it could apply to at least one domain of a person’s activity, such as doing business.

I find it difficult to believe that any person would run one company decidedly differently than another.

And while Altman isn’t, strictly speaking, the CEO of Worldcoin (or its actual developer, Tools for Humanity), he is the chairman of Tools for Humanity and co-founded the project with Alex Blania, who is its current chief executive.

Surely, then, OpenAI’s CEO is one of the driving forces behind it. And yet, for the past few years, the company has been busy skirting local laws, pursuing vulnerable audiences in the third world, and trying to recruit them to scan their irises with highly questionable promises, particularly given World ID’s lack of a real-life use case.

If this is the sort of “growth hacking” he engages in, can we really trust him with our private data? And can we trust him with how the AI his other company develops is going to impact our lives: the veracity of the information it provides, and whether anybody is using it as a propaganda tool to feed us predetermined answers to politically and socially sensitive questions?

After all, even at the current stage, ChatGPT’s responses to certain categories of questions about politics or social affairs are demonstrably biased, leading some to suspect that they could have been altered by human beings to produce a desirable output.

We may be entering the age of AI, but it seems that who designs it may still matter very much.

Featured Image Credit: Tools For Humanity / Bitcoin.com