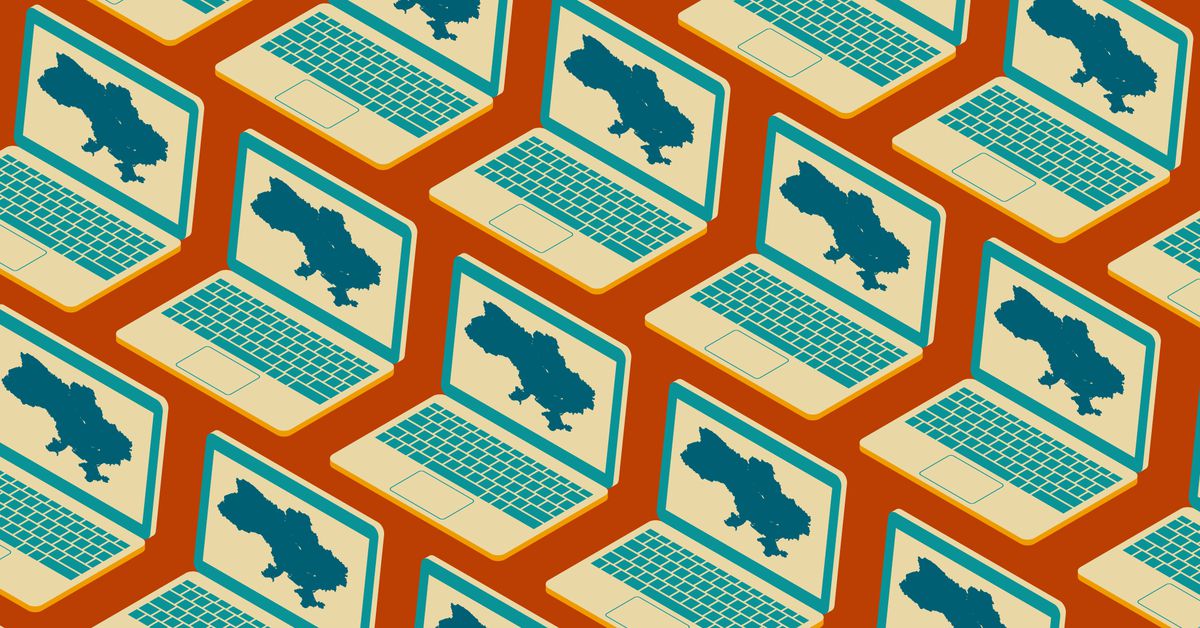

What platforms know — but don’t tell us — about the war on Ukraine

Illustration by Kristen Radtke / The Verge

Brandon Silverman knows more about how stories spread on Facebook than almost anyone. As co-founder and CEO of CrowdTangle, he helped to build systems that instantly understood which stories were going viral: valuable knowledge for publishers at a time when Facebook and other social networks accounted for a huge portion of their traffic. It was so valuable, in fact, that in 2016 Facebook bought the company, saying it would help journalism institutions identify stories of interest and plan their own coverage.

But a funny thing happened along the way to CrowdTangle becoming just another tool in a publisher’s analytics toolkit. Facebook’s value to publishers declined after the company decided to de-emphasize news posts in 2018, making CrowdTangle’s original function less vital. But at the same time, in the wake of the 2016 US presidential election, Facebook itself was of massive interest to researchers, lawmakers, and journalists seeking to understand the platform. CrowdTangle offered a unique, real-time window into what was spreading on the site, and observers have relied upon it ever since.

As Kevin Roose has documented at The New York Times, this has been a persistent source of frustration for Facebook, which found that a tool it had once bought to court publishers was now used primarily as a cudgel with which to beat its owners. Last year, the company broke up the CrowdTangle team in what it has described, unconvincingly, as a “reorganization.” The tool remains active, but appears to be getting little investment from its parent company. In October, Silverman left the company.

Since then, he has been working to further what had become his mission at CrowdTangle outside Facebook’s walls: working with a bipartisan group of senators on legislation that would legally require Facebook owner Meta and other platform companies to publicly disclose the kind of information you can still find on CrowdTangle, along with much more.

I’ve been trying to convince Silverman to talk to me for months. He’s critical of his old employer, but only ever constructively, and he’s careful to note both when Facebook is better than its peers and where the entire industry has failed us.

But with Russia’s invasion of Ukraine — and the many questions about the role of social networks that it has posed — Silverman agreed to an email Q&A.

What I liked about our conversation is how Silverman focuses relentlessly on solutions: the interview below is a kind of handbook for how platforms (or their regulators) could help us understand them both by making new kinds of data available, and by making existing data much easier to parse.

It’s a conversation that shows how much is still possible here, and how much of it is low-hanging fruit.

Our conversation has been edited for clarity and length.

Casey Newton: What role is social media playing in how news about the war is being understood so far?

Brandon Silverman: This is one of the single most prominent examples of a major event in world history unfolding before our eyes on social media. And in a lot of ways, platforms are stepping up to the challenge.

But we’re also seeing exactly how important it is to have platforms working alongside the rest of civil society to respond to moments like this.

For instance, we’re seeing the open-source intelligence community, as well as visual forensics teams at news outlets, do incredible work using social media data to help verify posts from on the ground in Ukraine. We’re also seeing journalists and researchers do their best to uncover misinformation and disinformation on social media. They’re regularly finding examples that have been viewed by millions of people, including repurposed video game footage pretending to be real, coordinated Russian disinformation among TikTok influencers, and fake fact-checks on social media that make their way onto television.

That work has been critical to what we know about the crisis, and it highlights exactly why it’s so important that social media companies make it easier for civil society to be able to see what’s happening on their platforms.

Right now, the status quo isn’t good enough.

So far, the discussion about misinformation in the Russia-Ukraine war mostly centers on anecdotes about videos that got a lot of views. What kind of data would be more helpful here, and do platforms actually have it?

The short answer is absolutely. Platforms have a lot of privacy-safe data they could make available. But maybe more importantly, they could also take data that’s already available and simply make it easier to access.

For instance, one kind of data that is already public but incredibly hard to use is labels. A label is information a platform adds to a piece of content: whether it has been fact-checked, whether its source is a state-controlled media outlet, and so on. Labels are becoming an increasingly popular way for platforms to help shape the flow of information during major crises like this.

However, despite the fact that the labels are public and don’t contain any privacy-sensitive material, right now there are no programmatic ways for researchers or journalists or human rights activists to be able to sort through and study all those labels. So, if a newsroom or a researcher wants to sort through all the fact-checked articles on a particular platform and see what the biggest myths about the war were on any given day, they can’t. If they want to see what narratives all the state-controlled media outlets were pushing, they can’t do that either.

It was something we tried to get added to CrowdTangle, but we couldn’t get it over the finish line. I think it’s a simple piece of data that should be more accessible on any platform that uses labels.

That makes a lot of sense to me. What else could platforms do here?

A big part of all this sort of work isn’t always about making more data available; it’s oftentimes about making existing data more useful.

Can a journalist or a researcher quickly and easily see which accounts have gotten the most engagement around the Ukrainian situation? Can anyone quickly and easily see who the first person to use the phrase “Ghost of Kyiv” was? Can anyone quickly and easily see the history of all the state-controlled media outlets that have been banned and what they were saying about Ukraine in the lead-up to the war?

All of that data is technically publicly available, but it’s incredibly hard to access and organize.

That’s why a big part of effective transparency is simply about making data easy to use. It’s also a big piece of what we were always trying to do at CrowdTangle.

How would you rate the various platforms’ performance on this stuff so far?

Well, not all platforms are equal.

We’ve seen some platforms become really critical public forums for discussing the war, but they are making almost no effort to support civil society in making sense of what’s happening. I’m talking specifically about TikTok and Telegram, and to a lesser extent YouTube as well.

There are researchers who are trying to monitor those forums, but they have to get incredibly creative and scrappy about how to do it. For all the criticism Facebook gets, including a lot of very fair criticism, it does still make CrowdTangle available (at least for the moment). It also has an Ad Library and an incredibly robust network of fact-checkers that it has funded, trained and actively supports.

But TikTok, Telegram and YouTube are all way behind even those efforts. I hope this moment is a wake-up call and that they find ways to do better.

One blind spot we have is that once platforms remove content, researchers can’t study it. How would we benefit from, say, platforms letting academics study a Russian disinformation campaign that got removed from Twitter or Facebook or YouTube or TikTok?

I think unlocking the potential of independent research on removed content accomplishes at least three really important things. First, it helps build a much more robust and powerful community of researchers that can study and understand the phenomenon, and help the entire industry make progress on it. (The alternative is leaving it entirely up to the platforms to figure out by themselves.) Second, it helps hold the platforms accountable for whether they made the right decisions, and some of these decisions are very consequential. Third, it can also act as a deterrent for bad actors.

The single biggest blind spot in policies around removed content is that there are no industry-wide norms or regulatory requirements for archiving or finding ways to share it with select researchers after it’s removed. And in the places where platforms have voluntarily chosen to do some of this, it’s not nearly as comprehensive or robust as it should be.

The reality is that a lot of the removed content is permanently deleted and gone forever.

We know that a lot of content is being removed from platforms around Ukraine. We know that YouTube has removed hundreds of channels and thousands of videos, and that both Twitter and Meta have announced networks of accounts they’ve each removed. And that’s to say nothing of all the content that is being automatically removed for graphic violence, which could represent important evidence of war crimes.

I think not having a better solution to that entire problem is a huge missed opportunity, and one we’ll all ultimately regret not having solved sooner.

I think platforms should release all this data and more. But I can also imagine them looking at Facebook’s experience with CrowdTangle and saying, hmm, it seems like the primary effect of releasing this data is that everyone makes fun of you. Platforms should have thicker skins, of course. But if you were to make this case internally to YouTube or TikTok — what’s in it for them?

Well, first, I’d push back a bit on your first point. They’re going to get made fun of regardless, and in some ways, I actually think that’s healthy. These platforms are enormously powerful, and they should be getting scrutinized. In general, history hasn’t been particularly kind to companies that decide they want to hide data from the outside world.

I also want to point out that there’s a lot of legislation being drafted around the world to simply require more of this — including the Platform Accountability and Transparency Act in the U.S. Senate and Article 31 and the Code of Practice in the Digital Services Act in Europe. Not all of the legislation is going to become law, but some of it will. And so your question is an important one, but it’s also not the only one that matters anymore.

That being said, given everything that happened to our team over the last year, your question is one I’ve thought about a lot.

There were times over the past few years when I tried to argue that transparency is one of the few ways for platforms to build legitimacy and trust with outside stakeholders. Or that transparency is a form of accountability that can act as a useful counterweight to other competing incentives inside big companies, especially around growth. Or that the answer to frustrating analysis isn’t less analysis, it’s more analysis.

I also saw first-hand how hard it is to be objective about these issues from the inside. There were absolutely points where it felt like executives weren’t always being objective about some of the criticism they were getting or, at worst, didn’t have a real understanding of some of the gaps in the systems. That’s not an indictment of anyone in particular; I think it’s just a reality of being human and working on something this hard and emotional, under this much scrutiny. It’s hard not to get defensive. But I think that’s another reason why you need to build out more systems that involve the external community, simply as a check on our own natural biases.

In the end, though, I think the real reason you do it is that you believe it’s a responsibility you have, given what you’ve built.

But what’s the practical effect of sharing data like this? How does it help?

I can connect you to human rights activists in places like Myanmar, Sri Lanka and Ethiopia who would tell you that when you give them tools like CrowdTangle, those tools can be instrumental in helping prevent real-world violence and protect the integrity of elections. Nobel Peace Prize winner Maria Ressa and her team at Rappler have used CrowdTangle for years to try to stem the tide of disinformation and hate speech in the Philippines.

That work doesn’t always generate headlines, but it matters.

So how do we advance the cause of platforms sharing more data with us?

Instead of leaving it up to platforms to do entirely by themselves, with a single set of rules for the entire planet, the next evolution in thinking about managing large platforms safely should be about giving more of civil society, from journalists to researchers to nonprofits to fact-checkers to human rights organizations, the opportunity to help.

And that’s not to deflect responsibility from the platforms for any of this. But it’s also recognizing that they can’t do it alone — and pushing them, or simply legislating, to find ways to collaborate more and open up more.

That means every platform should have tools like CrowdTangle to make it easy to search and monitor important organic content in real time. But CrowdTangle itself should also be way more powerful, and we should hold Meta accountable if it tries to shut it down. It means that every platform should have Ad Libraries — but also that the existing Ad Libraries should be way better.

It means we should be encouraging the industry to both do their own research and share it more regularly, including calling out platforms that aren’t doing any research at all. It means we should create more ways for researchers to study privacy-sensitive data sets within clean rooms so they can do more quantitative social science.

That means that every platform should have fact-checking programs similar to Meta’s. But Meta’s should also be much bigger, way more robust, and include more experts from around civil society. It means we should keep learning from Twitter’s Birdwatch — and if it works, use it as a potential model for the rest of the industry.

We should be building out more solutions that enable civil society to help manage the public spaces we’ve all found ourselves in. We should lean on the idea that public spaces only thrive when everyone feels some sense of ownership and responsibility for them.

We should act like we really believe an open internet is better than a closed one.
