Why You Shouldn't Trust AI Detectors

Many teachers aren’t happy about the AI revolution, and it’s tough to blame them: ChatGPT has proven you can feed AI a prompt, say for a high school essay, and the AI can spit out a result in seconds. Of course, that essay might be riddled with errors, but, hey, the homework’s done. So, when AI checkers advertise themselves as a new line of defense against AI cheating, it makes sense for anyone affected to start using them. The problem is, they’re not perfect, and those imperfections are hurting people.

How AI detectors work

All the AI programs that are popular right now (e.g., ChatGPT) are based on large language models (LLMs). LLMs are trained on huge amounts of text, and pull from that knowledge in order to respond to you. In simple terms, all the AI is doing is predicting what word should follow the last thing it said, based on what it knows from its training. It doesn’t know what “Snow is cold, but fire is hot” means, but it knows that “hot” often follows “fire is.” (Again, super simplistic explanation.)
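To make the "predict the next word" idea concrete, here is a toy sketch in Python. It is nowhere near a real LLM: it only counts which word follows which in a tiny made-up corpus, and the names `train_bigrams` and `predict_next` are invented for this illustration. Still, it shows how "hot" can follow "is" without the program knowing anything about fire.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows each word in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Tiny made-up "training data" (an assumption for this sketch).
corpus = "fire is hot . snow is cold . the stove is hot . the fire is hot ."
model = train_bigrams(corpus)

print(predict_next(model, "is"))  # prints "hot": it followed "is" 3 times, "cold" once
```

Real models weigh far more context than the single previous word, but the basic move is the same: pick a likely continuation based on patterns in the training text.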

AI checkers operate similarly: They’re trained on data sets of text—some written by humans, others written by bots, and some written by both. AI detectors look for telltale signs of text generated by LLMs, such as repetitive language, probability of word choice, and tone. Just as you hope to train an AI to recognize patterns, so too do developers hope AI checkers can recognize whether text was written by a robot or not.
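As a rough illustration of what "probability of word choice" could mean, the sketch below scores a text by how often each word is exactly what a simple word-pair model would have guessed. This is an assumption-laden toy, not how Turnitin, GPTZero, or any real detector is known to work; the function names and the reference text are made up for the example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows each word in the reference text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predictability(text, follows):
    """Fraction of words that match the model's top guess given the previous word."""
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    hits = 0
    for prev, actual in pairs:
        counts = follows.get(prev)
        if counts and counts.most_common(1)[0][0] == actual:
            hits += 1
    return hits / len(pairs)

# Hypothetical reference text standing in for "what the model has seen".
reference = ("ice cream is a popular dessert . ice cream is a frozen treat . "
             "ice cream is sweet .")
model = train_bigrams(reference)

bland = "ice cream is a popular dessert"    # plain, expected phrasing
quirky = "dessert popular a is cream ice"   # same words, unexpected order

print(predictability(bland, model))   # high: most words are the "obvious" next word
print(predictability(quirky, model))  # 0.0: none of the word pairs match the model
```

A score like this measures how predictable the writing is, not who wrote it. A human writing in a plain, neutral style will score as "predictable" too.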

These developers claim their checkers can do this. But I’m not so sure. In fact, I asked ChatGPT whether or not it could recognize whether a given text was written by AI or human. It responded:

As an AI language model, I can generate text that may be difficult to distinguish from text written by a human. However, it is not always possible to definitively determine whether a piece of text was generated by an AI or written by a human, especially if the text has been well-written and edited.

There are some indicators that can suggest whether a text was generated by AI, such as unusual or repetitive patterns, lack of coherence, or unusual vocabulary choices. However, these indicators are not always present and can be overcome with advanced AI technologies.

It’s also worth noting that the quality of AI-generated text is constantly improving, so it may become increasingly difficult to distinguish between human and AI-generated text in the future. Ultimately, the best way to determine whether a piece of text was generated by AI is to have a trained expert in the field analyze it carefully.

Not really a glowing endorsement by the godfather of AI bots. But let’s take a look at how these detectors are holding up in action:

How AI detectors perform in the wild

Turnitin, which I remember inspiring fear for its plagiarism checker, claims its software now detects AI-generated content with 98% confidence. If true, that’s undoubtedly high. BestColleges tested the software, which was able to distinguish between human-generated, AI-generated, and hybrid writing. But even taking that 98% figure at face value, the remaining 2% means a teacher will wrongly accuse roughly one in every 50 students of using AI tools to cheat.
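The one-in-50 figure is simple arithmetic, and it scales quickly. The sketch below assumes, as the paragraph above does, that "98% confidence" means 2% of honest essays get flagged, and it further assumes each essay is flagged independently of the others. Both are simplifications for illustration, not Turnitin's published methodology.

```python
def expected_false_accusations(honest_essays, false_positive_rate):
    """Average number of honest essays wrongly flagged."""
    return honest_essays * false_positive_rate

def chance_of_any_false_accusation(honest_essays, false_positive_rate):
    """Probability that at least one honest essay is flagged (assumes independence)."""
    return 1 - (1 - false_positive_rate) ** honest_essays

RATE = 0.02  # assumed: 100% minus the claimed 98%

print(expected_false_accusations(50, RATE))                    # about 1 essay in 50
print(round(chance_of_any_false_accusation(30, RATE), 2))      # about 0.45 for a class of 30
```

In other words, under these assumptions a teacher running one assignment from a 30-student class through the checker has close to even odds of wrongly flagging someone.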

The Washington Post’s experience with Turnitin, however, is more damning than that. Students helped the Post come up with 16 writing samples composed of human-generated, AI-generated, and hybrid text. In the end, Turnitin got over half of the samples at least partly incorrect. It correctly labeled six, but dropped the ball entirely on three others. Had this been a real class, Turnitin would’ve produced nothing short of a mess.

One of the first AI detectors to go viral, GPTZero, fails the accuracy test with little experimentation. I tested it by writing a paragraph about ice cream in a neutral tone. It told me, “Your text is likely to be written entirely by AI.”

Ice cream is a popular frozen dessert enjoyed by people around the world. It is primarily eaten during warm or hot weather, such as during summer, but it is enjoyed year round. Ice cream comes in many flavors, and is often paired with toppings, such as candies, nuts, fruit, or syrups.

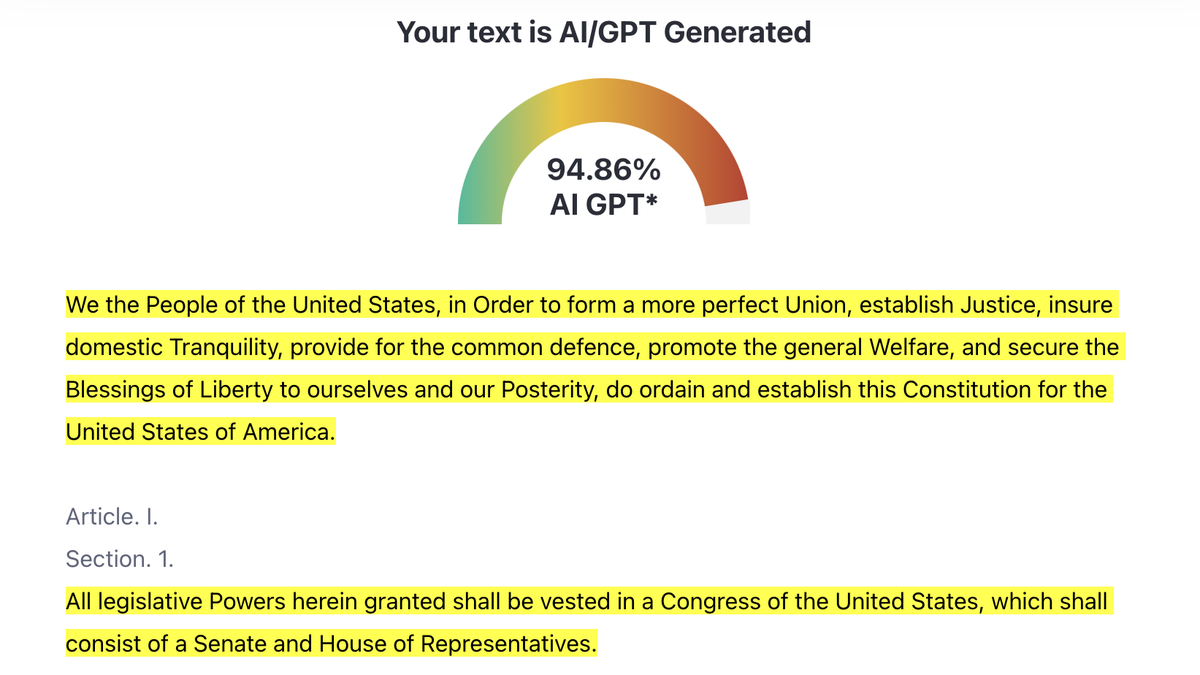

But my ambiguous paragraph is only the beginning. Another detector, confusingly named ZeroGPT, fell on its face when a Redditor decided to analyze the Constitution of the United States. According to ZeroGPT, the Constitution was 92.26% written by AI. Who knew the Philadelphia Convention was so reliant on artificial intelligence when crafting the law of the land? (I guess that explains a few of those amendments, anyway.)

One way to fool the detectors, however, is to give an AI-generated text another pass through AI. QuillBot, for example, rewrites text for you, and is already being used by students to evade checkers. If an AI detector is looking for a given text’s “averageness” in order to determine whether it was written by AI or not, having another AI add more variety to the text will throw a wrench in that system. QuillBot frequently pops up in the comments of TikToks discussing AI detectors in school. The kids will find a way.

AI detectors are hurting innocent students

So far, these examples are all theory. But these checkers aren’t prototypes. They’re here, and they’re being used against real students. I’m sure these detectors have outed many students who did use tools like ChatGPT to cheat, but they’re also wrongly accusing innocent students of doing the same, and it’s taking a toll:

This tweet had a “good” resolution, as the instructor admitted the mistake and took back their accusation. But in other situations, teachers are treating AI checkers as gospel, shutting down discussion with each “AI-generated” result:

I won’t deny we’re facing a new world. Large language models mean that students can plug in an essay prompt and receive a fully written essay in return (with varying levels of quality). But perhaps the fact that students can so easily fool the system points to a system that needs to change, rather than a Band-Aid solution that punishes innocent students as easily as the ones who are guilty.

Turnitin calls moments when it labels human-generated text as written by AI “false positives.” Cute. But the company emphasizes it “does not make a determination of misconduct”; rather, it offers data and leaves the final call up to the educator. That disclaimer seems to have been lost on many educators: In their eyes, if the AI checker says you’re a cheater, you’re a cheater.
