OpenAI Claims New “o1” Model Can Reason Like A Human

OpenAI claims new o1 model excels in complex reasoning, outperforming humans in math, coding, and science tests.

OpenAI claims its o1 model excels in complex reasoning. The model allegedly outperforms humans on math, coding, and science tests. Skepticism is advised until independent verification occurs.

OpenAI has unveiled its latest language model, “o1,” touting advancements in complex reasoning capabilities.

In an announcement, the company claimed its new o1 model can match human performance on math, programming, and scientific knowledge tests.

However, the true impact remains speculative.

Extraordinary Claims

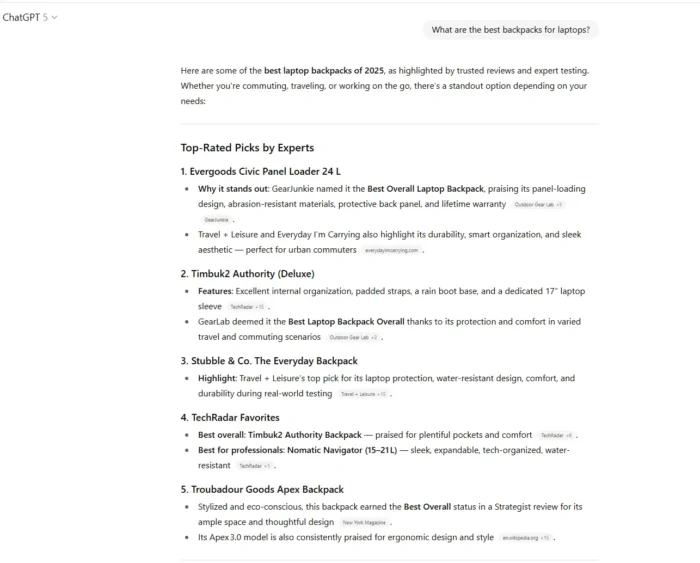

According to OpenAI, o1 can score in the 89th percentile on competitive programming challenges hosted by Codeforces.

The company says its model performs at a level that would place it among the top 500 students in the US on the American Invitational Mathematics Examination (AIME), a qualifying exam for the USA Mathematical Olympiad.

Further, OpenAI states that o1 exceeds the average performance of human experts with PhDs on a benchmark exam combining physics, chemistry, and biology questions.

These are extraordinary claims, and it’s important to remain skeptical until we see open scrutiny and real-world testing.

Reinforcement Learning

The purported breakthrough is o1’s reinforcement learning process, designed to teach the model to break down complex problems using an approach called the “chain of thought.”

OpenAI contends that by working through problems step by step, correcting its mistakes, and adjusting its strategies before giving a final answer, o1 has developed stronger reasoning skills than standard language models.

Implications

It’s unclear how much o1’s claimed reasoning abilities would improve its understanding of queries, or the quality of its responses, in math, coding, science, and other technical topics.

From an SEO perspective, anything that improves content interpretation and the ability to answer queries directly could be impactful. However, it’s wise to be cautious until we see objective third-party testing.

OpenAI must move beyond touting benchmark scores and provide objective, reproducible evidence to support its claims. The planned real-world pilots adding o1’s capabilities to ChatGPT should help show how the model performs in realistic use cases.

Featured Image: JarTee/Shutterstock

SEJ STAFF Matt G. Southern Senior News Writer at Search Engine Journal

Matt G. Southern, Senior News Writer, has been with Search Engine Journal since 2013. With a bachelor’s degree in communications, ...
