The problem with schools turning to surveillance after mass shootings

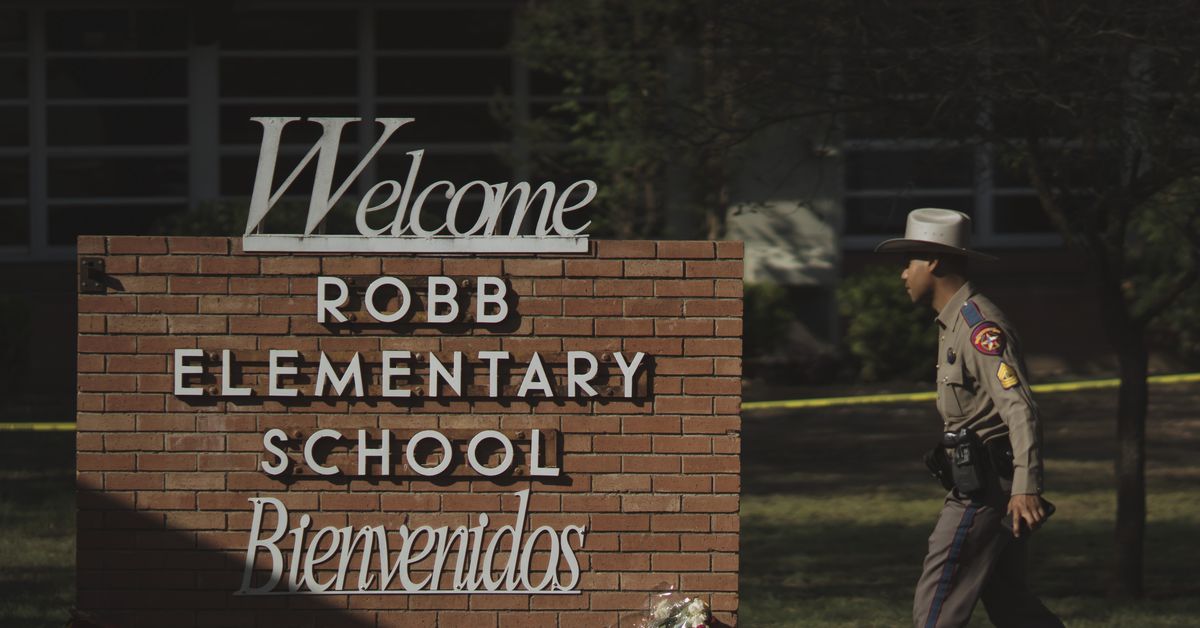

In the wake of school shootings, some schools are turning to technology. | Eric Thayer/Bloomberg via Getty Images

Installing advanced security tech doesn't appear to stop these tragedies, but it can harm students in other ways.

After a shooter killed 21 people, including 19 children, in the massacre at Robb Elementary School in Uvalde, Texas, last week, the United States is yet again confronting the devastating impact of gun violence. While lawmakers have so far failed to pass meaningful reform, schools are searching for ways to prevent a similar tragedy on their own campuses. Recent history and government spending records both indicate that one of the most common responses from education officials is to invest in more surveillance technology.

In recent years, schools have installed everything from facial recognition software to AI-based tech, including programs that purportedly detect signs of brandished weapons and online screening tools that scan students' communications for mentions of potential violence. The startups selling this tech have claimed that these systems can help school officials intervene before a crisis happens or respond more quickly when one is occurring. Pro-gun politicians have also advocated for this kind of technology and have argued that if schools implement enough monitoring, they can prevent mass shootings.

The problem is that there’s very little evidence that surveillance technology effectively stops these kinds of tragedies. Experts even warn that these systems can create a culture of surveillance at schools that harms students. At many schools, networks of cameras running AI-based software would join other forms of surveillance that schools already have, like metal detectors and on-campus police officers.

“In an attempt to stop, let’s say, a shooter like what happened at Uvalde, those schools have actually extended a cost to the students that attend them,” Odis Johnson Jr, the executive director of the Johns Hopkins Center for Safe and Healthy Schools, told Recode. “There are other things we now have to consider when we seek to fortify our schools, which makes them feel like prisons and the students themselves feel like suspects.”

Still, schools and other venues often turn to surveillance technology in the wake of gun violence. In the year following the 2018 mass shooting at Marjory Stoneman Douglas High School, the local Broward County School District installed analytic surveillance software from Avigilon, a company that offers AI-based recognition that tracks students' appearances. After the mass shooting at Oxford High School in Michigan in 2021, the local school district announced it would trial a gun detection system sold by ZeroEyes, one of several startups that make software that scours security camera feeds for images of weapons. Similarly, in the aftermath of a mass shooting on the city's subway system, New York City Mayor Eric Adams said he would look into weapons detection software from a company called Evolv.

Various government agencies have helped schools purchase this kind of technology. Education officials have requested funding from the Department of Justice’s School Violence Prevention Program for a variety of products, including monitoring systems that look for “warning signs of … aggressive behaviors,” according to a 2019 document Recode received through a public records request. And generally speaking, surveillance tech has become even more prominent at schools during the pandemic, since some districts used Covid-19 relief programs to purchase software designed to make sure students were social distancing and wearing masks.

Even before the mass shooting in Uvalde, many schools in Texas had already installed some form of surveillance tech. In 2019, the state passed a law to “harden” schools, and within the US, Texas has the most contracts with digital surveillance companies, according to an analysis of government spending data conducted by the Dallas Morning News. The state’s investment in “security and monitoring” services has grown from $68 per student to $113 per student over the past decade, according to Chelsea Barabas, an MIT researcher studying the security systems deployed at Texas schools. Spending on social work services, however, grew from $25 per student to just $32 per student during the same time period. The gap between these two areas of spending is widest in the state’s most racially diverse school districts.

The Uvalde school district had already acquired various forms of security tech. One of those surveillance tools is a visitor management service sold by a company called Raptor Technologies. Another is a social media monitoring tool called Social Sentinel, which is supposed to “identify any possible threats that might be made against students and or staff within the school district,” according to a document from the 2019-2020 school year.

It’s so far unclear exactly which surveillance tools may have been in use at Robb Elementary School during the mass shooting. JP Guilbault, the CEO of Social Sentinel’s parent company, Navigate360, told Recode that the tool plays “an important role as an early warning system beyond shootings.” He claimed that Social Sentinel can detect “suicidal, homicidal, bullying, and other harmful language that is public and connected to district-, school-, or staff-identified names as well as social media handles and hashtags associated with school-identified pages.”

“We are not currently aware of any specific links connecting the gunman to the Uvalde Consolidated Independent School District or Robb Elementary on any public social media sites,” Guilbault added. The Uvalde gunman did post ominous photos of two rifles on his Instagram account before the shooting, but there’s no evidence that he publicly threatened any of the schools in the district. He privately messaged a girl he did not know that he planned to shoot an elementary school.

Even the more advanced forms of surveillance tech are prone to missing warning signs and raising false alarms. So-called weapon detection technology has accuracy issues and can flag all sorts of items that aren't weapons, like walkie-talkies, laptops, umbrellas, and eyeglass cases. If it's designed to work with security cameras, this tech also wouldn't necessarily pick up any weapons that are hidden or covered. As critical studies by researchers like Joy Buolamwini, Timnit Gebru, and Deborah Raji have demonstrated, racism and sexism can be inadvertently built into facial recognition software. One firm, SN Technologies, offered one New York school district a facial recognition algorithm that was 16 times more likely to misidentify Black women than white men, according to an analysis conducted by the National Institute of Standards and Technology. There's evidence, too, that recognition technology may identify children's faces less accurately than those of adults.

Even when this technology does work as advertised, it’s up to officials to be prepared to act on the information in time to stop any violence from occurring. While it’s still not clear what happened during the recent mass shooting in Uvalde — in part because local law enforcement has shared conflicting accounts about their response — it is clear that having enough time to respond was not the issue. Students called 911 multiple times, and law enforcement waited more than an hour before confronting and killing the gunman.

Meanwhile, in the absence of violence, surveillance makes schools worse for students. Research conducted by Johnson, the Johns Hopkins professor, and Jason Jabbari, a research professor at Washington University in St. Louis, found that a wide range of surveillance tools, including measures like security cameras and dress codes, hurt students’ academic performance at schools that used them. That’s partly because the deployment of surveillance measures — which, again, rarely stops mass shooters — tends to increase the likelihood that school officials or law enforcement at schools will punish or suspend students.

“Given the rarity of school shooting events, digital surveillance is more likely to be used to address minor disciplinary issues,” Barabas, the MIT researcher, explained. “Expanded use of school surveillance is likely to amplify these trends in ways that have a disproportionate impact on students of color, who are frequently disciplined for infractions that are both less serious and more discretionary than white students.”

This is all a reminder that schools often don’t use this technology in the way that it’s marketed. When one school deployed Avigilon’s software, school administrators used it to track when one girl went to the bathroom to eat lunch, supposedly because they wanted to stop bullying. An executive at one facial recognition company told Recode in 2019 that its technology was sometimes used to track the faces of parents who had been barred from contacting their children by a legal ruling or court order. Some schools have even used monitoring software to track and surveil protesters.

These are all consequences of the fact that schools feel they must go to extreme lengths to keep students safe in a country that is teeming with guns. Because these weapons remain a prominent part of everyday life in the US, schools try to adapt. That often means students must adapt to surveillance, including surveillance that shows little evidence of working and may actually hurt them.
