What Is JavaScript SEO? 6 Best Practices to Boost Rankings

JavaScript has enabled highly interactive and dynamic websites. But it also presents a challenge: ensuring your site is crawlable, indexable, and fast.

That’s why JavaScript SEO is essential.

When applied correctly, these strategies can significantly boost organic search performance.

For instance, book retailer Follett saw a remarkable recovery after fixing JavaScript issues.

That’s the impact of effective JavaScript SEO.

In this guide, you’ll:

- Get an introduction to JavaScript SEO
- Understand the challenges with using JavaScript for search
- Learn best practices to optimize your JavaScript site for organic search

What Is JavaScript SEO?

JavaScript SEO is the process of optimizing JavaScript websites. It ensures search engines can crawl, render, and index them.

Aligning JavaScript websites with SEO best practices can boost organic search rankings. All without hurting the user experience.

However, there are still misconceptions about how JavaScript affects SEO.

Common JavaScript Misconceptions

| Misconception | Reality |
| --- | --- |
| Google can handle all JavaScript perfectly. | Since JavaScript is rendered in two phases, delays and errors can occur. These issues can stop Google from crawling, rendering, and indexing content, hurting rankings. |
| JavaScript is only for large sites. | JavaScript is versatile and benefits websites of varying sizes. Smaller sites can use JavaScript in interactive forms, content accordions, and navigation dropdowns. |
| JavaScript SEO is optional. | JavaScript SEO is key for finding and indexing content, especially on JavaScript-heavy sites. |

Benefits of JavaScript SEO

Optimizing JavaScript for SEO can offer several advantages:

- Improved visibility: Crawled and indexed JavaScript content can boost search rankings.
- Enhanced performance: Techniques like code splitting deliver only the important JavaScript code. This speeds up the site and reduces load times.
- Stronger collaboration: JavaScript SEO encourages SEOs, developers, and web teams to work together. This helps improve communication and alignment on your SEO project plan.
- Enhanced user experience: JavaScript boosts UX with smooth transitions and interactivity. It also makes navigation between webpages faster and more dynamic.

How Search Engines Render JavaScript

To understand JavaScript’s SEO impact, let’s explore how search engines process JavaScript pages.

Google has outlined that it processes JavaScript websites in three phases:

- Crawling
- Rendering
- Indexing

Crawling

When Google finds a URL, it checks the robots.txt file and meta robots tags. This is to see if any content is blocked from being crawled or rendered.

If a link is discoverable by Google, the URL is added to a queue for crawling and, subsequently, rendering.

Rendering

For traditional HTML websites, content is immediately available from the server response.

In JavaScript websites, Google must execute JavaScript to render and index the content. Because rendering is resource-intensive, it is deferred until resources are available, at which point a headless Chromium instance renders the page.

Indexing

Once rendered, Googlebot reads the HTML, adds new links to the crawl list, and indexes the content.

How JavaScript Affects SEO

Despite its growing popularity, the question often arises: Is JavaScript bad for SEO?

Let’s examine aspects that can severely impact SEO if you don’t optimize JavaScript for search.

Rendering Delays

For Single Page Applications (SPAs) — like Gmail or Twitter, where content updates without page refreshes — JavaScript controls the content and user experience.

If Googlebot can’t execute the JavaScript, it may see a blank page.

This happens when Google struggles to process the JavaScript. It hurts the page’s visibility and organic performance.

To test how Google will see your SPA site if it can’t execute JavaScript, use the web crawler Screaming Frog. Configure the render settings to “Text Only” and crawl your site.
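If you already have a page's raw server response saved as a string, a rough version of the same check can be scripted. The sketch below is only a heuristic under stated assumptions: the function names and the 200-character threshold are hypothetical, and regex-based tag stripping is a simplification rather than a real HTML parser.

```javascript
// Extract the text a client would see WITHOUT running any JavaScript.
// Simplification: uses regexes instead of a real HTML parser.
function visibleTextFromRawHtml(html) {
  return html
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // drop scripts and their contents
    .replace(/<style\b[^>]*>[\s\S]*?<\/style>/gi, " ")   // drop styles and their contents
    .replace(/<[^>]+>/g, " ")                            // drop remaining tags, keep their text
    .replace(/\s+/g, " ")
    .trim();
}

// Flags responses that contain almost no readable text before JavaScript runs.
// The 200-character default is an arbitrary, hypothetical threshold.
function looksLikeEmptyShell(html, minChars = 200) {
  return visibleTextFromRawHtml(html).length < minChars;
}
```

A typical SPA response that contains little more than an empty root element and a script tag would be flagged, which is a hint that the content depends entirely on rendering.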

Indexing Issues

JavaScript frameworks (like React or Angular, which help build interactive websites) can make it harder for Google to read and index content.

For example, Follett’s online bookstore migrated millions of pages to a JavaScript framework.

Google had trouble processing the JavaScript, causing a sharp decline in organic performance.

Crawl Budget Challenges

Websites have a crawl budget. This refers to the number of pages Googlebot can crawl and index within a given timeframe.

Large JavaScript files consume significant crawling resources. They also limit Google’s ability to explore deeper pages on the site.

Core Web Vitals Concerns

JavaScript can affect how quickly the main content of a web page is loaded. This affects Largest Contentful Paint (LCP), a Core Web Vitals score.

For example, check out this performance timeline:

Section #4 (“Element Render Delay”) shows a JavaScript-induced delay in rendering an element.

This negatively impacts the LCP score.
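To make the arithmetic behind such a timeline concrete, here is a minimal sketch that splits an LCP time into the four commonly described sub-parts, with element render delay as the last one. The input field names are hypothetical. In a browser, the values would typically come from navigation timing, resource timing, and the LCP entry, which this sketch does not collect.

```javascript
// Split an LCP time (all values in milliseconds since navigation start)
// into four sub-parts. Field names are illustrative assumptions.
function lcpBreakdown({ ttfb, resourceRequestStart, resourceResponseEnd, lcpTime }) {
  return {
    timeToFirstByte: ttfb,
    resourceLoadDelay: resourceRequestStart - ttfb,                  // waiting before the LCP resource is requested
    resourceLoadDuration: resourceResponseEnd - resourceRequestStart, // downloading the LCP resource
    elementRenderDelay: lcpTime - resourceResponseEnd,               // resource is ready, but the element is not painted yet
  };
}

// Example with made-up numbers:
const example = lcpBreakdown({
  ttfb: 400,
  resourceRequestStart: 900,
  resourceResponseEnd: 1500,
  lcpTime: 2600,
});
console.log(example);
// { timeToFirstByte: 400, resourceLoadDelay: 500, resourceLoadDuration: 600, elementRenderDelay: 1100 }
```

In this made-up case, the largest share (1,100 ms) is element render delay, which is the kind of gap long-running JavaScript tends to create.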

JavaScript Rendering Options

When rendering webpages, you can choose from three options:

Server-Side Rendering (SSR), Client-Side Rendering (CSR), or Dynamic Rendering.

Let’s break down the key differences between them.

Server-Side Rendering (SSR)

SSR creates the full HTML on the server. It then sends this HTML directly to the client, like a browser or Googlebot.

This approach means the client doesn’t need to render the content.

As a result, the website loads faster and offers a smoother experience.

| Benefits | Drawbacks |
| --- | --- |
| Improved performance | Higher server load |
| Search engine optimization | Longer time to interactivity |
| Enhanced accessibility | Complex implementation |
| Consistent experience | Limited caching |
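To make the SSR idea concrete, here is a minimal, framework-free sketch of a server building the full HTML before responding. The function names, the `product` fields, and the `/app.js` path are hypothetical. Real SSR frameworks handle routing, data fetching, and hydration, which this sketch leaves out.

```javascript
// Escape text so it can be placed safely inside HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete page on the server: title, meta description, heading,
// and body copy are all present in the response itself.
function renderProductPage(product) {
  return `<!doctype html>
<html lang="en">
<head>
  <title>${escapeHtml(product.name)}</title>
  <meta name="description" content="${escapeHtml(product.summary)}">
</head>
<body>
  <h1>${escapeHtml(product.name)}</h1>
  <p>${escapeHtml(product.summary)}</p>
  <script defer src="/app.js"></script>
</body>
</html>`;
}
```

Because the heading and copy are already in the response, a crawler can read them without executing `/app.js`. The script only adds interactivity afterward.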

Client-Side Rendering (CSR)

In CSR, the client—like a user, browser, or Googlebot—receives a blank HTML page. Then, JavaScript runs to generate the fully rendered HTML.

Google can render client-side, JavaScript-driven pages. However, rendering and indexing may be delayed.

| Benefits | Drawbacks |
| --- | --- |
| Reduced server load | Slower initial load times |
| Enhanced interactivity | SEO challenges |
| Improved scalability | Increased complexity |
| Faster page transitions | Performance variability |

Dynamic Rendering

Dynamic rendering, or prerendering, is a hybrid approach.

Tools like Prerender.io detect Googlebot and other crawlers. They then send a fully rendered webpage from a cache.

This way, search engines don’t need to run JavaScript.

At the same time, regular users still get a CSR experience. JavaScript is executed and content is rendered on the client side.

Google says dynamic rendering isn’t cloaking. The content shown to Googlebot just needs to be the same as what users see.

However, it warns that dynamic rendering is a temporary solution. This is due to its complexity and resource needs.

| Benefits | Drawbacks |
| --- | --- |
| Better SEO | Complex setup |
| Crawler compatibility | Risk of cloaking |
| Optimized UX | Tool dependency |
| Scalable for large sites | Performance latency |
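The routing decision at the heart of dynamic rendering can be sketched in a few lines. This is an illustration only, not how Prerender.io or any specific tool implements it: the function names and return labels are hypothetical, the crawler list is deliberately short, and user-agent strings can be spoofed.

```javascript
// A short, non-exhaustive list of crawler user-agent tokens.
const CRAWLER_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

// Returns true when the user-agent string looks like a known crawler.
function isKnownCrawler(userAgent) {
  return typeof userAgent === "string" && CRAWLER_PATTERN.test(userAgent);
}

// Decide which version of the page to serve (labels are hypothetical).
function chooseRenderingPath(userAgent) {
  return isKnownCrawler(userAgent) ? "prerendered-html" : "client-side-app";
}
```

Whichever path is chosen, the content must match what users see. Serving crawlers different content is what turns dynamic rendering into cloaking.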

Which Rendering Approach is Right for You?

The right rendering approach depends on several factors.

Here are key considerations to help you determine the best solution for your website:

| Rendering approach | Best for | When to choose | Requirements |
| --- | --- | --- | --- |
| Server-Side Rendering (SSR) | SEO-critical sites (e.g., ecommerce, blogs); sites relying on organic traffic; faster Core Web Vitals (e.g., LCP) | Need timely indexing and visibility; users expect fast, fully rendered pages upon load | Strong server infrastructure to handle higher load; expertise in SSR frameworks (e.g., Next.js, Nuxt.js) |
| Client-Side Rendering (CSR) | Highly dynamic user interfaces (e.g., dashboards, web apps); content not dependent on organic traffic (e.g., behind login) | SEO is not a top priority; focus on reducing server load and scaling for large audiences | JavaScript optimization to address performance issues; ensuring crawlability with fallback content |
| Dynamic Rendering | JavaScript-heavy sites needing search engine access; large-scale, dynamic content websites | SSR is resource-intensive for the entire site; need to balance bot crawling with user-focused interactivity | Pre-rendering tool like Prerender.io; bot detection and routing configuration; regular audits to avoid cloaking risks |

Knowing these technical solutions is important. But the best approach depends on how your website uses JavaScript.

Where does your site fit?

- Minimal JavaScript: Most content is in the HTML (e.g., WordPress sites). Just make sure search engines can see key text and links.
- Moderate JavaScript: Some elements load dynamically, like live chat, AJAX-based widgets, or interactive product filters. Use fallbacks or dynamic rendering to keep content crawlable.
- Heavy JavaScript: Your site depends on JavaScript to load most content, like SPAs built with React or Vue. To make sure Google can see it, you may need SSR or pre-rendering.
- Fully JavaScript-rendered: Everything from content to navigation relies on JavaScript (e.g., Next.js, Gatsby). You’ll need SSR or Static Site Generation (SSG), optimized hydration, and proper metadata handling to stay SEO-friendly.

The more JavaScript your site relies on, the more important it is to optimize for SEO.

JavaScript SEO Best Practices

So, your site looks great to users—but what about Google?

If search engines can’t properly crawl or render your JavaScript, your rankings could take a hit.

The good news? You can fix it.

Here’s how to make sure your JavaScript-powered site is fully optimized for search.

1. Ensure Crawlability

Avoid blocking JavaScript files in the robots.txt file to ensure Google can crawl them.

In the past, HTML-based websites often blocked JavaScript and CSS.

Now, crawling JavaScript files is crucial for accessing and rendering key content.
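As a rough way to spot accidental blocks, the sketch below checks asset paths against the text of a robots.txt file. It is a simplified reading of the matching rules (`*` wildcards, a trailing `$`, longest matching rule wins, ties go to `Allow`), not a full parser, and all function names are hypothetical. Treat its answers as a hint and confirm with a real testing tool.

```javascript
// Convert a robots.txt path pattern ("*" wildcard, optional trailing "$") to a RegExp.
function patternToRegExp(pattern) {
  const anchored = pattern.endsWith("$");
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .split("*")
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp("^" + escaped + (anchored ? "$" : ""));
}

// Group Allow/Disallow rules by the user-agent token they belong to.
function parseGroups(robotsTxt) {
  const groups = new Map();
  let currentAgents = [];
  let readingAgents = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // strip comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (field === "user-agent") {
      if (!readingAgents) currentAgents = []; // a new group starts here
      readingAgents = true;
      const token = value.toLowerCase();
      currentAgents.push(token);
      if (!groups.has(token)) groups.set(token, []);
    } else {
      readingAgents = false;
      if ((field === "allow" || field === "disallow") && value) {
        for (const token of currentAgents) {
          groups.get(token).push({ allow: field === "allow", pattern: value });
        }
      }
    }
  }
  return groups;
}

// True when the most specific matching rule for this agent is a Disallow.
function isBlocked(robotsTxt, path, agent = "googlebot") {
  const groups = parseGroups(robotsTxt);
  const rules = groups.get(agent) ?? groups.get("*") ?? [];
  let best = null;
  for (const rule of rules) {
    if (!patternToRegExp(rule.pattern).test(path)) continue;
    const longer = best === null || rule.pattern.length > best.pattern.length;
    const tieGoesToAllow =
      best !== null && rule.pattern.length === best.pattern.length && rule.allow;
    if (longer || tieGoesToAllow) best = rule;
  }
  return best !== null && !best.allow;
}
```

Running `isBlocked` over the script and stylesheet URLs a page loads gives a quick list of files worth double-checking.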

2. Choose the Optimal Rendering Method

It’s crucial to choose the right approach based on your site’s needs.

This decision may depend on your resources, user goals, and vision for your website. Remember:

- Server-side rendering: Ensures content is fully rendered and indexable upon page load. This improves visibility and user experience.
- Client-side rendering: Renders content on the client side, offering better interactivity for users.
- Dynamic rendering: Sends crawlers pre-rendered HTML and users a CSR experience.

3. Reduce JavaScript Resources

Reduce JavaScript size by removing unused or unnecessary code. Even unused code must be accessed and processed by Google.

Combine multiple JavaScript files to reduce the resources Googlebot needs to execute. This helps improve efficiency.
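Alongside trimming and bundling, one common way to reduce what must be processed up front is to load non-critical modules only when they are needed, using dynamic `import()`. The helper below is a small sketch of that idea. `lazyModule` is a hypothetical name, and the module path in the usage comment is made up.

```javascript
// Wrap a loader so the underlying module is requested at most once,
// and only when something actually asks for it.
function lazyModule(loader) {
  let pending = null;
  return () => {
    if (pending === null) pending = loader(); // first call triggers the load
    return pending;                           // later calls reuse the same promise
  };
}

// Usage sketch for a browser or bundler setup (path and names are hypothetical):
// const loadReviews = lazyModule(() => import("./reviews-widget.js"));
// button.addEventListener("click", async () => (await loadReviews()).mount());
```

Content that needs to be indexed should not sit behind a click-triggered load like this. The pattern suits optional widgets, not primary page content.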

4. Defer Scripts Blocking Content

You can defer render-blocking JavaScript to speed up page loading.

Use the “defer” attribute to do this, as shown below:

<script defer src="your-script.js"></script>

This tells browsers and search engine renderers to fetch the script without blocking HTML parsing and to run it once the document has been parsed.

5. Manage JavaScript-Generated Content

Managing JavaScript content is key. It must be accessible to search engines and provide a smooth user experience.

Here are some best practices to optimize it for SEO:

Provide Fallback Content

- Use the <noscript> tag to show essential info if JavaScript fails or is disabled
- Ensure critical content like navigation and headings is included in the initial HTML

For example, Yahoo uses a <noscript> tag. It shows static product details for JavaScript-heavy pages.

Optimize JavaScript-Based Pagination

- Use HTML <a> tags for pagination to ensure Googlebot can crawl each page
- Dynamically update URLs with the History API for “Load More” buttons
- Add rel="prev" and rel="next" to indicate paginated page relationships (Google has said it no longer uses these as an indexing signal, but other search engines may)

For instance, Skechers employs a “Load More” button that generates accessible URLs.
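As a generic illustration (not taken from any particular site), the sketch below shows how a “Load More” button might keep the address bar in sync with the content using the History API. It assumes a `page` query parameter and a `#load-more` button, both hypothetical. The DOM wiring is skipped when no `document` is available.

```javascript
// Compute the URL of the next page, assuming pagination uses a "page" query parameter.
function nextPageUrl(currentUrl) {
  const url = new URL(currentUrl);
  const parsed = Number.parseInt(url.searchParams.get("page") ?? "1", 10);
  const page = Number.isInteger(parsed) && parsed > 0 ? parsed : 1;
  url.searchParams.set("page", String(page + 1));
  return url.toString();
}

// Browser-only wiring; skipped when no DOM is available (for example in Node.js).
if (typeof document !== "undefined") {
  const button = document.querySelector("#load-more"); // hypothetical button id
  button?.addEventListener("click", () => {
    const next = nextPageUrl(window.location.href);
    // ... fetch and append the next batch of items here ...
    history.pushState({}, "", next); // the new URL is shareable and crawlable
  });
}
```

Each URL produced this way should also return the right content when requested directly, and should be reachable through a plain `<a href>` link so crawlers that never click can still find it.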

Test and Verify Rendering

- Use Google Search Console’s (GSC) URL Inspection Tool and Screaming Frog to check whether JavaScript content is accessible
- Test JavaScript execution using browser automation tools like Puppeteer to ensure proper rendering

Confirm Dynamic Content Loads Correctly

- Use loading="lazy" for lazy-loaded elements and verify they appear in rendered HTML
- Provide fallback content for dynamically loaded elements to ensure visibility to crawlers

For example, Backlinko lazy loads images within HTML.

6. Create Developer-Friendly Processes

Working closely with developers is key to integrating JavaScript and SEO best practices.

Here’s how you can streamline the process:

- Spot the issues: Use tools like Screaming Frog or Chrome DevTools. They can find JavaScript rendering issues. Document these early.
- Write actionable tickets: Write clear SEO dev tickets with the issue, its SEO impact, and step-by-step instructions to fix it.
- Test and validate fixes: Conduct quality assurance (QA) to ensure fixes are implemented correctly. Share updates and results with your team to maintain alignment.
- Collaborate in real time: Use project management tools like Notion, Jira, or Trello. These help ensure smooth communication between SEOs and developers.

By building developer-friendly processes, you can solve JavaScript SEO issues faster. This also creates a collaborative environment that helps the whole team.

Communicating SEO best practices for JavaScript usage is as crucial as implementing them.

JavaScript SEO Resources + Tools

As you learn how to make your JavaScript SEO-friendly, several tools can assist you in the process.

Educational Resources

Google has provided or contributed to some great resources:

Understand JavaScript SEO Basics

Google’s JavaScript basics documentation explains how it processes JavaScript content.

What you’ll learn:

- How Google processes JavaScript content, including crawling, rendering, and indexing
- Best practices for ensuring JavaScript-based websites are fully optimized for search engines
- Common pitfalls to avoid and strategies to improve SEO performance on JavaScript-driven websites

Who it’s for: Developers and SEO professionals optimizing JavaScript-heavy sites.

Rendering on the Web

The web.dev article Rendering on the Web is a comprehensive resource. It explores various web rendering techniques, including SSR, CSR, and prerendering.

What you’ll learn:

- An in-depth overview of web rendering techniques
- Performance implications of each rendering method, and how they affect user experience and SEO
- Actionable insights for choosing the right rendering strategy based on your goals

Who it’s for: Marketers, developers, and SEOs wanting to boost performance and visibility.

Diagnostic Tools

Screaming Frog & Sitebulb

Crawlers such as Screaming Frog or Sitebulb help identify issues affecting JavaScript.

How? By simulating how search engines process your site.

Key features:

- Crawl JavaScript websites: Detect blocked or inaccessible JavaScript files using robots.txt configurations
- Render simulation: Crawl and visualize how JavaScript-rendered pages appear to search engines
- Debugging capabilities: Identify rendering issues, missing content, or broken resources preventing proper indexing

Example use case:

Use Screaming Frog’s robots.txt settings to emulate Googlebot. The tool can confirm if critical JavaScript files are accessible.

When to use:

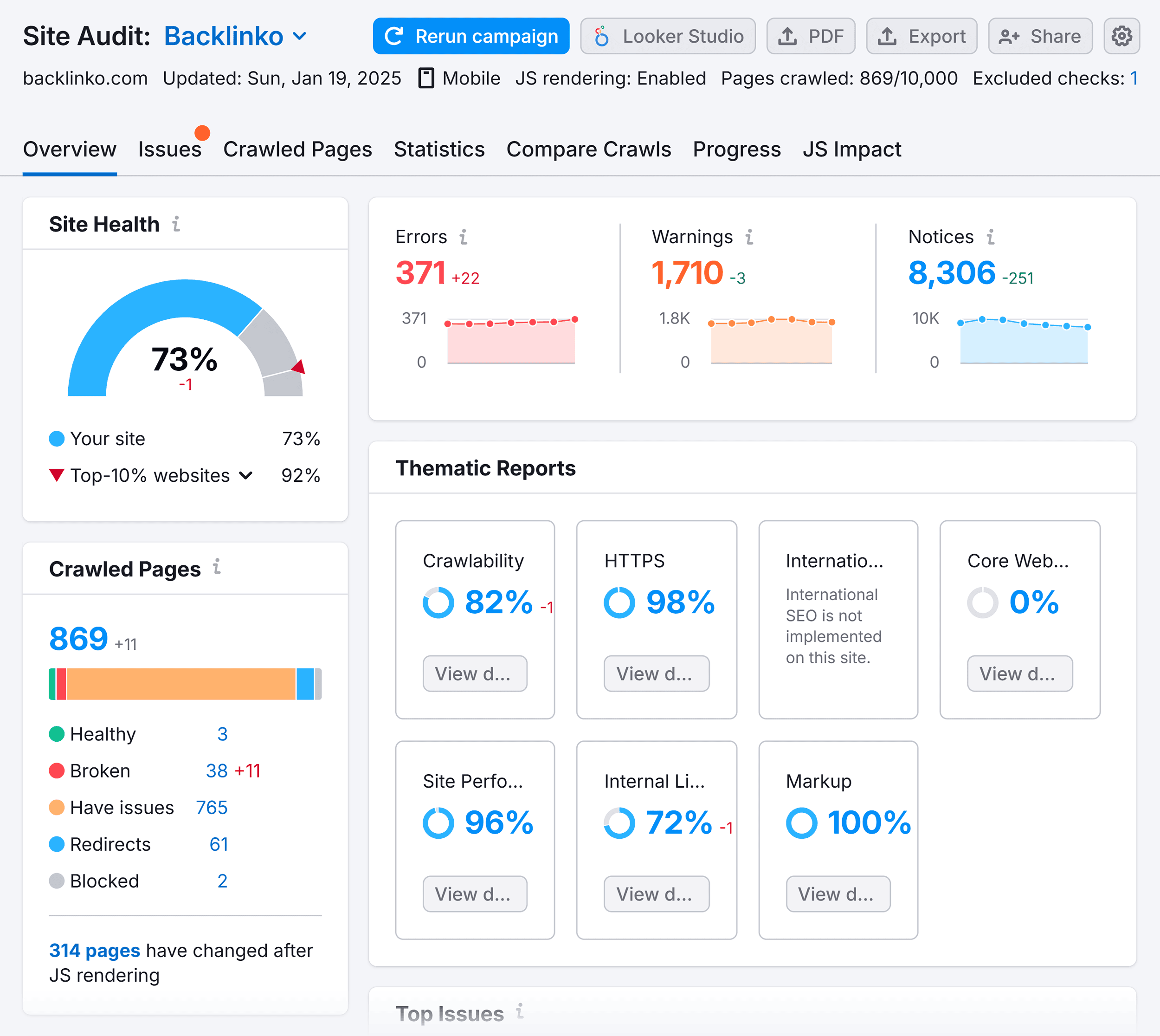

- Debugging JavaScript-related indexing problems
- Testing rendering issues with pre-rendered or dynamic content

Semrush Site Audit

Semrush’s Site Audit is a powerful tool for diagnosing JavaScript SEO issues.

Key features:

- Crawlability checks: Identifies JavaScript files that hinder rendering and indexing
- Rendering insights: Detects JavaScript-related errors impacting search engines’ ability to process content
- Performance metrics: Highlights Core Web Vitals like LCP and Total Blocking Time (TBT)
- Actionable fixes: Provides recommendations to optimize JavaScript code, improve speed, and fix rendering issues

Site Audit also includes a “JS Impact” report, which focuses on uncovering JavaScript-related issues.

It highlights blocked files, rendering errors, and performance bottlenecks. The report provides actionable insights to enhance SEO.

When to use:

- Identifying render-blocking issues caused by JavaScript
- Troubleshooting performance issues after large JavaScript implementations

Google Search Console

Google Search Console’s URL Inspection Tool helps analyze your JavaScript pages. It checks how Google crawls, renders, and indexes them.

Key features:

- Rendering verification: Check if Googlebot successfully executes and renders JavaScript content
- Crawlability insights: Identify blocked resources or missing elements impacting indexing
- Live testing: Use live tests to ensure real-time changes are visible to Google

Example use case:

- Inspecting a JavaScript-rendered page to see if all critical content is in the rendered HTML

When to use:

- Verifying JavaScript rendering and indexing
- Troubleshooting blank or incomplete content in Google’s search results

Performance Optimization

You may need to test your JavaScript website’s performance. These tools break performance down in granular detail:

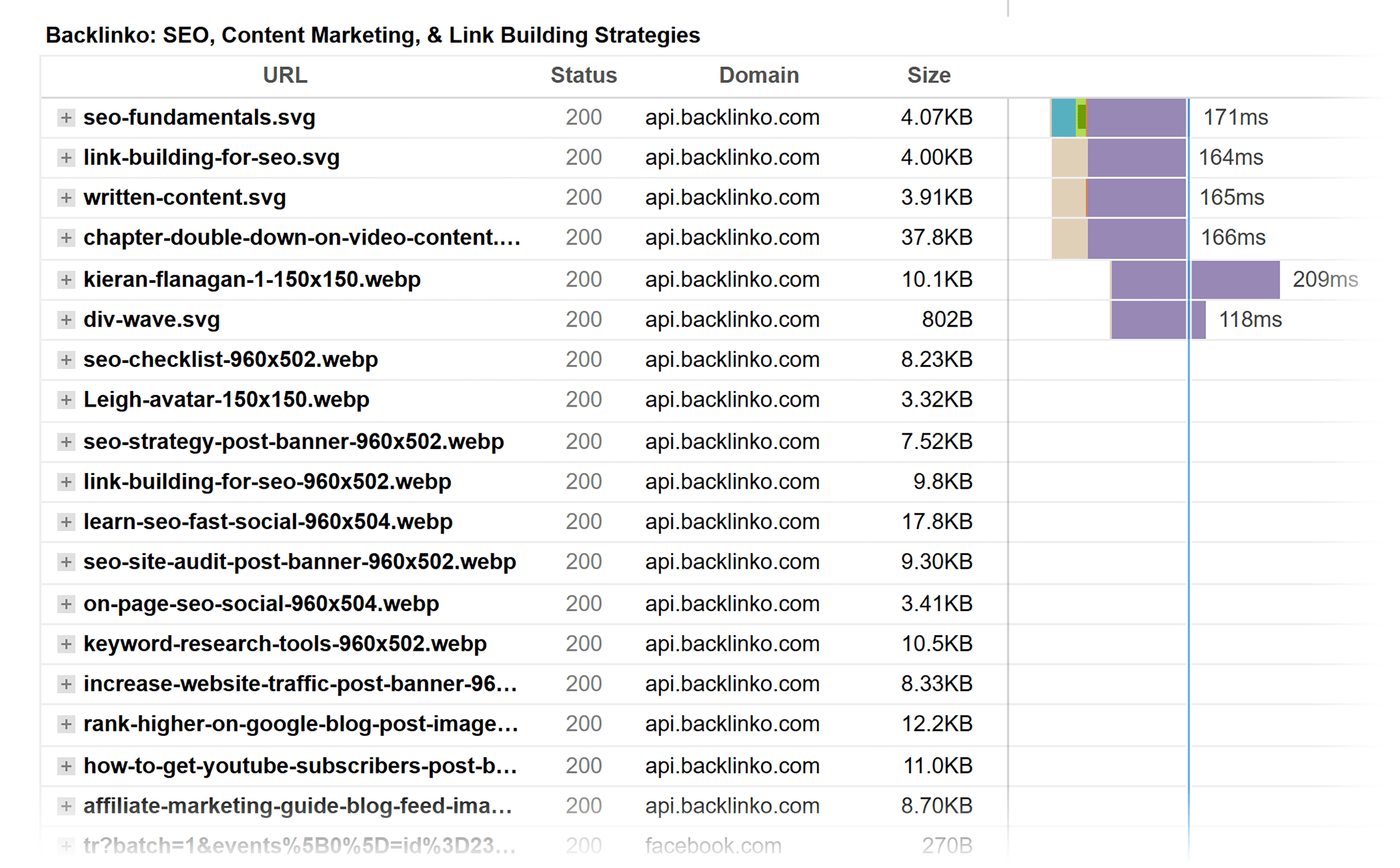

WebPageTest

WebPageTest helps analyze website performance, including how JavaScript affects load times and rendering.

The screenshot below shows high-level performance metrics for a JavaScript site. It includes when the webpage was visible to users.

Key features:

- Provides waterfall charts to visualize the loading sequence of JavaScript and other resources
- Measures critical performance metrics like Time to First Byte (TTFB) and LCP
- Simulates slow networks and mobile devices to identify JavaScript bottlenecks

Use case: Finding scripts or elements that slow down page load and affect Core Web Vitals.

GTmetrix

GTmetrix helps measure and optimize website performance, focusing on JavaScript-related delays and efficiency.

Key features:

- Breaks down page performance with actionable insights for JavaScript optimization
- Provides specific recommendations to minimize and defer non-critical JavaScript
- Visualizes load behavior with video playback and waterfall charts to pinpoint render delays

Use case: Optimizing JavaScript delivery to boost page speed and user experience. This includes minifying, deferring, or splitting code.

Chrome DevTools & Lighthouse

Chrome DevTools and Lighthouse are free Chrome tools. They assess site performance and accessibility. Both are key for JavaScript SEO.

Key features:

- JavaScript execution analysis: Audits JavaScript execution time. It also identifies scripts that delay rendering or impact Core Web Vitals.
- Script optimization: Flags opportunities for code splitting, lazy loading, and removing unused JavaScript
- Network and coverage insights: Identifies render-blocking resources, unused JavaScript, and large file sizes
- Performance audits: Lighthouse measures critical Core Web Vitals to pinpoint areas for improvement
- Render simulation: Emulates devices, throttles network speeds, and disables JavaScript, which helps diagnose rendering issues

For example, the screenshot below was taken with the DevTools Performance panel. After the page loads, the panel records data that helps identify what is causing slow load times.

Use cases:

- Testing JavaScript-heavy pages for performance bottlenecks, rendering issues, and SEO blockers
- Identifying and optimizing scripts, ensuring key content is crawlable and indexable

Specialized Tools

Prerender.io helps JavaScript-heavy websites by serving pre-rendered HTML to bots.

This allows search engines to crawl and index content while users get a dynamic CSR experience.

Key features:

- Pre-rendered content: Serves a cached, fully rendered HTML page to search engine crawlers like Googlebot
- Easy integration: Compatible with frameworks like React, Vue, and Angular. It also integrates with servers like NGINX or Apache.
- Scalable solution: Ideal for large, dynamic sites with thousands of pages
- Bot detection: Identifies search engine bots and serves optimized content
- Performance optimization: Reduces server load by offloading rendering to Prerender.io’s service

Benefits:

- Ensures full crawlability and indexing of JavaScript content
- Improves search engine rankings by eliminating blank or incomplete pages
- Balances SEO performance and user experience for JavaScript-heavy sites

When to use:

- For Single-Page Applications or dynamic JavaScript frameworks
- As an alternative to SSR when resources are limited

Find Your Next JavaScript SEO Opportunity Today

Most JavaScript SEO problems stay hidden—until your rankings drop.

Is your site at risk?

Don’t wait for traffic losses to find out.

Run an audit, fix rendering issues, and make sure search engines see your content.

Want more practical fixes?

Check out our guides on PageSpeed and Core Web Vitals for actionable steps to speed up your JavaScript-powered site.
