Amazon CEO Andy Jassy in shareholder letter says he's committed to cost cutting while investing in AI

Andy Jassy pledged to keep looking for ways to cut costs even as the company doubles down on investing in new growth areas like artificial intelligence.

Amazon CEO Andy Jassy on Thursday published his annual shareholder letter, where he pledged to look for ways to keep costs in check even as the company doubles down on investing in new growth areas like artificial intelligence.

"I think every one of us at Amazon believes that we have a long way to go, in every one of our businesses, before we exhaust how we can make customers' lives better and easier, and there is considerable upside in each of the businesses in which we're investing," Jassy wrote in his third shareholder letter since taking the helm at Amazon from former CEO Jeff Bezos, who stepped down in mid-2021.

Under Jassy, Amazon has morphed into a leaner version of itself, as slowing sales and a challenging economy pushed the company to eschew the relentless growth of the Bezos years. Beginning at the end of 2022 and continuing through 2023, Amazon initiated the largest layoffs in its history, cutting more than 27,000 jobs. Those cuts have continued this year, with Amazon announcing layoffs in its cloud computing, Prime Video and Twitch livestreaming units, among others.

Even amid a period of retrenchment in some areas, Jassy said he's focused on finding new areas of growth within the company so that Amazon remains "resilient" in the long term. He stressed the importance of building "primitive services," which he described as "discrete, foundational building blocks" that can spur new projects and businesses.

Jassy used Amazon Web Services, its cloud computing division, as an example. Before he became Amazon's CEO, Jassy oversaw the creation of AWS, which grew from an internal tool powering its retail operations to become the dominant cloud service and one of Amazon's most profitable businesses.

He said he believes generative artificial intelligence is shaping up to become Amazon's next big primitive service, or "pillar," which is an internal phrase the company often uses to describe its most successful businesses. Amazon's first three pillars are its retail, Prime subscription and cloud computing units.

"While we're building a substantial number of GenAI applications ourselves, the vast majority will ultimately be built by other companies," Jassy said. "However, what we're building in AWS is not just a compelling app or foundation model."

He noted Amazon's cloud computing service will be an important player in the AI boom, saying, "We're optimistic that much of this world-changing AI will be built on top of AWS."

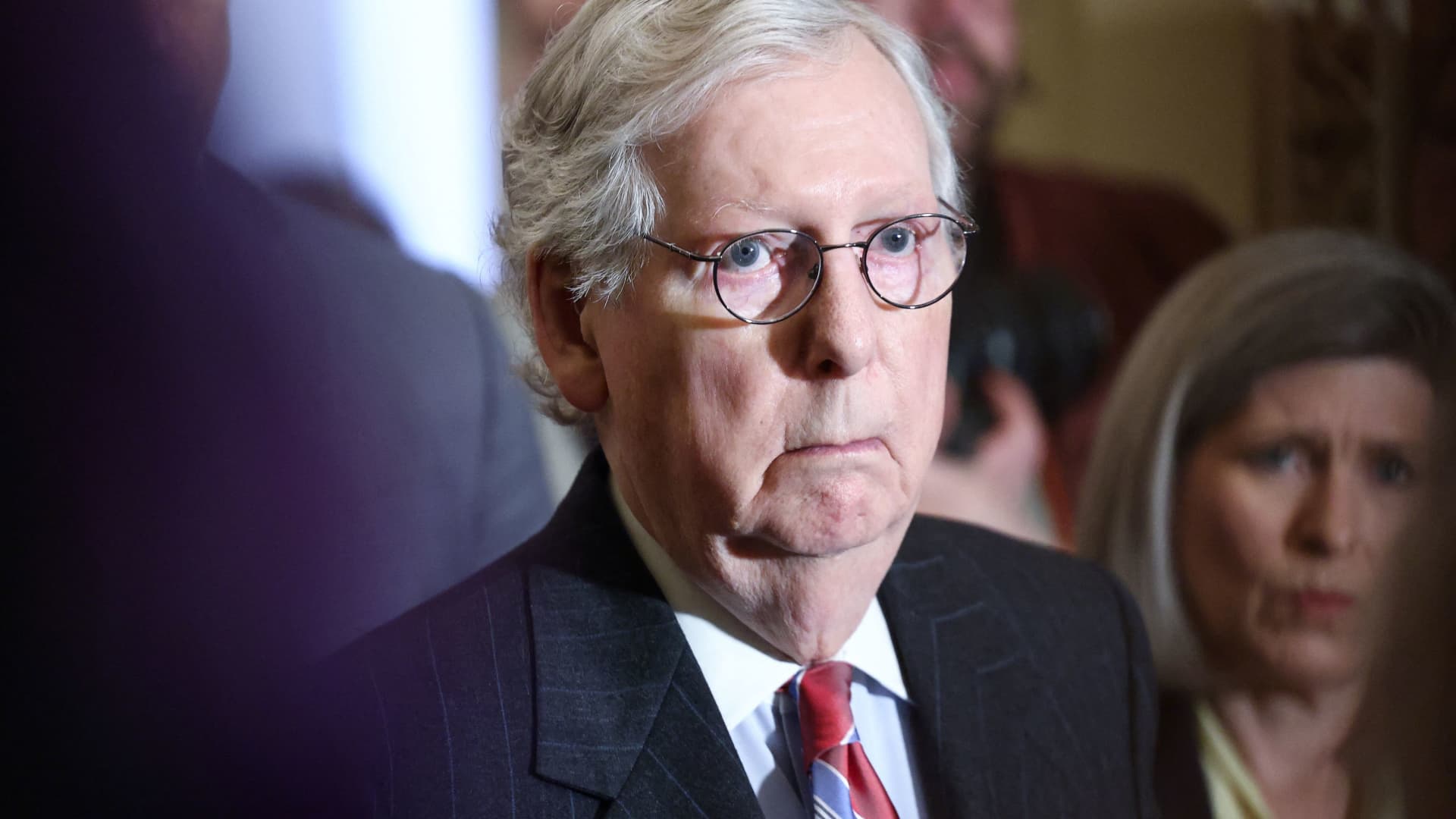

Amazon on Thursday added Andrew Ng, a renowned AI pioneer who previously led Google Brain and was a scientist at Baidu, to its board. Ng will succeed Judy McGrath, who has sat on the board since 2014.

In the past year, Amazon has made a flurry of AI announcements as the field exploded, causing tech companies to pour money into the space. Last month, Amazon added $2.75 billion to its stake in AI startup Anthropic in its largest venture investment yet. Jassy has also pledged to infuse AI into every one of Amazon's businesses.

The company in February launched a tool called Rufus that uses AI to help users search and shop for products. Elsewhere, it has rolled out "Q," an AI chatbot for companies to assist with daily tasks, and Bedrock, a generative AI service for Amazon Web Services customers.

Dear Shareholders:

Last year at this time, I shared my enthusiasm and optimism for Amazon's future. Today, I have even more. The reasons are many, but start with the progress we've made in our financial results and customer experiences, and extend to our continued innovation and the remarkable opportunities in front of us.

In 2023, Amazon's total revenue grew 12% year-over-year ("YoY") from $514B to $575B. By segment, North America revenue increased 12% YoY from $316B to $353B, International revenue grew 11% YoY from $118B to $131B, and AWS revenue increased 13% YoY from $80B to $91B.

Further, Amazon's operating income and Free Cash Flow ("FCF") dramatically improved. Operating income in 2023 improved 201% YoY from $12.2B (an operating margin of 2.4%) to $36.9B (an operating margin of 6.4%). Trailing Twelve Month FCF adjusted for equipment finance leases improved from -$12.8B in 2022 to $35.5B (up $48.3B).

While we've made meaningful progress on our financial measures, what we're most pleased about is the continued customer experience improvements across our businesses.

In our Stores business, customers have enthusiastically responded to our relentless focus on selection, price, and convenience. We continue to have the broadest retail selection, with hundreds of millions of products available, tens of millions added last year alone, and several premium brands starting to list on Amazon (e.g. Coach, Victoria's Secret, Pit Viper, Martha Stewart, Clinique, Lancôme, and Urban Decay).

Being sharp on price is always important, but particularly in an uncertain economy, where customers are careful about how much they're spending. As a result, in Q4 2023, we kicked off the holiday season with Prime Big Deal Days, an exclusive event for Prime members to provide an early start on holiday shopping. This was followed by our extended Black Friday and Cyber Monday holiday shopping event, open to all customers, that became our largest revenue event ever. For all of 2023, customers saved nearly $24B across millions of deals and coupons, almost 70% more than the prior year.

We also continue to improve delivery speeds, breaking multiple company records. In 2023, Amazon delivered at the fastest speeds ever to Prime members, with more than 7 billion items arriving same or next day, including more than 4 billion in the U.S. and more than 2 billion in Europe. In the U.S., this result is the combination of two things. One is the benefit of regionalization, where we re-architected the network to store items closer to customers. The other is the expansion of same-day facilities, where in 2023, we increased the number of items delivered same day or overnight by nearly 70% YoY. As we get items to customers this fast, customers choose Amazon to fulfill their shopping needs more frequently, and we can see the results in various areas including how fast our everyday essentials business is growing (over 20% YoY in Q4 2023).

Our regionalization efforts have also trimmed transportation distances, helping lower our cost to serve. In 2023, for the first time since 2018, we reduced our cost to serve on a per unit basis globally. In the U.S. alone, cost to serve was down by more than $0.45 per unit YoY. Decreasing cost to serve allows us both to invest in speed improvements and afford adding more selection at lower Average Selling Prices ("ASPs"). More selection at lower prices puts us in consideration for more purchases.

As we look toward 2024 (and beyond), we're not done lowering our cost to serve. We've challenged every closely held belief in our fulfillment network, and reevaluated every part of it, and found several areas where we believe we can lower costs even further while also delivering faster for customers. Our inbound fulfillment architecture and resulting inventory placement are areas of focus in 2024, and we have optimism there's more upside for us.

Internationally, we like the trajectory of our established countries, and see meaningful progress in our emerging geographies (e.g. India, Brazil, Australia, Mexico, Middle East, Africa, etc.) as they continue to expand selection and features, and move toward profitability (in Q4 2023, Mexico became our latest international Stores locale to turn profitable). We have high conviction that these new geographies will continue to grow and be profitable in the long run.

Alongside our Stores business, Amazon's Advertising progress remains strong, growing 24% YoY from $38B in 2022 to $47B in 2023, primarily driven by our sponsored ads. We've added Sponsored TV to this offering, a self-service solution for brands to create campaigns that can appear on up to 30+ streaming TV services, including Amazon Freevee and Twitch, and have no minimum spend. Recently, we've expanded our streaming TV advertising by introducing ads into Prime Video shows and movies, where brands can reach over 200 million monthly viewers in our most popular entertainment offerings, across hit movies and shows, award-winning Amazon MGM Originals, and live sports like Thursday Night Football. Streaming TV advertising is growing quickly and off to a strong start.

Shifting to AWS, we started 2023 seeing substantial cost optimization, with most companies trying to save money in an uncertain economy. Much of this optimization was catalyzed by AWS helping customers use the cloud more efficiently and leverage more powerful, price-performant AWS capabilities like Graviton chips (our generalized CPU chips that provide ~40% better price-performance than other leading x86 processors), S3 Intelligent Tiering (a storage class that uses AI to detect objects accessed less frequently and store them in less expensive storage layers), and Savings Plans (which give customers lower prices in exchange for longer commitments). This work diminished short-term revenue, but was best for customers, much appreciated, and should bode well for customers and AWS longer-term. By the end of 2023, we saw cost optimization attenuating, new deals accelerating, customers renewing at larger commitments over longer time periods, and migrations growing again.

The past year was also a significant delivery year for AWS. We announced our next generation of generalized CPU chips (Graviton4), which provides up to 30% better compute performance and 75% more memory bandwidth than its already-leading predecessor (Graviton3). We also announced AWS Trainium2 chips, which will deliver up to four times faster machine learning training for generative AI applications and three times more memory capacity than Trainium1. We continued expanding our AWS infrastructure footprint, now offering 105 Availability Zones within 33 geographic Regions globally, with six new Regions coming (Malaysia, Mexico, New Zealand, the Kingdom of Saudi Arabia, Thailand, and a second German region in Berlin). In Generative AI ("GenAI"), we added dozens of features to Amazon SageMaker to make it easier for developers to build new Foundation Models ("FMs"). We invented and delivered a new service (Amazon Bedrock) that lets companies leverage existing FMs to build GenAI applications. And, we launched the most capable coding assistant around in Amazon Q. Customers are excited about these capabilities, and we're seeing significant traction in our GenAI offerings. (More on how we're approaching GenAI and why we believe we'll be successful later in the letter.)

We're also making progress on many of our newer business investments that have the potential to be important to customers and Amazon long-term. Touching on two of them:

We have increasing conviction that Prime Video can be a large and profitable business on its own. This confidence is buoyed by the continued development of compelling, exclusive content (e.g. Thursday Night Football, Lord of the Rings, Reacher, The Boys, Citadel, Road House, etc.), Prime Video customers' engagement with this content, growth in our marketplace programs (through our third-party Channels program, as well as the broad selection of shows and movies customers rent or buy), and the addition of advertising in Prime Video.

In October, we hit a major milestone in our journey to commercialize Project Kuiper when we launched two end-to-end prototype satellites into space, and successfully validated all key systems and sub-systems—rare in an initial launch like this. Kuiper is our low Earth orbit satellite initiative that aims to provide broadband connectivity to the 400-500 million households who don't have it today (as well as governments and enterprises seeking better connectivity and performance in more remote areas), and is a very large revenue opportunity for Amazon. We're on track to launch our first production satellites in 2024. We've still got a long way to go, but are encouraged by our progress.

Overall, 2023 was a strong year, and I'm grateful to our collective teams who delivered on behalf of customers. These results represent a lot of invention, collaboration, discipline, execution, and reimagination across Amazon. Yet, I think every one of us at Amazon believes that we have a long way to go, in every one of our businesses, before we exhaust how we can make customers' lives better and easier, and there is considerable upside in each of the businesses in which we're investing.

===

In my annual letter over the last three years, I've tried to give shareholders more insight into how we're thinking about the company, the businesses we're pursuing, our future opportunities, and what makes us tick. We operate in a diverse number of market segments, but what ties Amazon together is our joint mission to make customers' lives better and easier every day. This is true across every customer segment we serve (consumers, sellers, brands, developers, enterprises, and creators). At our best, we're not just customer obsessed, but also inventive, thinking several years out, learning like crazy, scrappy, delivering quickly, and operating like the world's biggest start-up.

We spend enormous energy thinking about how to empower builders, inside and outside of our company. We characterize builders as people who like to invent. They like to dissect a customer experience, assess what's wrong with it, and reinvent it. Builders tend not to be satisfied until the customer experience is perfect. This doesn't hinder them from delivering improvements along the way, but it drives them to keep tinkering and iterating continually. While unafraid to invent from scratch, they have no hesitation about using high-quality, scalable, cost-effective components from others. What matters to builders is having the right tools to keep rapidly improving customer experiences.

The best way we know how to do this is by building primitive services. Think of them as discrete, foundational building blocks that builders can weave together in whatever combination they desire. Here's how we described primitives in our 2003 AWS Vision document:

"Primitives are the raw parts or the most foundational-level building blocks for software developers. They're indivisible (if they can be functionally split into two they must) and they do one thing really well. They're meant to be used together rather than as solutions in and of themselves. And, we'll build them for maximum developer flexibility. We won't put a bunch of constraints on primitives to guard against developers hurting themselves. Rather, we'll optimize for developer freedom and innovation."

Of course, this concept of primitives can be applied to more than software development, but they're especially relevant in technology. And, over the last 20 years, primitives have been at the heart of how we've innovated quickly.

One of the many advantages to thinking in primitives is speed. Let me give you two counter examples that illustrate this point. First, we built a successful owned-inventory retail business in the early years at Amazon where we bought all our products from publishers, manufacturers, and distributors, stored them in our warehouses, and shipped them ourselves. Over time, we realized we could add broader selection and lower prices by allowing third-party sellers to list their offerings next to our own on our highly trafficked search and product detail pages. We'd built several core retail services (e.g. payments, search, ordering, browse, item management) that made trying different marketplace concepts simpler than if we didn't have those components. A good set of primitives? Not really.

It turns out that these core components were too jumbled together and not partitioned right. We learned this the hard way when we partnered with companies like Target in our Merchant.com business in the early 2000s. The concept was that target.com would use Amazon's ecommerce components as the backbone of its website, and then customize however they wished. To enable this arrangement, we had to deliver those components as separable capabilities through application programming interfaces ("APIs"). This decoupling was far more difficult than anticipated because we'd built so many dependencies between these services as Amazon grew so quickly the first few years.

This coupling was further highlighted by a heavyweight mechanism we used to operate called "NPI." Any new initiative requiring work from multiple internal teams had to be reviewed by this NPI cabal where each team would communicate how many people-weeks their work would take. This bottleneck constrained what we accomplished, frustrated the heck out of us, and inspired us to eradicate it by refactoring these ecommerce components into true primitive services with well-documented, stable APIs that enabled our builders to use each other's services without any coordination tax.

In the middle of the Target and NPI challenges, we were contemplating building a new set of infrastructure technology services that would allow both Amazon to move more quickly and external developers to build anything they imagined. This set of services became known as AWS, and the above experiences convinced us that we should build a set of primitive services that could be composed together how anybody saw fit. At that time, most technology offerings were very feature-rich, and tried to solve multiple jobs simultaneously. As a result, they often didn't do any one job that well.

Our AWS primitive services were designed from the start to be different. They offered important, highly flexible, but focused functionality. For instance, our first major primitive was Amazon Simple Storage Service ("S3") in March 2006 that aimed to provide highly secure object storage, at very high durability and availability, at Internet scale, and very low cost. In other words, be stellar at object storage. When we launched S3, developers were excited, and a bit mystified. It was a very useful primitive service, but they wondered, why just object storage? When we launched Amazon Elastic Compute Cloud ("EC2") in August 2006 and Amazon SimpleDB in 2007, people realized we were building a set of primitive infrastructure services that would allow them to build anything they could imagine, much faster, more cost-effectively, and without having to manage or lay out capital upfront for the datacenter or hardware. As AWS unveiled these building blocks over time (we now have over 240 at builders' disposal—meaningfully more than any other provider), whole companies sprang up quickly on top of AWS (e.g. Airbnb, Dropbox, Instagram, Pinterest, Stripe, etc.), industries reinvented themselves on AWS (e.g. streaming with Netflix, Disney+, Hulu, Max, Fox, Paramount), and even critical government agencies switched to AWS (e.g. CIA, along with several other U.S. Intelligence agencies). But, one of the lesser-recognized beneficiaries was Amazon's own consumer businesses, which innovated at dramatic speed across retail, advertising, devices (e.g. Alexa and FireTV), Prime Video and Music, Amazon Go, Drones, and many other endeavors by leveraging the speed with which AWS let them build. Primitives, done well, rapidly accelerate builders' ability to innovate.

So, how do you build the right set of primitives?

Pursuing primitives is not a guarantee of success. There are many you could build, and even more ways to combine them. But, a good compass is to pick real customer problems you're trying to solve.

Our logistics primitives are an instructive example. In Amazon's early years, we built core capabilities around warehousing items, and then picking, packing, and shipping them quickly and reliably to customers. As we added third-party sellers to our marketplace, they frequently requested being able to use these same logistics capabilities. Because we'd built this initial set of logistics primitives, we were able to introduce Fulfillment by Amazon ("FBA") in 2006, allowing sellers to use Amazon's Fulfillment Network to store items, and then have us pick, pack, and ship them to customers, with the bonus of these products being available for fast, Prime delivery. This service has saved sellers substantial time and money (typically about 70% less expensive than doing it themselves), and remains one of our most popular services. As more merchants began to operate their own direct-to-consumer ("DTC") websites, many yearned to still use our fulfillment capabilities, while also accessing our payments and identity primitives to drive higher order conversion on their own websites (as Prime members have already shared this payment and identity information with Amazon). A couple years ago, we launched Buy with Prime to address this customer need. Prime members can check out quickly on DTC websites like they do on Amazon, and receive fast Prime shipping speeds on Buy with Prime items—increasing order conversion for merchants by ~25% vs. their default experience.

As our Stores business has grown substantially, and our supply chain has become more complex, we've had to develop a slew of capabilities in order to offer customers unmatched selection, at low prices, and with very fast delivery times. We've become adept at getting products from other countries to the U.S., clearing customs, and then shipping to storage facilities. Because we don't have enough space in our shipping fulfillment centers to store all the inventory needed to maintain our desired in-stock levels, we've built a set of lower-cost, upstream warehouses solely optimized for storage (without sophisticated end-user, pick, pack, and ship functions). Having these two pools of inventory has prompted us to build algorithms predicting when we'll run out of inventory in our shipping fulfillment centers and automatically replenishing from these upstream warehouses. And, in the last few years, our scale and available alternatives have forced us to build our own last mile delivery capability (roughly the size of UPS) to affordably serve the number of consumers and sellers wanting to use Amazon.

We've solved these customer needs by building additional fulfillment primitives that both serve Amazon consumers better and address external sellers' increasingly complex ecommerce activities. For instance, for sellers needing help importing products, we offer a Global Mile service that leverages our expertise here. To ship inventory from the border (or anywhere domestically) to our storage facilities, we enable sellers to use either our first-party Amazon Freight service or third-party freight partners via our Partnered Carrier Program. To store more inventory at lower cost to ensure higher in-stock rates and shorter delivery times, we've opened our upstream Amazon Warehousing and Distribution facilities to sellers (along with automated replenishment to our shipping fulfillment centers when needed). For those wanting to manage their own shipping, we've started allowing customers to use our last mile delivery network to deliver packages to their end-customers in a service called Amazon Shipping. And, for sellers who wish to use our fulfillment network as a central place to store inventory and ship items to customers regardless of where they ordered, we have a Multi-Channel Fulfillment service. These are all primitives that we've exposed to sellers.

Building in primitives meaningfully expands your degrees of freedom. You can keep your primitives to yourself and build compelling features and capabilities on top of them to allow your customers and business to reap the benefits of rapid innovation. You can offer primitives to external customers as paid services (as we have with AWS and our more recent logistics offerings). Or, you can compose these primitives into external, paid applications as we have with FBA, Buy with Prime, or Supply Chain by Amazon (a recently released logistics service that integrates several of our logistics primitives). But, you've got options. You're only constrained by the primitives you've built and your imagination.

Take the new, same-day fulfillment facilities in our Stores business. They're located in the largest metro areas around the U.S. (we currently have 58), house our top-moving 100,000 SKUs (but also cover millions of other SKUs that can be injected from nearby fulfillment centers into these same-day facilities), and streamline the time required to go from picking a customer's order to being ready to ship to as little as 11 minutes. These facilities also constitute our lowest cost to serve in the network. The experience has been so positive for customers that we're planning to double the number of these facilities.

But, how else might we use this capability if we think of it as a core building block? We have a very large and growing grocery business in organic grocery (with Whole Foods Market) and non-perishable goods (e.g. consumables, canned goods, health and beauty products, etc.). We've been working hard on building a mass, physical store offering (Amazon Fresh) that offers a great perishable experience; however, what if we used our same-day facilities to enable customers to easily add milk, eggs, or other perishable items to any Amazon order and get them the same day? It might change how people think of splitting up their weekly grocery shopping, and make perishable shopping as convenient as non-perishable shopping already is.

Or, take a service that some people have questioned, but that's making substantial progress and we think of as a very valuable future primitive capability—our delivery drones (called Prime Air). Drones will eventually allow us to deliver packages to customers in less than an hour. It won't start off being available for all sizes of packages and in all locations, but we believe it'll be pervasive over time. Think about how the experience of ordering perishable items changes with sub-one-hour delivery.

The same is true for Amazon Pharmacy. Need throat lozenges, Advil, an antibiotic, or some other medication? Same-day facilities already deliver many of these items within hours, and that will only get shorter as we launch Prime Air more expansively. Highly flexible building blocks can be composed across businesses and in new combinations that change what's possible for customers.

Being intentional about building primitives requires patience. Releasing the first couple primitive services can sometimes feel random to customers (or the public at large) before we've unveiled how these building blocks come together. I've mentioned AWS and S3 as an example, but our Health offering is another. In the last 10 years, we've tried several Health experiments across various teams—but they were not driven by our primitives approach. This changed in 2022 when we applied our primitives thinking to the enormous global healthcare problem and opportunity. We've now created several important building blocks to help transform the customer health experience: Acute Care (via Amazon Clinic), Primary Care (via One Medical), and a Pharmacy service to buy whatever medication a patient may need. Because of our growing success, Amazon customers are now asking us to help them with all kinds of wellness and nutrition opportunities—which can be partially unlocked with some of our existing grocery building blocks, including Whole Foods Market or Amazon Fresh.

As a builder, it's hard to wait for these building blocks to be built versus just combining a bunch of components together to solve a specific problem. The latter can be faster, but almost always slows you down in the future. We've seen this temptation in our robotics efforts in our fulfillment network. There are dozens of processes we seek to automate to improve safety, productivity, and cost. Some of the biggest opportunities require invention in domains such as storage automation, manipulation, sortation, mobility of large cages across long distances, and automatic identification of items. Many teams would skip right to the complex solution, baking in "just enough" of these disciplines to make a concerted solution work, but which doesn't solve much more, can't easily be evolved as new requirements emerge, and that can't be reused for other initiatives needing many of the same components. However, when you think in primitives, like our Robotics team does, you prioritize the building blocks, picking important initiatives that can benefit from each of these primitives, but which build the tool chest to compose more freely (and quickly) for future and complex needs. Our Robotics team has built primitives in each of the above domains that will be lynchpins in our next set of automation, which includes multi-floor storage, trailer loading and unloading, large pallet mobility, and more flexible sortation across our outbound processes (including in vehicles). The team is also building a set of foundation AI models to better identify products in complex environments, optimize the movement of our growing robotic fleet, and better manage the bottlenecks in our facilities.

Sometimes, people ask us "what's your next pillar? You have Marketplace, Prime, and AWS, what's next?" This, of course, is a thought-provoking question. However, a question people never ask, and one that might be even more interesting, is: what's the next set of primitives you're building that enables breakthrough customer experiences? If you asked me today, I'd lead with Generative AI ("GenAI").

Much of the early public attention has focused on GenAI applications, with the remarkable 2022 launch of ChatGPT. But, to our "primitive" way of thinking, there are three distinct layers in the GenAI stack, each of which is gigantic, and in each of which we're deeply investing.

The bottom layer is for developers and companies wanting to build foundation models ("FMs"). The primary primitives are the compute required to train models and generate inferences (or predictions), and the software that makes it easier to build these models. Starting with compute, the key is the chip inside it. To date, virtually all the leading FMs have been trained on Nvidia chips, and we continue to offer the broadest collection of Nvidia instances of any provider. That said, supply has been scarce and cost remains an issue as customers scale their models and applications. Customers have asked us to push the envelope on price-performance for AI chips, just as we have with Graviton for generalized CPU chips. As a result, we've built custom AI training chips (named Trainium) and inference chips (named Inferentia). In 2023, we announced second versions of our Trainium and Inferentia chips, which are both meaningfully more price-performant than their first versions and other alternatives. This past fall, leading FM-maker, Anthropic, announced it would use Trainium and Inferentia to build, train, and deploy its future FMs. We already have several customers using our AI chips, including Anthropic, Airbnb, Hugging Face, Qualtrics, Ricoh, and Snap.

Customers building their own FM must tackle several challenges in getting a model into production. Getting data organized and fine-tuned, building scalable and efficient training infrastructure, and then deploying models at scale in a low latency, cost-efficient manner is hard. It's why we've built Amazon SageMaker, a managed, end-to-end service that's been a game changer for developers in preparing their data for AI, managing experiments, training models faster (e.g. Perplexity AI trains models 40% faster in SageMaker), lowering inference latency (e.g. Workday has reduced inference latency by 80% with SageMaker), and improving developer productivity (e.g. NatWest reduced its time-to-value for AI from 12-18 months to under seven months using SageMaker).

The middle layer is for customers seeking to leverage an existing FM, customize it with their own data, and leverage a leading cloud provider's security and features to build a GenAI application—all as a managed service. Amazon Bedrock invented this layer and provides customers with the easiest way to build and scale GenAI applications with the broadest selection of first- and third-party FMs, as well as leading ease-of-use capabilities that allow GenAI builders to get higher quality model outputs more quickly. Bedrock is off to a very strong start with tens of thousands of active customers after just a few months. The team continues to iterate rapidly on Bedrock, recently delivering Guardrails (to safeguard what questions applications will answer), Knowledge Bases (to expand models' knowledge base with Retrieval Augmented Generation—or RAG—and real-time queries), Agents (to complete multi-step tasks), and Fine-Tuning (to keep teaching and refining models), all of which improve customers' application quality. We also just added new models from Anthropic (their newly-released Claude 3 is the best performing large language model in the world), Meta (with Llama 2), Mistral, Stability AI, Cohere, and our own Amazon Titan family of FMs. What customers have learned at this early stage of GenAI is that there's meaningful iteration required to build a production GenAI application with the requisite enterprise quality at the cost and latency needed. Customers don't want only one model. They want access to various models and model sizes for different types of applications. Customers want a service that makes this experimenting and iterating simple, and this is what Bedrock does, which is why customers are so excited about it. 
Customers using Bedrock already include ADP, Amdocs, Bridgewater Associates, Broadridge, Clariant, Dana-Farber Cancer Institute, Delta Air Lines, Druva, Genesys, Genomics England, GoDaddy, Intuit, KT, Lonely Planet, LexisNexis, Netsmart, Perplexity AI, Pfizer, PGA TOUR, Ricoh, Rocket Companies, and Siemens.

The top layer of this stack is the application layer. We're building a substantial number of GenAI applications across every Amazon consumer business. These range from Rufus (our new, AI-powered shopping assistant), to an even more intelligent and capable Alexa, to advertising capabilities (making it simple with natural language prompts to generate, customize, and edit high-quality images, advertising copy, and videos), to customer and seller service productivity apps, to dozens of others. We're also building several apps in AWS, including arguably the most compelling early GenAI use case—a coding companion. We recently launched Amazon Q, an expert on AWS that writes, debugs, tests, and implements code, while also doing transformations (like moving from an old version of Java to a new one), and querying customers' various data repositories (e.g. Intranets, wikis, Salesforce, Amazon S3, ServiceNow, Slack, Atlassian, etc.) to answer questions, summarize data, carry on coherent conversation, and take action. Q is the most capable work assistant available today and evolving fast.

While we're building a substantial number of GenAI applications ourselves, the vast majority will ultimately be built by other companies. However, what we're building in AWS is not just a compelling app or foundation model. These AWS services, at all three layers of the stack, comprise a set of primitives that democratize this next seminal phase of AI, and will empower internal and external builders to transform virtually every customer experience that we know (and invent altogether new ones as well). We're optimistic that much of this world-changing AI will be built on top of AWS.

(By the way, don't underestimate the importance of security in GenAI. Customers' AI models contain some of their most sensitive data. AWS and its partners offer the strongest security capabilities and track record in the world; and as a result, more and more customers want to run their GenAI on AWS.)

===

Recently, I was asked a provocative question—how does Amazon remain resilient? While simple in its wording, it's profound because it gets to the heart of our success to date as well as for the future. The answer lies in our discipline around deeply held principles: 1/ hiring builders who are motivated to continually improve and expand what's possible; 2/ solving real customer challenges, rather than what we think may be interesting technology; 3/ building in primitives so that we can innovate and experiment at the highest rate; 4/ not wasting time trying to fight gravity (spoiler alert: you always lose)—when we discover technology that enables better customer experiences, we embrace it; 5/ accepting and learning from failed experiments—actually becoming more energized to try again, with new knowledge to employ.

Today, we continue to operate in times of unprecedented change that come with unusual opportunities for growth across the areas in which we operate. For instance, while we have a nearly $500B consumer business, about 80% of the worldwide retail market segment still resides in physical stores. Similarly, with a cloud computing business at nearly a $100B revenue run rate, more than 85% of the global IT spend is still on-premises. These businesses will keep shifting online and into the cloud. In Media and Advertising, content will continue to migrate from linear formats to streaming. Globally, hundreds of millions of people who don't have adequate broadband access will gain that connectivity in the next few years. Last but certainly not least, Generative AI may be the largest technology transformation since the cloud (which itself is still in the early stages), and perhaps since the Internet. Unlike the mass modernization of on-premises infrastructure to the cloud, where there's work required to migrate, this GenAI revolution will be built from the start on top of the cloud. The amount of societal and business benefit from the solutions that will be possible will astound us all.

There has never been a time in Amazon's history where we've felt there is so much opportunity to make our customers' lives better and easier. We're incredibly excited about what's possible, focused on inventing the future, and look forward to working together to make it so.

Sincerely,

Andy Jassy

President and Chief Executive Officer

Amazon.com, Inc.

P.S. As we have always done, our original 1997 Shareholder Letter follows. What's written there is as true today as it was in 1997.
