Facebook should be 100% liable for all scams on its platform as S’pore woman loses S$20,000

Why should we pay the price? Facebook is already free to monetise our personal information, they can bombard us with unwanted content and, on top of that, allow organised digital crime to try to rob us, while bearing no...

Disclaimer: Opinions expressed below belong solely to the author.

About a week ago, another woman in Singapore fell victim to a scam that hijacked the identity of Grain, an otherwise legitimate food catering business, through advertisements on Facebook.

After clicking on the ads, hoping to order healthy food delivery, she continued the conversation via Messenger and WhatsApp. The exchange raised no concerns, and during it she was asked to download an app to place her order. This is when her phone was hijacked, providing access to her credit card, which was subsequently drained of S$20,000.

A similar story was reported about a month ago (both happened around the same time, in the last week of July), when another unsuspecting lady was scammed out of S$18,000. We can only guess how many others were robbed blind in a similar manner.

It’s easy to blame careless victims for, ultimately, downloading third-party apps from unknown sources, but surely it’s not too much to expect a multi-billion-dollar corporation, which specialises in algorithmic suppression of speech (and which, at one point, banned people for calling others, or themselves, fat), to be able to deploy that technology in the service of identifying and blocking suspicious advertisements.

Especially since, if you have any experience running ads on Facebook, you know it actually has the tools.

Due negligence

While one group was scamming people looking for healthy meals, another used none other than Singapore’s Prime Minister, Lee Hsien Loong, to peddle investment scams.

This is particularly interesting as similar attempts occurred in the past, and were raised by the PM himself. Apparently, with all the image recognition technology, Facebook is still unable to scan ads for pictures of politicians or celebrities, and flag them for a manual review.

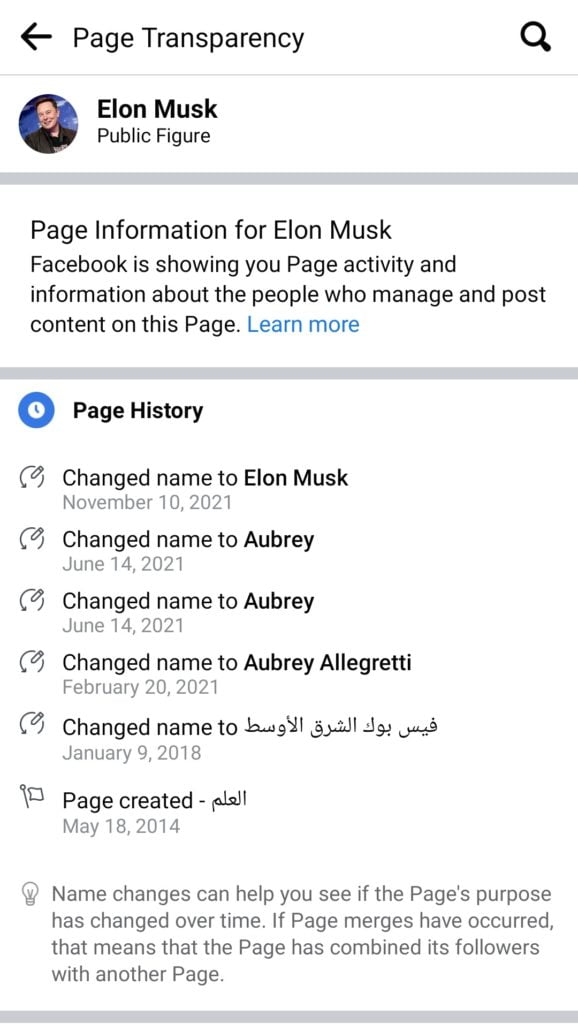

Speaking of celebrities, Elon Musk and Tesla were used very heavily during the Bitcoin craze era, touting cryptocurrency deals on Facebook, with no retribution.

Here’s one of the examples, which includes a blue tick, a sign that a page has been verified, under the misspelled “elonnmusk” handle:

It wasn’t the only one, as “elonmuskofficis” also got its blue tick, without raising any suspicions:

This happened despite the fact that the page has changed its name numerous times over the years. Anybody who has ever run a Facebook page and tried to change its name knows very well how difficult that process can be, as it is supposed to be manual and approved by people at Facebook.

And yet, this happened:

Here’s another example, this time not only using Jeff Bezos, but also pretending to be Yahoo — in the name of the page itself:

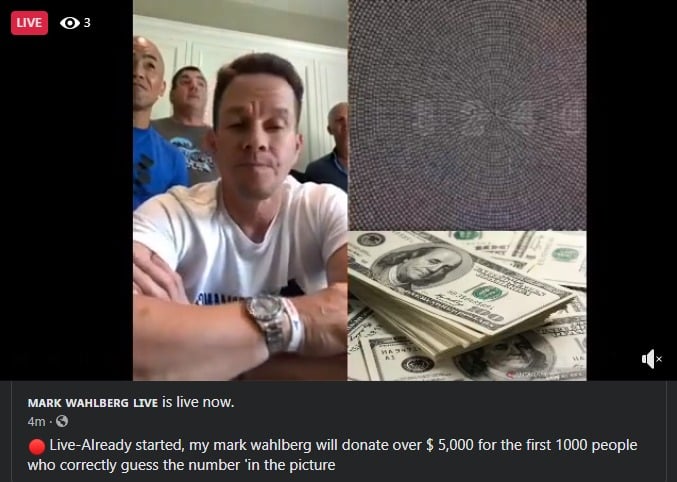

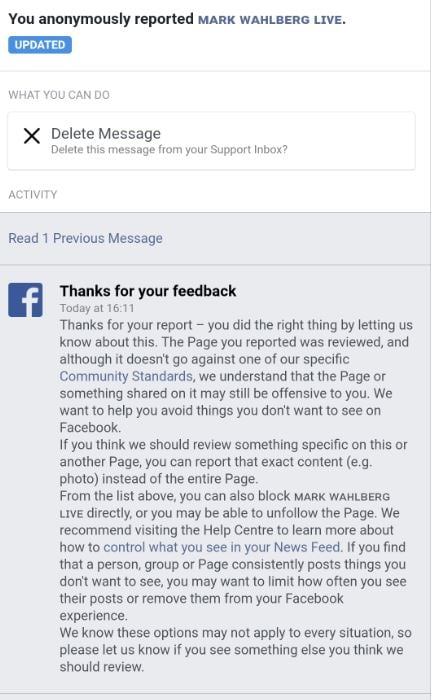

Like most other Facebook users, I have been on the receiving end of these and other scams myself, so I occasionally try to test the response of Facebook moderators. Here’s an example of another scam peddled some months ago, using a video of American actor Mark Wahlberg:

But what would happen if you reported it to Facebook “authorities”, which typically crack down on people for voicing their views on many social and political affairs? Well, the following:

Let’s get this straight — by issuing such a message, Facebook directly acknowledged that it reviewed the page and its content, and established that it does not go against its Community Standards.

At this point, it should be equal to legal complicity with whatever the advertiser is peddling, since a justified report was made by a concerned user of the platform only to be brushed off.

What else can we do to protect ourselves and others?

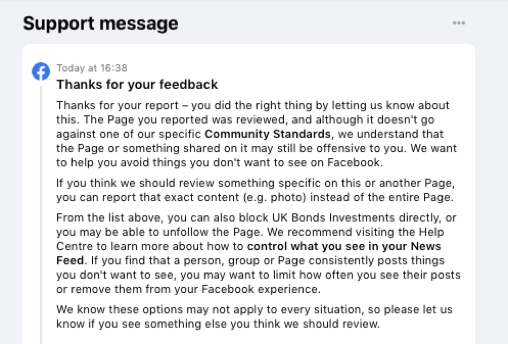

If you think it might be just some odd mistake, an overzealous reviewer clicking too quickly, here’s another case from Britain in 2022:

“An advertiser calling itself ‘UK Bonds Investment’, among other names, was advertising on the social media platform until 17 December 2021 when Which? reported it.

We’re concerned about Facebook’s lack of due diligence because this firm has been on the Financial Conduct Authority (FCA) warning list of unauthorised financial firms since September 2020.

When you click through to the website, the firm refers to itself as ‘UK Income Bonds’. The phone number supplied has also been linked to another firm on the FCA warning list called insta-crypto.pro.”

So not only was it a known scammer; the organisation had already been on an official government warning list for more than a year, and Facebook still approved its ads.

Moreover, when reported by a regular user, it responded with the standard message, that nothing was found wrong with the advertiser:

The ads were only removed after the outlet Which? was able to contact Meta directly. The response it then received was entirely predictable:

“Following further investigation, we have removed the accounts and ads brought to our attention. We continue to dedicate significant resources to tackle the industry-wide issue of scams and while no enforcement is perfect, we continue to invest in new technologies and methods to protect people on our services.”

This is complete and utter rubbish. A bald-faced lie. I have personally reported dozens upon dozens of pages running misleading ads or outright scams, and I struggle to recall a single instance of any action being taken.

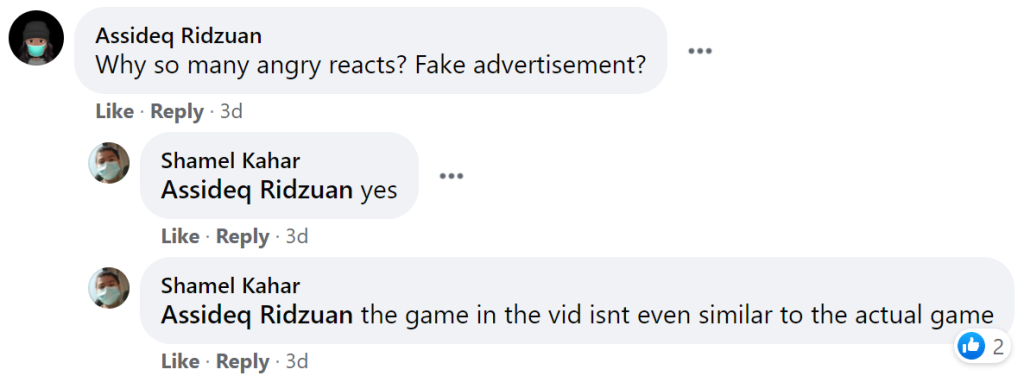

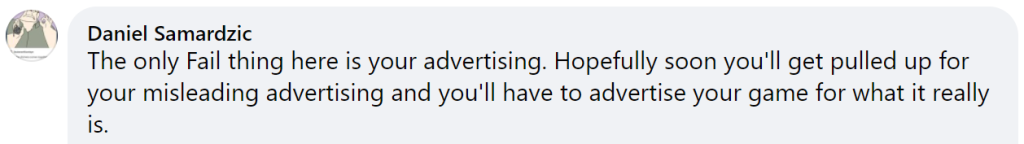

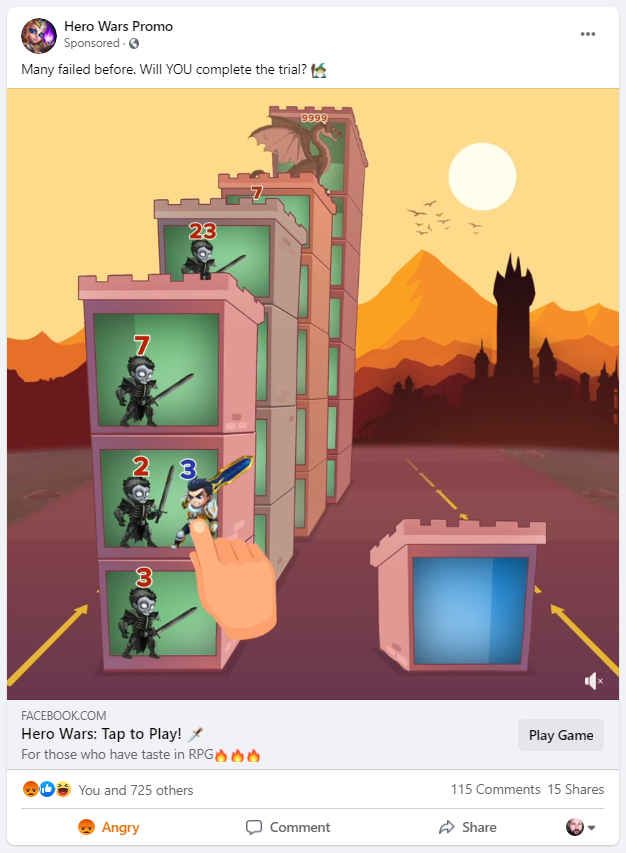

Here’s an example of one of many ads run for the mobile game Hero Wars, which kept showing fake game footage to hoodwink people into downloading it.

You can see the angry reactions of the users and the comments. Despite probably at least dozens, if not hundreds, of reports (including my own) that people spoke of in the comments, the ads were found in compliance with Facebook’s “standards”.

This was just one out of many similar games, actively promoted via misleading ads on Facebook (but also Google, including mobile ads), despite popular user backlash.

This problem is so pervasive that it’s really difficult to accept the company’s explanations that simply some bad apples slipped through the net of its, allegedly increasingly sophisticated, scam filters.

Instead of due diligence, Facebook appears to be practising due negligence — i.e. allowing questionable ads to continue to run on the platform, regardless of user reports, unless it becomes a PR problem for the company when some media outlets pick it up.

And then, all it does is finally stop the scam and have some spokesperson send an email saying they’re doing all they can.

They have the tools

Facebook is able to stop all scams, and it’s obvious to anybody who has ever tried advertising on the platform.

As some of you probably know, I’ve run my page and a blog largely to Facebook audiences for a few years, and it is downright impossible to run any advertising before it is manually pre-approved by a person inside the company.

You just can’t do it. If I occasionally try to boost a post on a weekend, I typically have to wait until Monday to get it activated.

So, if they find time to screen a random bloke spending 20 bucks to boost a post without any words that could reasonably trigger a red flag, how are they allegedly not able to screen an investment scam using Lee Hsien Loong’s photo or video, promising some hefty returns to participants?

Or Elon Musk peddling a dozen different crypto coins?

How can they permit verified profiles to completely change their names or even languages used? How has it not triggered any manual review before such a page is then able to run global advertising?

Facebook is technologically capable of automatically banning you for calling someone fat, but it claims it can’t detect obvious scams that use public figures in paid advertising? Does anybody buy this excuse?

I’m pretty sure it is excellent at screening for gore, violence or pornography, which means it should be able to detect Musk’s face in a dubious ad or OCR (Optical Character Recognition) the image for misleading claims, but somehow, it doesn’t.

Even worse, it regularly ignores community reports from its very own users. What else can you call this if not complicity in the crime?

Let’s consider the following: what is the difference between policing people’s posts and comments, and advertising?

Money.

Isn’t it peculiar how differently Facebook treats content for which it gets paid large sums of money versus your opinions that it may find inappropriate for some reason?

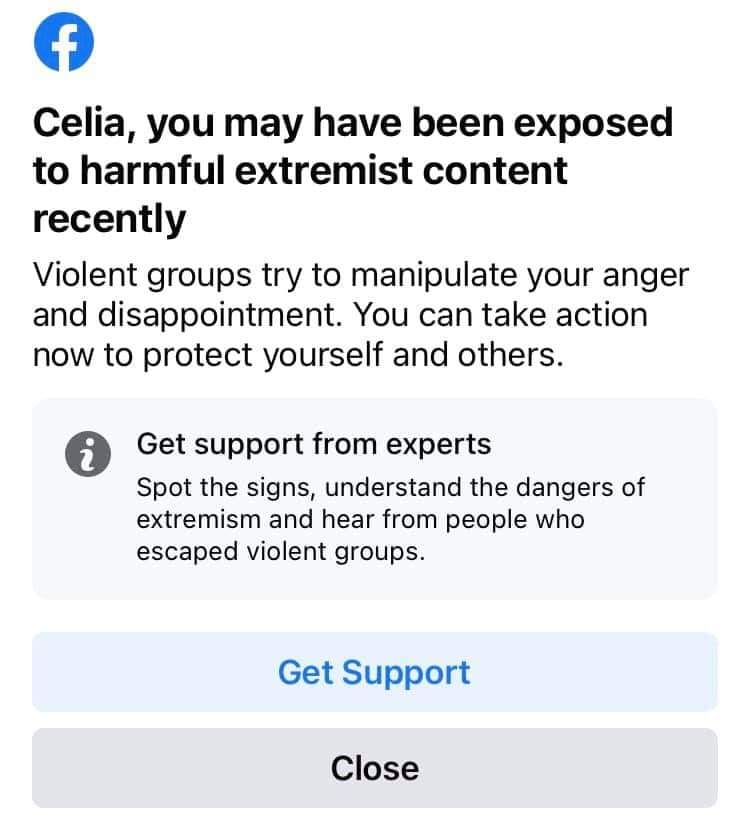

Remember when Facebook warned you about being exposed to “extremist content”? Somehow they can’t do that with scams…

And since it bears no legal responsibility for criminal activity on its platform, what exactly is the incentive to combat scams, when it can always say users should just be vigilant?

It gets its money, and it doesn’t care what happens to yours.

Time for governments to step in

If Facebook is unwilling to regulate itself, then it is high time for government regulators to step in and discipline it (as well as other companies like Google).

The problem is that Big Tech executives can typically run circles around middle-aged or older politicians who are struggling to use a smartphone.

They will deflect, pointing to the huge number of users, the volume of traffic, and the complexity of screening millions of ads. But that’s all rubbish, given how capable they are of censorship when it comes to regular individuals just having arguments online.

Every advertising platform should bear complete responsibility for the ads that it runs, including the consequences of crimes that result from misleading information it allowed to be published.

Anybody who clicks an advertisement placed on Facebook, Google, Instagram or anywhere else, should be assured that the company on the other end has been verified.

There is absolutely no reason why Big Tech should be allowed to make billions of dollars from content they can’t be bothered to vet, while providing a link between billions of people around the world and criminals trying to rob them.

Why should we pay the price? These businesses are already free to monetise our personal information, they can bombard us with unwanted content and on top of that, allow organised digital crime to thrive, while bearing no risk themselves.

Do you think Facebook would allow that fake healthy food scam to run its ads if it had to pay back S$20,000 to the cheated woman?

Facebook and others should fully reimburse every victim of every scam that their platforms enabled and, in addition, pay hefty fines levied as a percentage of their revenue.

They will only be interested in solving the problem when their bottom lines — of billions of dollars each year — become directly and significantly affected.

Modern technology should serve humanity, not be just another vector of criminal activity from which complicit middlemen make billions while turning their heads away or blaming the victims.

They have the financial and technical means to stop it right now. If they do not, then they should be forced to by law.

Featured Image Credit: pixinoo via Shutterstock / CanStockPhoto
