The compliance countdown has started for AI companies operating in the EU

Illustration by Cath Virginia / The Verge | Photos by Getty Images


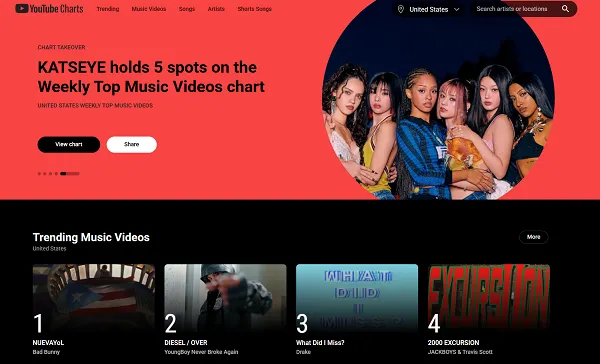

The AI Act is a sweeping set of rules for technology companies operating in the EU, which bans certain uses of AI tools and puts transparency requirements on developers. The law officially passed in March after two years of back and forth and includes several phases for compliance that will happen in waves.

Publication of the full text officially starts the clock on the compliance deadlines that companies must meet. The AI Act will enter into force in 20 days, on August 1st, and all future deadlines are tied to that date.

The new law prohibits certain uses of AI, and those bans fall under the first deadline. The AI Act bans applications “that threaten citizens’ rights,” such as biometric categorization used to deduce information like sexual orientation or religion, or the untargeted scraping of faces from the internet or security camera footage. Systems that try to read emotions are banned in workplaces and schools, as are social scoring systems. The use of predictive policing tools is also banned in some instances. These uses are considered to pose an “unacceptable risk,” and tech companies will have until February 2nd, 2025, to comply.

Nine months after the law takes effect, on May 2nd, 2025, codes of practice are due for developers. These are sets of rules that outline what legal compliance looks like: the benchmarks they need to hit, key performance indicators, specific transparency requirements, and more. Three months after that, in August 2025, “general purpose AI systems” like chatbots must comply with copyright law and fulfill transparency requirements, such as sharing summaries of the data used to train the systems.

By August 2026, the rules of the AI Act will apply generally to companies operating in the EU. Developers of some “high risk” AI systems will have up to 36 months (until August 2027) to comply with rules around things like risk assessment and human oversight. This risk level includes applications integrated into infrastructure, employment, essential services like banking and healthcare, and the justice system.

Failure to comply will result in fines for the offending company, set as either a percentage of global annual revenue or a fixed amount. Violations involving banned systems carry the highest fine: up to €35 million (about $38 million) or 7 percent of global annual revenue, whichever is higher.
